How We Launch and Manage Quality of Data Annotation Projects at Mindy Support

Category: AI Insights

Published date: 12.03.2021

Read time: 8 min

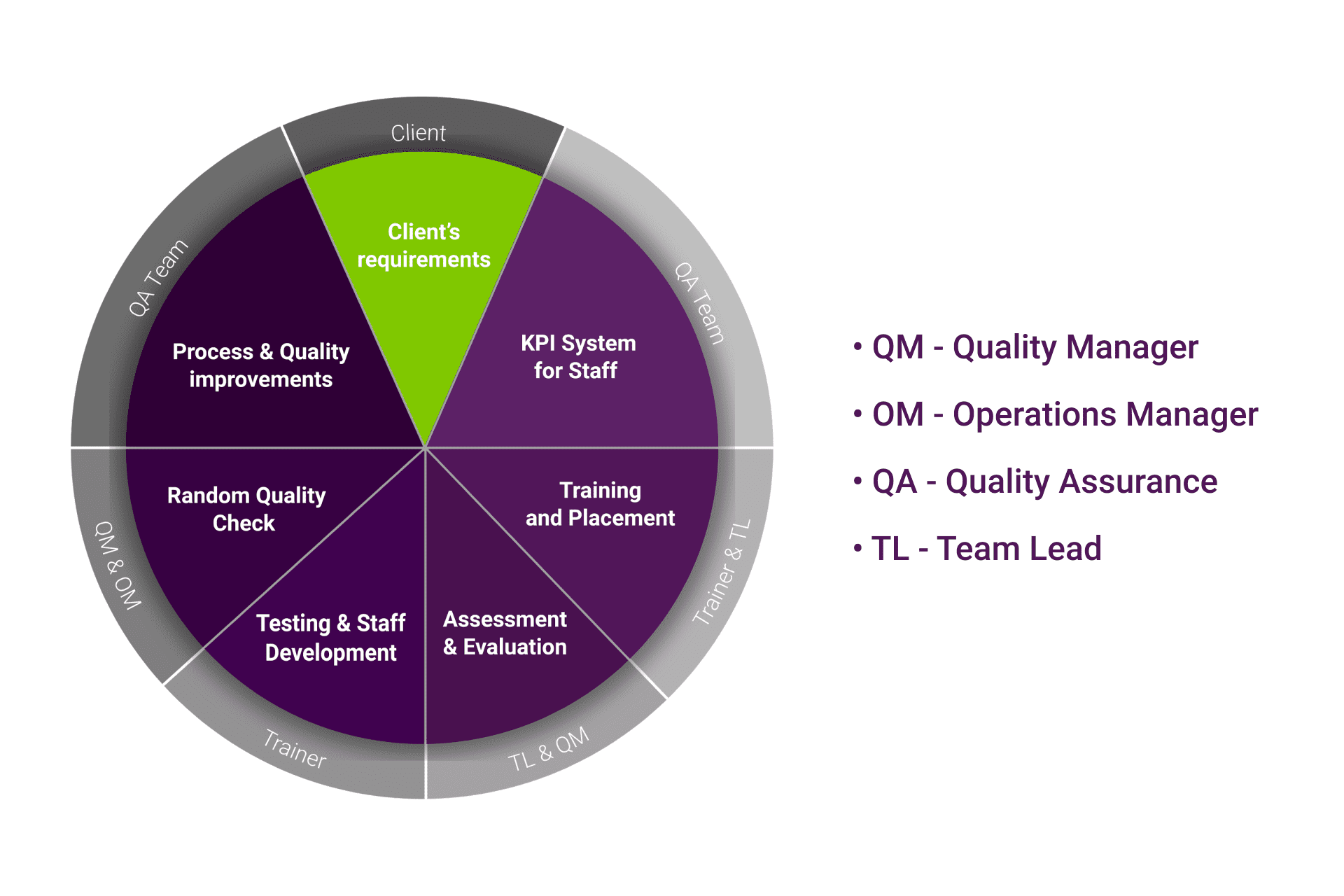

Launching a data annotation project involves many factors, from understanding the requirements to selecting tools and recruiting annotators. Just as important is making sure every task is completed at the required level of quality for the entire duration of the project. Because Mindy Support has extensive experience implementing data annotation projects of various sizes and complexities, we have developed a standard process for taking on new projects. Today we would like to share some of its steps and explain how we ensure the highest quality of annotation work.

The Pre-launch Phase

Even before a project starts, we assign it a dedicated project manager and quality assurance manager. They are responsible for studying all of the client's instructions and getting answers to any questions about the requirements. Next, we get acquainted with the client's tool. If no tool was specified, we configure our internal tools or open-source ones. Regardless of the tool we use, we make sure to learn all of the technical details that matter to the client, such as the import and export formats. The quality assurance manager then completes a test task in the tool to make sure everything is working correctly.

Launching the Project

Once the pre-launch steps are complete, we start recruiting data annotators, taking into account both the requirements of the project and the requirements for the annotators themselves. The quality manager, alone or together with the project manager, then trains the team lead and the data annotators. After the training, we test their knowledge of the project to confirm that they understood the instructions correctly. Only after all of these instructional phases are complete do the data annotators start working.

Ensuring the Quality of the Work Done

Every day, usually in the morning, the team lead, the QA manager, and the quality manager check the quality of the annotations, sometimes joined by the operations manager. They review between 10% and 100% of the annotation work, depending on the quality requirements and the QA phases the client has paid for. Throughout the project, the quality manager and the team lead support the data annotators and answer any questions they have.

Sometimes an individual data annotator's quality score does not meet our requirements. In such cases, we provide additional training for that annotator, and if their score does not improve, we may replace them with another data annotator. When the project concludes, we conduct a final QA inspection, again covering between 10% and 100% of the total work, to confirm that we have met all of the KPIs set by the client. Only then is all of the data sent to the client.
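To make the spot-check sampling and per-annotator scoring described above more concrete, here is a minimal Python sketch. It is a hypothetical illustration, not Mindy Support's actual tooling: the task record fields (`annotator`, `passed`), the function names, and the retraining threshold are all assumptions made for this example.

```python
import random
from collections import defaultdict

# Hypothetical task record: {"id": ..., "annotator": str, "passed": bool},
# where "passed" is the reviewer's verdict on that annotation.

def sample_for_review(tasks, fraction, seed=None):
    """Randomly select a share of completed tasks (10% to 100%) for QA review."""
    if not 0.1 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0.1 and 1.0")
    if not tasks:
        return []
    rng = random.Random(seed)  # seeded so the same sample can be reproduced
    k = max(1, round(len(tasks) * fraction))
    return rng.sample(tasks, k)

def quality_scores(reviewed):
    """Return each annotator's share of reviewed tasks that passed QA."""
    totals = defaultdict(int)
    passed = defaultdict(int)
    for task in reviewed:
        totals[task["annotator"]] += 1
        passed[task["annotator"]] += int(task["passed"])
    return {name: passed[name] / totals[name] for name in totals}

def flag_for_retraining(scores, threshold):
    """List annotators whose score falls below the (assumed) required threshold."""
    return sorted(name for name, score in scores.items() if score < threshold)

if __name__ == "__main__":
    # Toy data: 20 tasks split between two hypothetical annotators.
    tasks = [{"id": i, "annotator": "A" if i % 2 else "B", "passed": i % 3 != 0}
             for i in range(20)]
    reviewed = sample_for_review(tasks, fraction=0.5, seed=42)
    scores = quality_scores(reviewed)
    print(scores)
    print(flag_for_retraining(scores, threshold=0.65))
```

With `fraction=1.0` every task is reviewed; lowering it toward 0.1 mirrors a lighter spot check. Seeding the random generator keeps the sample reproducible, so a review can be repeated on exactly the same tasks.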

Redoing Some of the Work, if Needed

If the client does not accept our annotation work because of low quality scores, the quality manager and the project manager review all of the mistakes the client reported. The project manager clarifies any questions about these mistakes with the client. If only a small amount of rework is needed, the QA manager or the quality manager does it themselves. If large volumes of work must be redone, we conduct additional training for the team lead and the data annotators, where we discuss the client's comments on the results and any available QA reports, explain every mistake made while processing the project, and answer any questions that arise.

After the training, the data annotators are tested again to make sure they correctly understand all of the instructions. They then resume work on the project under the same QA processes performed by the team lead, the quality manager, and the other stakeholders involved. At this stage, the reviewers check between 10% and 20% of the total work. The team leads and project managers are always available to answer the data annotators' questions. After the rework is completed, we send the data to the client.

Additional Ways We Ensure the Highest Quality Work

For one of our largest and longest-running projects, we have trainers who teach new data annotators how to process images, and in the future such trainers will be involved in other projects as well. We try to check each data annotator's work daily so that anyone making a lot of mistakes can be identified right away. We also test each data annotator weekly on all tasks to make sure they properly understand the instructions.

We also hold calibration calls with the team leads, where even the smallest nuances of the tasks are analyzed in detail. We send weekly reports with quality indicators for each project or task, and each report describes the issues causing quality problems and how to fix them. The data annotators always have access to a chat where they can ask the team lead or the quality manager any questions. We also record videos that explain the instructions more clearly, and any points that become unclear while working on a project or task are clarified with the client.

We Make Sure to Get the Job Done Right the First Time

As described above, we have extensive QA measures and training processes in place to implement your project smoothly and make sure the work is done right the first time. And as one of the largest BPO providers in Eastern Europe, with more than 2,000 employees in six locations across Ukraine, we can scale your project while following all of our QA processes. Contact us today to learn how we can help you.