Quality Assurance Services

Boost your AI project’s accuracy with our quality validation and assurance services.

Contact us today to enhance your data quality!

Quality Excellence

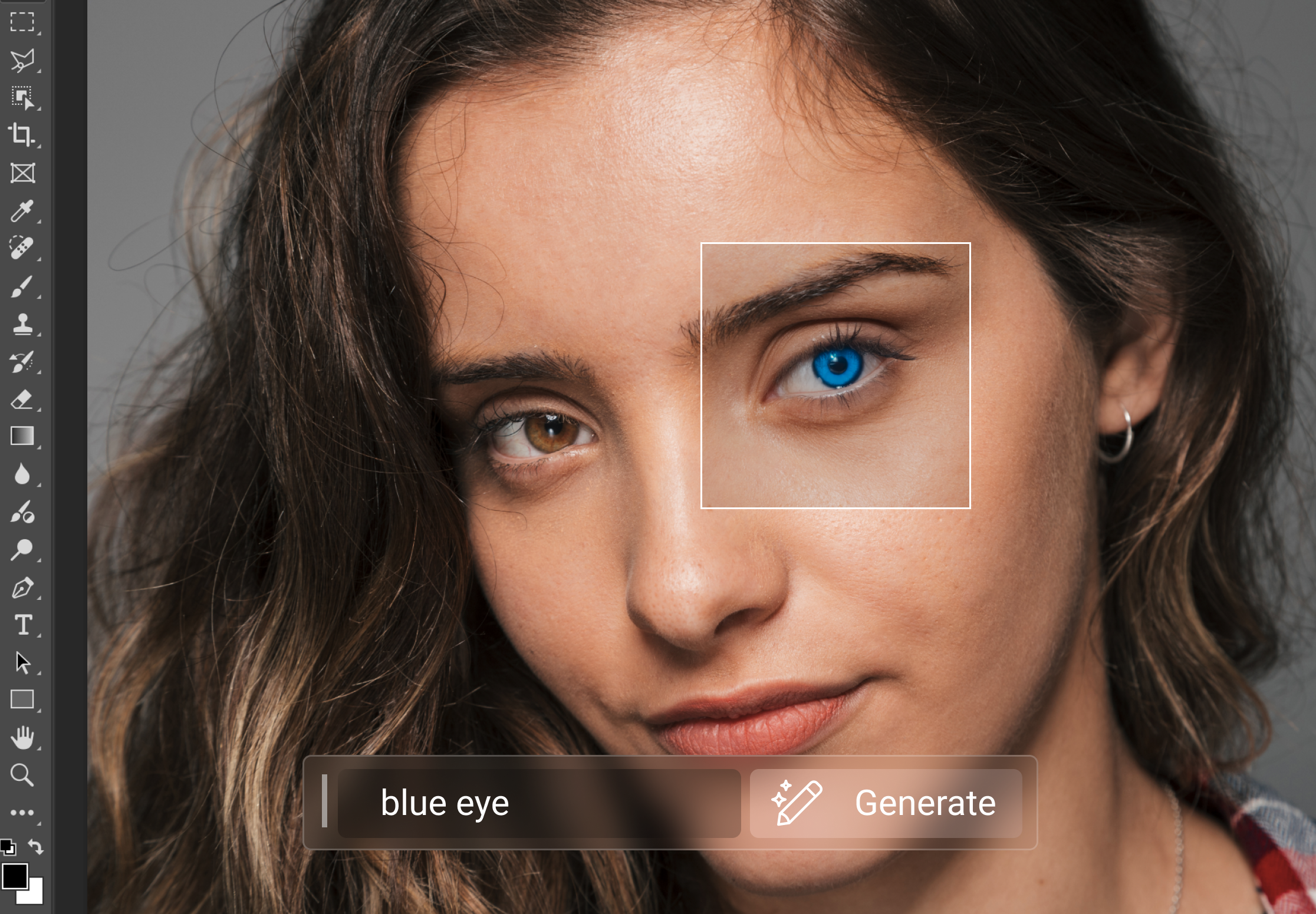

Quality assurance services for AI projects help ensure the reliability, accuracy, and ethical compliance of AI systems, improving performance and reducing the risk of errors and bias.

Quality Validation

Our quality validation services rigorously check annotations against the provided guidelines to ensure they meet project standards. We identify and correct annotation mistakes, improving data accuracy. We also add any missing annotations, raising the overall quality and consistency of the dataset.

Quality Assurance

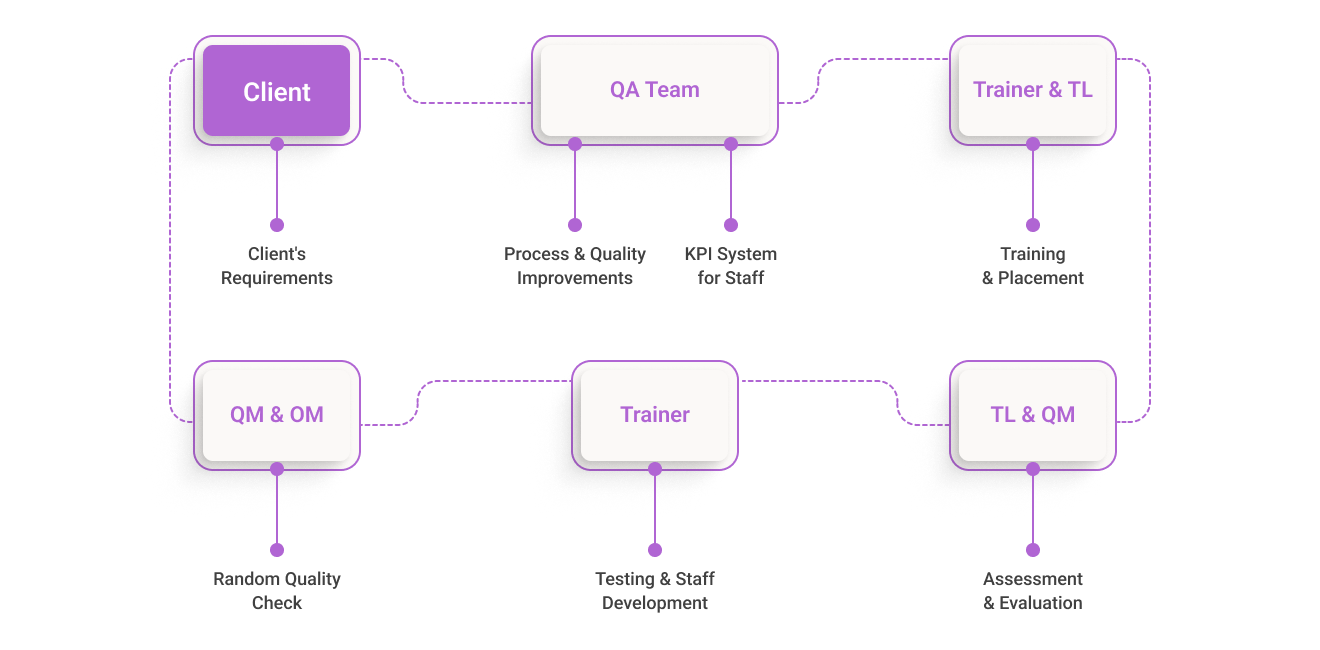

Quality Management Methodology

This comprehensive quality management methodology allows us to achieve quality levels of 99% or higher, as confirmed by our customers.

Let’s Collaborate

Your Benefits

There are many benefits to entrusting us with your quality assurance needs. These include:

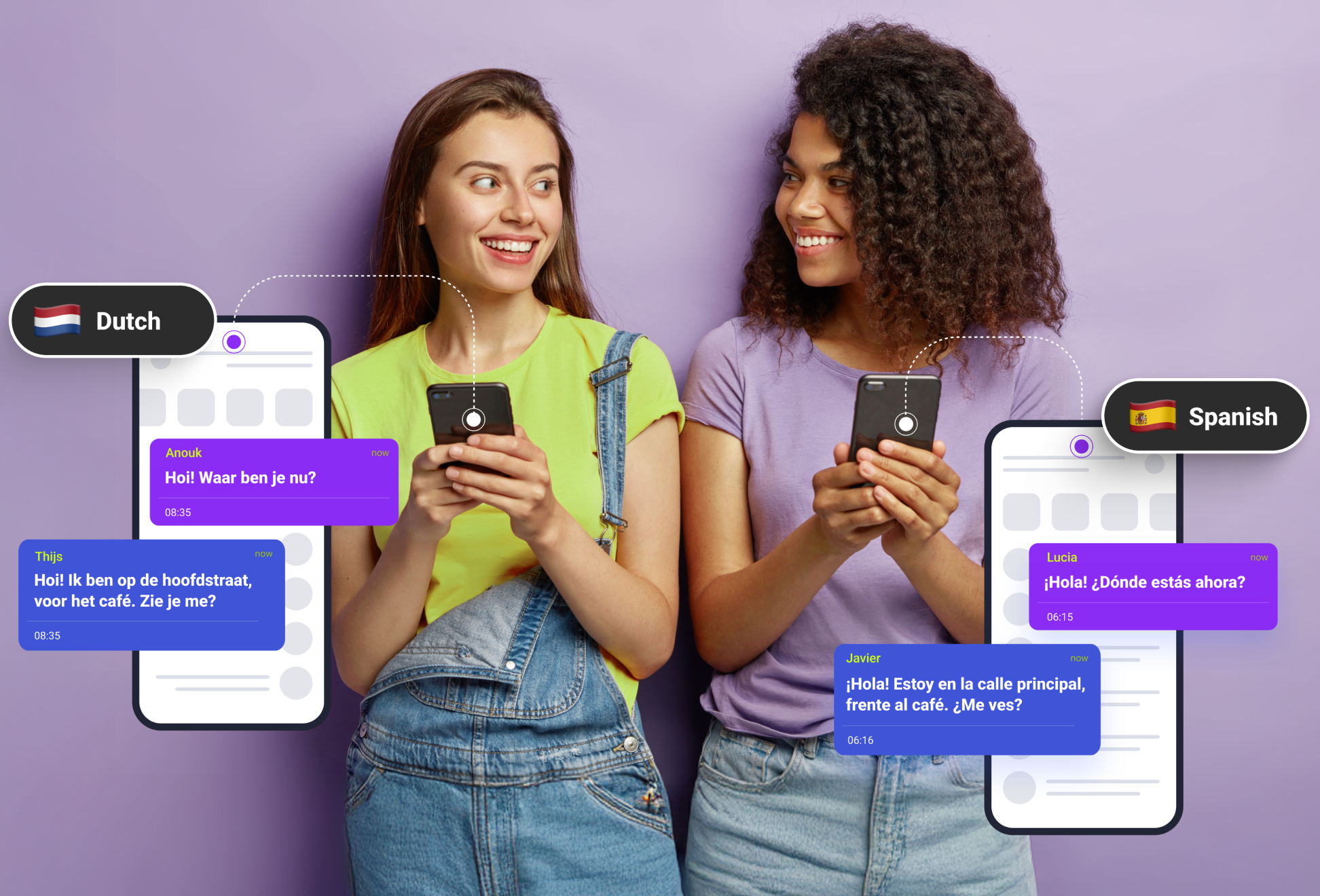

Our Global Presence

Because Mindy Support has offices around the world, we can recruit globally and provide multilingual services in more than 40 languages. This wide global footprint allows us to cover all the data annotation services needed to develop generative AI and large language model (LLM) solutions.

Build a tailor-made team based on your needs

Our minimum threshold for starting a new project is 735 productive person-hours per month (equivalent to five graphic annotators working on the task for a month).