How Machine Learning in Automotive Makes Self-Driving Cars a Reality

The development of self-driving cars is one of the most popular directions in AI and machine learning. In 2020, companies like Waymo made advances that allow customers to hail self-driving taxis through a service called Waymo One, and Alibaba-backed AutoX launched a fleet of fully automated cars in Shenzhen with no safety drivers on board. Automotive artificial intelligence is beginning to take over driving from humans by enabling self-driving cars that use sensors to gather data about their surroundings. But how do self-driving cars interpret that data? Answering that question is the biggest use case of machine learning in automotive.

How self-driving cars make decisions

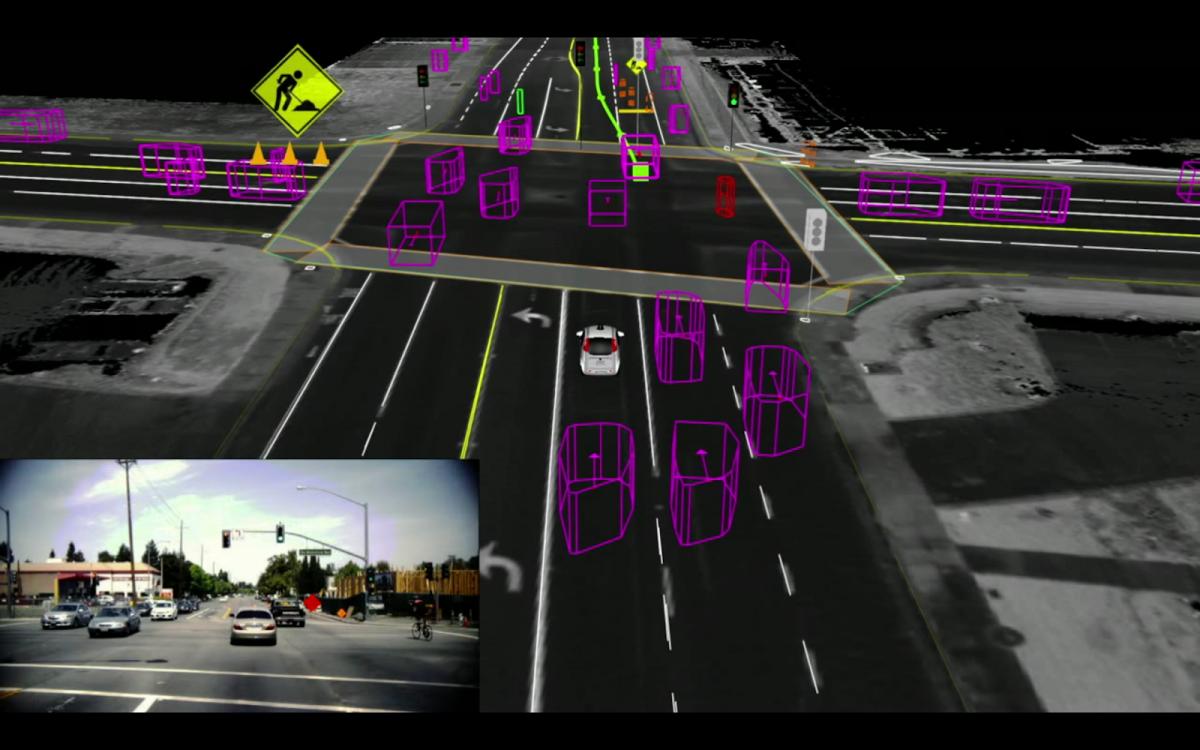

Driverless cars identify objects, interpret situations, and make decisions using object detection and object classification algorithms: they detect the objects around them, classify them, and work out what those objects mean for the car. Mindy Support provides comprehensive data annotation services that help train these machine learning algorithms to make the right decisions when navigating the roads.

Diversity and redundancy

Self-driving systems rely on many algorithms whose functions overlap, so that if one fails, another can compensate. This redundancy minimizes failures and improves safety. Together, these algorithms interpret road signs, identify lanes, and recognize intersections.

How does a self-driving car see?

Self-driving cars rely on three major types of sensors that work together much like human eyes and a brain: cameras, radar, and LiDAR. Together, they give the car a clear view of its environment and help it determine the location, speed, and 3D shape of nearby objects. Many self-driving cars are also built with inertial measurement units (IMUs), which measure the vehicle's acceleration and rotation to help track its position and orientation.
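One common way to combine overlapping measurements of the same quantity is inverse-variance weighting: each sensor's estimate counts in proportion to how precise it is, so a noisy reading cannot dominate a precise one. This is a minimal sketch, not any manufacturer's actual fusion system; the `RangeEstimate` type, the sensor names, and the variance values are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class RangeEstimate:
    source: str        # hypothetical sensor name, e.g. "camera", "radar", "lidar"
    distance_m: float  # estimated distance to the object, in metres
    variance: float    # uncertainty of the estimate (m^2); smaller means more trusted


def fuse(estimates: Iterable[RangeEstimate]) -> Tuple[float, float]:
    """Inverse-variance weighted average of independent estimates.

    Returns the fused distance and its variance. Each estimate is weighted by
    1 / variance, so precise sensors count more than noisy ones.
    """
    weights, values = [], []
    for e in estimates:
        if e.variance <= 0:
            raise ValueError(f"variance must be positive for {e.source}")
        weights.append(1.0 / e.variance)
        values.append(e.distance_m)
    if not weights:
        raise ValueError("need at least one estimate")
    total = sum(weights)
    fused = sum(w * v for w, v in zip(weights, values)) / total
    return fused, 1.0 / total


# Illustrative readings: the camera's depth estimate is assumed to be the noisiest.
readings = [
    RangeEstimate("camera", 41.0, 4.0),
    RangeEstimate("radar", 39.5, 1.0),
    RangeEstimate("lidar", 40.0, 0.25),
]
distance, variance = fuse(readings)
print(f"fused distance: {distance:.2f} m (variance {variance:.3f} m^2)")
```

The fused variance is smaller than that of any single sensor, which is one concrete sense in which redundancy improves reliability.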

Reliable cameras

Self-driving cars carry multiple cameras pointing in different directions to cover their surroundings from every angle. Some cameras have a wide field of view of about 120 degrees, while others have a narrower one for seeing farther ahead. Fish-eye cameras provide very wide-angle views that help with parking.

Radar detectors

Radar sensors complement the cameras at night or whenever visibility is poor. They emit radio waves that bounce off objects and return to the sensor; from these echoes, the car works out each object's distance and speed.
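The two quantities radar reports follow from simple physics: distance comes from the round-trip time of the echo, and relative speed comes from the Doppler shift in its frequency. The sketch below uses a simplified pulsed model; the 77 GHz carrier matches a common automotive radar band, but the echo delay and Doppler shift are made-up illustrative values, and real sensors often use frequency-modulated waveforms rather than simple pulses.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def radar_range(round_trip_s: float) -> float:
    """Distance to the target: the wave travels there and back, so halve it."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def radial_speed(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Relative (radial) speed from the Doppler shift of the echo.

    A positive shift means the target is approaching. The factor of 2 accounts
    for the shift occurring on both the outbound and the return path.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


# Illustrative values for a 77 GHz automotive radar.
echo_delay = 400e-9  # 400 ns round trip
shift = 10_266.0     # measured Doppler shift, Hz
print(f"range: {radar_range(echo_delay):.1f} m")
print(f"closing speed: {radial_speed(shift, 77e9):.2f} m/s")
```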

Laser focus

LiDAR sensors measure distance using pulsed lasers, giving driverless cars a 3D view of their surroundings that adds richer information about shape and depth.

LiDAR

LiDAR is one of the most important technologies in the development of self-driving vehicles. It emits pulses of light that bounce off objects and return to the sensor, which uses the round-trip time to calculate the distance to each object. The result is a 3D point cloud, a digital representation of how the car sees the physical world.
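As a minimal sketch of how individual returns become a point cloud: each pulse's round-trip time gives a distance, and the beam's known horizontal (azimuth) and vertical (elevation) angles place that distance in 3D. The axis convention (x forward, y left, z up) and the sample returns are assumptions for illustration; real sensors add calibration and motion correction that this omits.

```python
import math
from typing import List, Tuple

SPEED_OF_LIGHT = 299_792_458.0  # m/s

Point = Tuple[float, float, float]


def tof_to_distance(round_trip_s: float) -> float:
    # The light pulse goes out and back, so the one-way distance is half.
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_point(distance: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one return (range plus beam angles) to x, y, z in the sensor frame.

    Assumed convention: x forward, y left, z up; azimuth measured from x toward y,
    elevation measured upward from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), distance * math.sin(el))


def build_point_cloud(returns: List[Tuple[float, float, float]]) -> List[Point]:
    """returns: (round_trip_seconds, azimuth_deg, elevation_deg) for each pulse."""
    return [to_point(tof_to_distance(t), az, el) for t, az, el in returns]


# Three hypothetical returns from a single scan.
scan = [(66.7e-9, 0.0, 0.0), (133.4e-9, 90.0, 0.0), (100.0e-9, 45.0, -2.0)]
for x, y, z in build_point_cloud(scan):
    print(f"({x:6.2f}, {y:6.2f}, {z:6.2f})")
```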

Tesla vs. The Rest of the Field

It is worth noting that there is a significant debate in autonomous vehicle development between Tesla and other self-driving car developers. Industry leaders like Waymo, and nearly everybody else, use LiDAR sensors; Tesla does not. Instead, Tesla relies on eight cameras placed around the vehicle, and its AI system, built around a neural network architecture called HydraNet, stitches the images together so the vehicle can see the road and its surroundings. One reason Tesla avoids LiDAR is that the sensor is a bulky object that sits on the roof of the car and detracts from the vehicle's aesthetics. Interestingly, a recent Forbes article suggests that even Tesla may have come around to LiDAR, but we will have to wait and see.

The importance of Data Annotation in Automotive AI Projects

In the previous section, we talked about some of the ways AI-powered vehicles see the physical world. But how do they identify street signs, other cars, road markings, and everything else they encounter on the road? This is where data annotation plays a crucial role. Data annotation is the process of labeling raw training data so that the AI system knows what it needs to learn. In the automotive sector, the most common annotation methods include 3D point cloud annotation, video labeling, and full scene segmentation, among many others.

One of the most interesting projects Mindy Support has recently worked on involved tracking driver eye movements to determine the driver's condition, for example, whether the driver is drowsy or under the influence of a substance. The system also needed to navigate the surrounding environment, correctly identify all of the objects on the road, and take the necessary actions. This project required a significant amount of data annotation: we annotated about 100,000 unique videos to help the client complete it.
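To illustrate how eye tracking can reveal drowsiness, here is a minimal sketch of the eye aspect ratio (EAR), a widely used heuristic that compares the height of the eye opening to its width using six landmarks around each eye. This is not the client's system: it assumes a separate face-landmark detector supplies the points, and the 0.2 threshold and 15-frame window are illustrative assumptions rather than tuned values.

```python
import math
from typing import Iterable, Sequence, Tuple

Landmark = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Landmark]) -> float:
    """EAR from six eye landmarks ordered p1..p6 around the eye.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and p6, p5 on
    the lower lid. The ratio drops toward zero as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def drowsy(ear_per_frame: Iterable[float], threshold: float = 0.2, min_frames: int = 15) -> bool:
    """Flag drowsiness when EAR stays below `threshold` for `min_frames` consecutive frames.

    Both defaults are illustrative assumptions.
    """
    run = 0
    for ear in ear_per_frame:
        run = run + 1 if ear < threshold else 0
        if run >= min_frames:
            return True
    return False


# Hypothetical landmark positions for an open and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
print(round(eye_aspect_ratio(open_eye), 3), round(eye_aspect_ratio(closed_eye), 3))
print(drowsy([0.3] * 10 + [0.05] * 20))
```

Requiring several consecutive low-EAR frames separates a prolonged eye closure from an ordinary blink.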

The quality of data annotation is critical, since it ultimately determines how accurately the vehicle can navigate its surroundings, and people's lives are at stake. After all, one of the major goals of self-driving cars is increased safety, since 94% of serious crashes are the result of human error. The aim is to reduce the human factor in driving and make the car as accurate and safe as possible.
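One common way to measure annotation quality, shown here as an assumption rather than a description of Mindy Support's own process, is to have two annotators label the same items and compute Cohen's kappa, which measures their agreement after correcting for the agreement expected by chance. The object labels below are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Agreement between two annotators, corrected for chance.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement rate
    and p_e is the agreement expected if each annotator labelled at random
    with their own label frequencies.
    """
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n
    freq_a, freq_b = Counter(a), Counter(b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both annotators used the same single label throughout
    return (p_o - p_e) / (1.0 - p_e)


# Two annotators labelling the same eight hypothetical objects.
annotator_1 = ["car", "car", "pedestrian", "sign", "car", "pedestrian", "sign", "car"]
annotator_2 = ["car", "car", "pedestrian", "car", "car", "pedestrian", "sign", "sign"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))
```

Here the annotators agree on 6 of 8 items (75%), but because some agreement would occur by chance, kappa comes out lower, at 0.6. Items with low agreement are natural candidates for review.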

Learn more about how Mindy Support manages the quality of data annotation projects.

How automotive Artificial Intelligence algorithms are used for self-driving cars

To enable self-driving cars to make decisions, machine learning algorithms are trained on real-world datasets.

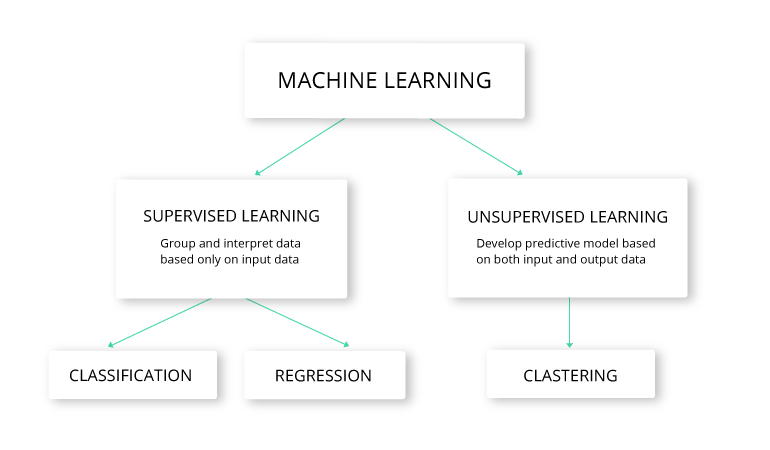

Supervised vs unsupervised learning

Two of the main approaches in machine learning are supervised and unsupervised learning. In unsupervised learning, an algorithm receives unlabeled data and no instructions on how to interpret it, so it has to discover patterns, such as groups of similar examples, on its own.

In supervised learning, the algorithm is trained on labeled examples: each input comes paired with the correct answer, such as an image tagged "stop sign." This is the preferred approach for self-driving cars. Because the algorithm learns from a fully labeled dataset, supervised learning is especially useful for classification.
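To make the contrast concrete, the sketch below trains a supervised nearest-centroid classifier on labeled points and runs unsupervised k-means on the same points without labels. Both are simple stand-ins chosen for illustration, not the algorithms any particular car uses, and the (width, height) features are hypothetical.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]  # hypothetical features: (width_m, height_m)


def mean(points: Sequence[Point]) -> Point:
    return (sum(p[0] for p in points) / len(points), sum(p[1] for p in points) / len(points))


# Supervised: every training point comes with a label.
def fit_nearest_centroid(points: Sequence[Point], labels: Sequence[str]) -> Dict[str, Point]:
    groups: Dict[str, List[Point]] = {}
    for p, y in zip(points, labels):
        groups.setdefault(y, []).append(p)
    return {y: mean(ps) for y, ps in groups.items()}


def predict(centroids: Dict[str, Point], p: Point) -> str:
    # Assign the label whose class centre is closest.
    return min(centroids, key=lambda y: math.dist(p, centroids[y]))


# Unsupervised: same points, no labels; k-means must find the groups itself.
def kmeans(points: Sequence[Point], k: int, iters: int = 20, seed: int = 0) -> List[int]:
    rng = random.Random(seed)
    centers = rng.sample(list(points), k)
    assign = [0] * len(points)
    for _ in range(iters):
        assign = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:  # keep the old centre if a cluster empties
                centers[c] = mean(members)
    return assign


points = [(0.5, 1.7), (0.6, 1.8), (0.7, 1.6), (1.8, 1.4), (1.9, 1.5), (2.0, 1.5)]
labels = ["pedestrian"] * 3 + ["car"] * 3

model = fit_nearest_centroid(points, labels)
print(predict(model, (0.55, 1.75)))  # pedestrian
print(kmeans(points, k=2))           # anonymous cluster ids only
```

The supervised model can name what it sees because it was given the names; k-means can only report that some points belong together, which is why labeled data matters for classification.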

Machine learning algorithms used by self-driving cars

SIFT (scale-invariant feature transform) for feature extraction

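SIFT detects distinctive keypoints in an image, such as corners, and describes each one with a descriptor that stays stable when the object appears larger, smaller, or rotated. For example, the corners of a triangular warning sign can serve as keypoints. By matching those descriptors against the ones in a new image, a car can recognize the sign even when it is seen at a different distance or angle.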
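The matching step can be sketched on its own. Computing real SIFT keypoints and descriptors is usually left to a library such as OpenCV; here, random 128-dimensional vectors (SIFT descriptors have 128 values) stand in for them. Each reference descriptor is matched to its nearest scene descriptor, and the match is kept only if it is clearly closer than the second-nearest candidate (Lowe's ratio test). The data and the 0.8 ratio are illustrative assumptions.

```python
import numpy as np


def match_descriptors(ref: np.ndarray, scene: np.ndarray, ratio: float = 0.8) -> list:
    """Match each reference descriptor to its nearest scene descriptor.

    A match is kept only if the nearest neighbour is clearly closer than the
    second-nearest (ratio test), which discards ambiguous matches.
    ref: (n, d) array; scene: (m, d) array with m >= 2.
    Returns a list of (reference_index, scene_index) pairs.
    """
    # Pairwise Euclidean distances, shape (n, m).
    dists = np.linalg.norm(ref[:, None, :] - scene[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        nearest, second = np.argsort(row)[:2]
        if row[nearest] < ratio * row[second]:
            matches.append((i, int(nearest)))
    return matches


rng = np.random.default_rng(0)
sign = rng.random((3, 128))     # stand-ins for 3 keypoints on a reference sign
perm = np.array([2, 0, 1])      # the keypoints appear in a different order in the scene
clutter = rng.random((5, 128))  # unrelated keypoints elsewhere in the scene
scene = np.vstack([sign[perm] + rng.normal(0, 0.01, (3, 128)), clutter])
print(match_descriptors(sign, scene))
```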

AdaBoost for data classification

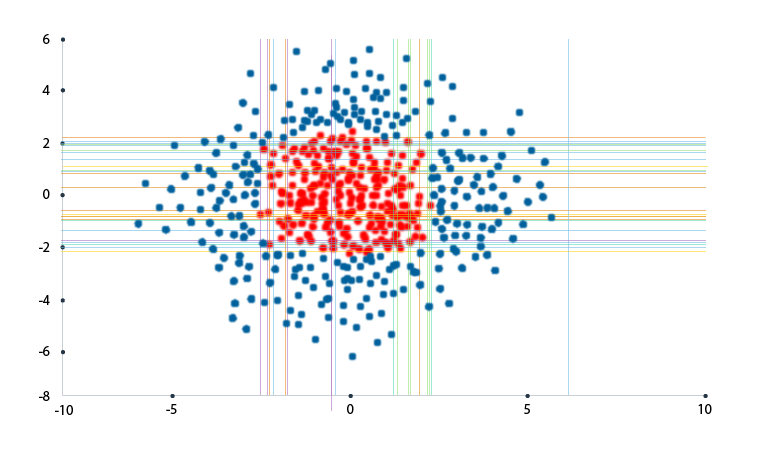

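AdaBoost (adaptive boosting) improves classification, and with it the vehicle's decision-making, by combining many weak classifiers, each only slightly better than guessing, into a single strong classifier. After each round, it gives more weight to the training examples the previous classifiers got wrong, so the next classifier concentrates on the hard cases.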

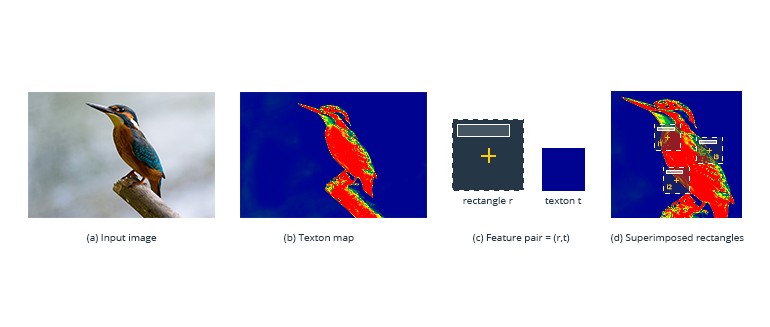
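A compact sketch of AdaBoost with decision stumps (one-feature threshold rules) as the weak classifiers is shown below. The three binary "cues" and the rule that an object counts as a stop sign when at least two cues are present are hypothetical. No single stump can learn that rule (the best one misclassifies a quarter of the cases), but three weighted stumps combined by AdaBoost classify every case correctly.

```python
import itertools

import numpy as np


def stump_predict(X, j, thr, polarity):
    # Predict +1 on one side of the threshold on feature j and -1 on the other.
    return np.where(polarity * (X[:, j] - thr) >= 0, 1, -1)


def fit_stump(X, y, w):
    """Find the single-feature threshold rule with the lowest weighted error."""
    best = (np.inf, 0, 0.0, 1)  # (error, feature, threshold, polarity)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for polarity in (1, -1):
                err = w[stump_predict(X, j, thr, polarity) != y].sum()
                if err < best[0]:
                    best = (err, j, thr, polarity)
    return best


def adaboost(X, y, rounds):
    """y must contain labels -1/+1. Returns a list of (alpha, feature, thr, polarity)."""
    w = np.full(len(y), 1.0 / len(y))
    ensemble = []
    for _ in range(rounds):
        err, j, thr, pol = fit_stump(X, y, w)
        err = max(err, 1e-10)  # avoid division by zero on a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)  # more accurate stumps get larger votes
        w *= np.exp(-alpha * y * stump_predict(X, j, thr, pol))  # misclassified points gain weight
        w /= w.sum()
        ensemble.append((alpha, j, thr, pol))
    return ensemble


def predict(ensemble, X):
    score = sum(alpha * stump_predict(X, j, thr, pol) for alpha, j, thr, pol in ensemble)
    return np.where(score >= 0, 1, -1)


# Hypothetical binary cues: red colour, octagonal shape, text detected.
X = np.array(list(itertools.product([0, 1], repeat=3)), dtype=float)
y = np.where(X.sum(axis=1) >= 2, 1, -1)  # "stop sign" if at least two cues are present

ensemble = adaboost(X, y, rounds=3)
print(predict(ensemble, X), y)
```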

TextonBoost for object recognition

The TextonBoost algorithm relies on boosting, like AdaBoost, but its features are built from textons, the small recurring micro-structures that make up image texture. This lets it combine information about an object's shape, context, and appearance, grouping visual data with common features to recognize objects.

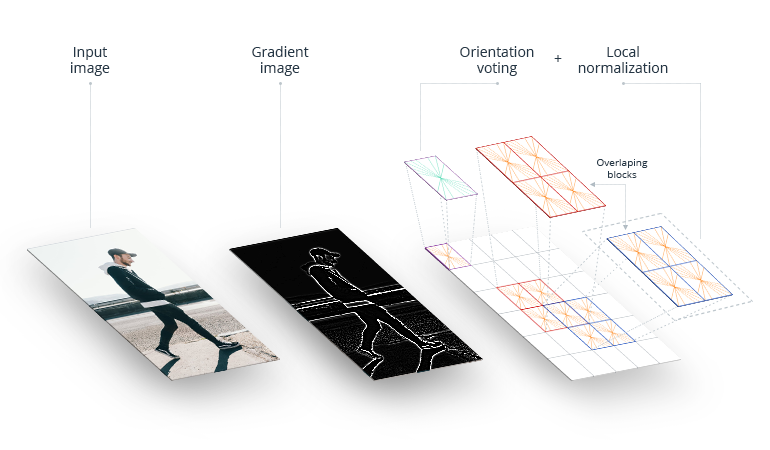
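Textons are typically found by clustering the responses of a bank of image filters, so that every pixel is assigned to its nearest cluster centre, or texton. The sketch below shows only that texton step, not the boosting on top of it, and uses three simple features (intensity and two gradients) as a stand-in for the richer filter bank a real pipeline would use. The test patch is hypothetical.

```python
import numpy as np


def filter_responses(img: np.ndarray) -> np.ndarray:
    """Per-pixel feature vector: intensity plus vertical and horizontal gradients.

    A simplified stand-in for a real filter bank. Returns shape (H * W, 3).
    """
    img = img.astype(float)
    gy, gx = np.gradient(img)
    return np.stack([img, gx, gy], axis=-1).reshape(-1, 3)


def texton_map(img: np.ndarray, k: int = 2, iters: int = 10, seed: int = 0) -> np.ndarray:
    """Cluster filter responses with k-means; each cluster centre is a texton.

    Returns an array with the same shape as `img` holding each pixel's texton id.
    """
    feats = filter_responses(img)
    rng = np.random.default_rng(seed)
    centers = feats[rng.choice(len(feats), size=k, replace=False)]
    for _ in range(iters):
        # Assign every pixel to its nearest texton.
        d = np.linalg.norm(feats[:, None, :] - centers[None, :, :], axis=2)
        labels = d.argmin(axis=1)
        # Move each texton to the mean response of its pixels.
        for c in range(k):
            if np.any(labels == c):
                centers[c] = feats[labels == c].mean(axis=0)
    return labels.reshape(img.shape)


# Hypothetical 8x8 patch: smooth surface on the left, stripes on the right.
patch = np.zeros((8, 8))
patch[:, 4::2] = 1.0
print(texton_map(patch, k=2))
```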

Histogram of oriented gradients (HOG)

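HOG divides an image into small regions called cells and, for each cell, builds a histogram of the directions in which the brightness changes (the intensity gradients), weighted by how strong each change is. These histograms capture an object's outline and shape, which makes HOG effective for detecting objects such as pedestrians.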

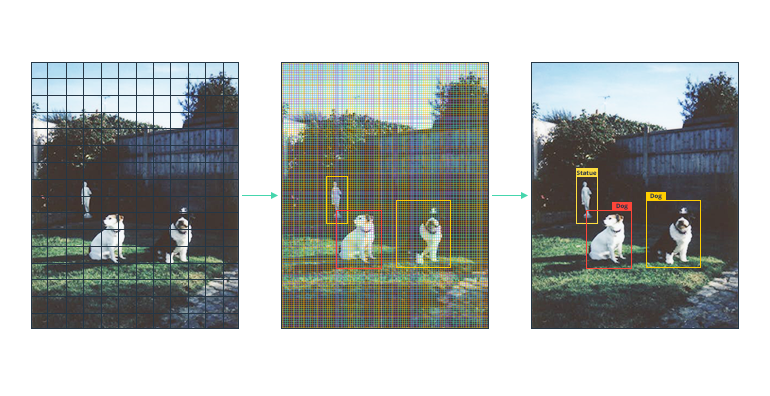
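The core of HOG fits in a few lines of NumPy. This sketch computes 9-bin histograms of unsigned gradient orientations (0 to 180 degrees) for 8x8-pixel cells, then normalizes each 2x2 block of cells. It simplifies the usual method by letting each pixel vote into a single bin without interpolation, and the test image is a hypothetical vertical edge.

```python
import numpy as np


def hog_cells(img: np.ndarray, cell: int = 8, bins: int = 9) -> np.ndarray:
    """Orientation histogram for every cell x cell patch of a grayscale image.

    Each pixel votes with its gradient magnitude into one orientation bin
    (no interpolation, a simplification). Returns shape (cell_rows, cell_cols, bins).
    """
    img = img.astype(float)
    gy, gx = np.gradient(img)
    magnitude = np.hypot(gx, gy)
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # unsigned orientation
    bin_idx = np.minimum((angle / (180.0 / bins)).astype(int), bins - 1)

    n_rows, n_cols = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((n_rows, n_cols, bins))
    for r in range(n_rows):
        for c in range(n_cols):
            sl = (slice(r * cell, (r + 1) * cell), slice(c * cell, (c + 1) * cell))
            hist[r, c] = np.bincount(bin_idx[sl].ravel(), weights=magnitude[sl].ravel(), minlength=bins)
    return hist


def hog_descriptor(hist: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """L2-normalize each 2x2 block of cells and concatenate into one vector.

    Requires at least 2x2 cells. Normalization makes the descriptor less
    sensitive to lighting and contrast changes.
    """
    blocks = []
    for r in range(hist.shape[0] - 1):
        for c in range(hist.shape[1] - 1):
            v = hist[r:r + 2, c:c + 2].ravel()
            blocks.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(blocks)


# Hypothetical 16x16 image with a vertical edge down the middle.
img = np.zeros((16, 16))
img[:, 8:] = 1.0
hist = hog_cells(img)
print(hist[..., 0])              # all gradient energy falls in the 0-degree bin
print(hog_descriptor(hist).shape)
```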

YOLO (You Only Look Once)

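YOLO is a real-time object detector that processes the whole image in a single pass of a neural network. It divides the image into a grid and, for each grid cell, predicts bounding boxes along with the probability that each box contains a particular class of object, such as a pedestrian, tree, or vehicle. Because it looks at the image only once, YOLO is fast enough to detect and classify objects many times per second, which is what a moving car needs.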
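Because several grid cells can predict boxes for the same object, YOLO-style detectors typically clean up their raw output with non-maximum suppression (NMS): keep the most confident box, discard boxes that overlap it heavily, and repeat. The sketch below shows NMS for a single object class; the boxes, scores, and the 0.5 overlap threshold are illustrative assumptions, not values from any particular YOLO version.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Keep the highest-scoring box, drop boxes that overlap it too much, repeat.

    Returns the indices of the kept boxes, most confident first.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Hypothetical raw detections for the class "car": boxes 0 and 1 cover the same car.
boxes = [(100, 100, 200, 180), (105, 98, 204, 182), (300, 120, 380, 190)]
scores = [0.90, 0.75, 0.80]
print(non_max_suppression(boxes, scores))
```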

Wrap-up

Machine learning algorithms are what make self-driving cars possible. They allow a car to interpret the data its cameras and other sensors collect about its surroundings and decide what actions to take. Machine learning can even allow cars to perform these tasks as well as, or better than, humans.

This leads to the reasonable conclusion that machine learning algorithms and autonomous vehicles are the future of transportation.

At Mindy Support, we agree. Over the years, we've built teams to annotate data for automotive AI solutions. If you're creating an automotive AI system, send us a message or click the Build Me a Team button.