Increasing Driver Safety With AI

Accidents and unsafe driving patterns are a constant concern on the road. In recent years, artificial intelligence has extended the capabilities of existing driver-assistance systems. By gathering real-time information about the state of the road, these systems can prompt drivers to perform the appropriate maneuver at the right moment, helping to prevent accidents, including those caused by driver fatigue. Beyond such assistance systems, autonomous vehicles also depend on fast, accurate recognition of traffic signs in camera imagery.

Researchers are developing AI-powered solutions to increase driver safety, both in advanced driver-assistance systems (ADAS) and in autonomous vehicles. In this article, we look at some of the ways AI is making driving safer and at the data annotation needed to build these systems.

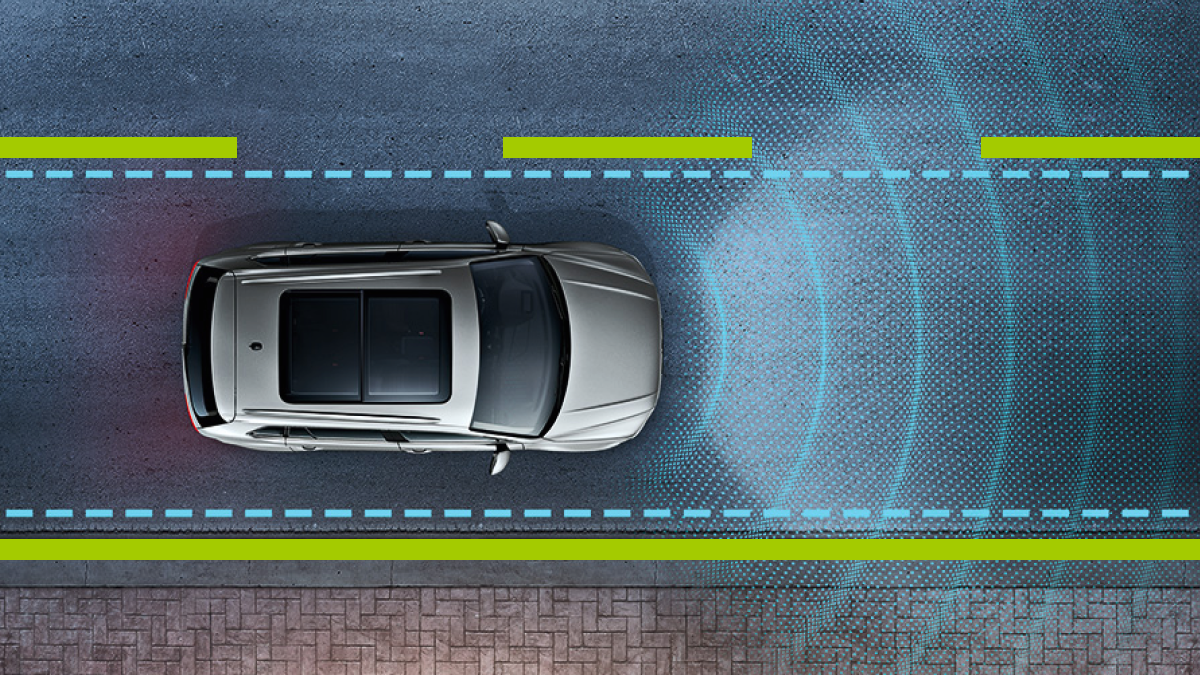

Lane Keep Assist

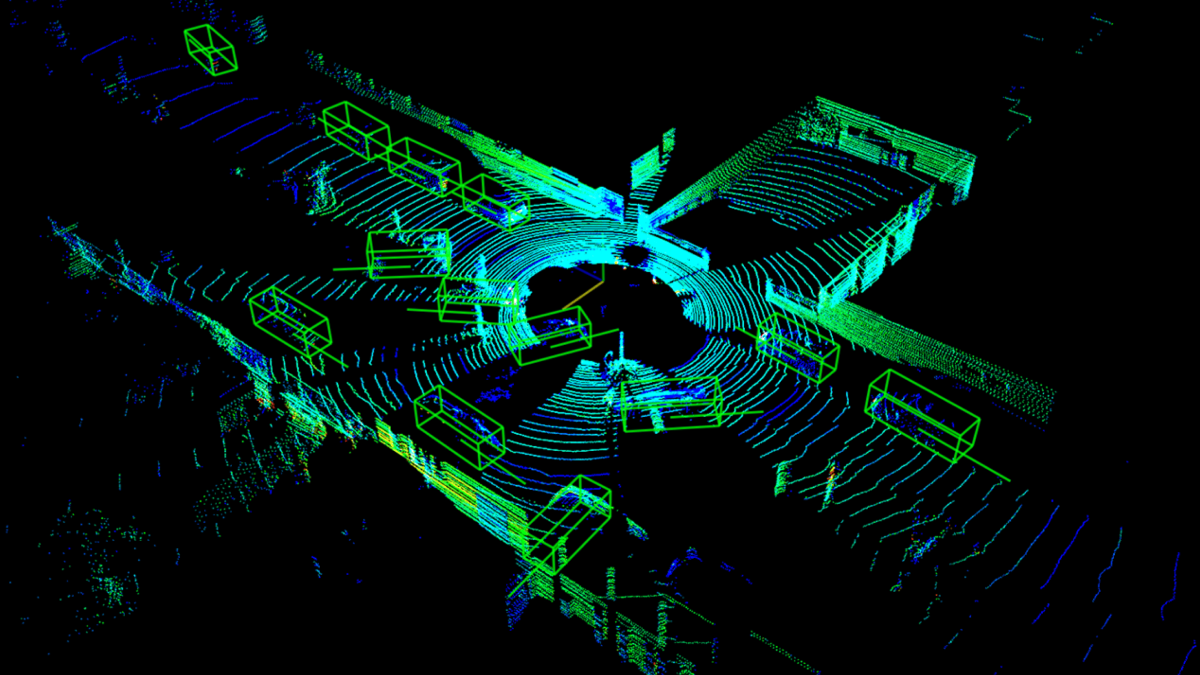

This is one of the most popular ADAS technologies: the AI system helps ensure that the driver does not unintentionally drift out of the lane. Traditional lane-keeping assistance systems use a camera or other sensors to locate the vehicle relative to the lane lines. If the car starts to drift, the system either warns the driver or applies steering correction and selective braking to gently nudge it back into its lane. In addition to cameras, some systems use LiDAR to better understand the vehicle's position on the road, since LiDAR can build a dynamic 3D map of the environment. The raw point cloud from the sensor is processed in two algorithmic steps: segmentation, which groups the points into clusters, and classification, which assigns each cluster to an object type.
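The warn-or-correct behavior described above can be sketched with time-to-line-crossing (TLC): how many seconds remain before the vehicle's edge reaches the lane line it is drifting toward. This is a minimal sketch, not how any particular product works. It assumes the camera pipeline already provides the lateral offset and lateral speed, and the lane width, vehicle width, thresholds, and steering gains are all illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARN = "warn"        # e.g. a chime or steering-wheel vibration
    CORRECT = "correct"  # apply a small corrective steering input


@dataclass
class LaneState:
    offset_m: float               # vehicle centre minus lane centre (+ = left of centre)
    lateral_speed_mps: float      # rate of change of offset_m (+ = drifting left)
    lane_width_m: float = 3.5     # assumed lane width
    vehicle_width_m: float = 1.8  # assumed vehicle width


def time_to_line_crossing(s: LaneState) -> float:
    """Seconds until the vehicle's edge reaches the lane line it is drifting toward."""
    free_half = (s.lane_width_m - s.vehicle_width_m) / 2  # edge-to-line gap when centred
    if s.lateral_speed_mps > 0:      # drifting left
        gap = free_half - s.offset_m
    elif s.lateral_speed_mps < 0:    # drifting right
        gap = free_half + s.offset_m
    else:
        return float("inf")          # no lateral motion, so no crossing is predicted
    return max(gap, 0.0) / abs(s.lateral_speed_mps)


def lane_keep_decision(s: LaneState,
                       warn_tlc_s: float = 1.0,
                       correct_tlc_s: float = 0.5,
                       k_offset: float = 0.1,
                       k_rate: float = 0.2) -> tuple[Action, float]:
    """Return the action and a steering command (+ = steer left, illustrative units)."""
    tlc = time_to_line_crossing(s)
    if tlc <= correct_tlc_s:
        # Proportional-derivative style correction that pushes back toward the centre.
        steer = -(k_offset * s.offset_m + k_rate * s.lateral_speed_mps)
        return Action.CORRECT, steer
    if tlc <= warn_tlc_s:
        return Action.WARN, 0.0
    return Action.NONE, 0.0
```

With these defaults, a car 0.5 m left of centre drifting left at 0.5 m/s has a TLC of about 0.7 s and only triggers a warning; at 0.7 m off centre the TLC drops to about 0.3 s and the sketch steers back to the right. Escalating from a warning to an intervention mirrors the two behaviors described above.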

Traffic Sign Recognition

Traffic sign recognition is one of the most significant vehicle-based intelligent systems for detecting and interpreting road signs. In ADAS, it relies on a vehicle-mounted camera: the system recognizes traffic signs in the camera images and then displays their content to the driver or alerts them. The recognition process consists of five steps: image acquisition, preprocessing, detection, classification, and decision output.

The first step is to capture images from the vehicle-mounted camera. The second step cleans and prepares these images for further analysis, with operations such as noise removal, image scaling, and distortion correction. In the third step, the system locates road signs in the preprocessed images using traffic sign detection algorithms. In the fourth step, it determines the exact class to which each detected sign belongs. Finally, the system makes a decision based on this analysis, which is either communicated to the driver or passed to another vehicle subsystem.
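The five stages can be illustrated with a deliberately tiny pipeline. This sketch makes strong simplifying assumptions. Images are plain lists of RGB tuples, and preprocessing only rescales the frame. Detection uses simple red-color thresholding. Classification is a toy rule based on how much of the detected box is red. The class names and driver messages are hypothetical. Real systems typically use trained detectors and classifiers for stages 3 and 4.

```python
from dataclasses import dataclass
from typing import Optional

Pixel = tuple[int, int, int]   # (R, G, B), 0-255
Image = list[list[Pixel]]      # rows of pixels


@dataclass
class Box:
    top: int
    left: int
    bottom: int
    right: int


def preprocess(img: Image, size: int = 16) -> Image:
    """Stage 2: rescale to a fixed size with nearest-neighbour sampling.
    Noise removal and distortion correction are omitted for brevity."""
    h, w = len(img), len(img[0])
    return [[img[r * h // size][c * w // size] for c in range(size)]
            for r in range(size)]


def is_red(p: Pixel) -> bool:
    r, g, b = p
    return r > 150 and g < 100 and b < 100  # assumed colour thresholds


def detect(img: Image) -> Optional[Box]:
    """Stage 3: colour-threshold detection; bounding box of all red pixels."""
    hits = [(r, c) for r, row in enumerate(img)
            for c, p in enumerate(row) if is_red(p)]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return Box(min(rows), min(cols), max(rows), max(cols))


def classify(img: Image, box: Box) -> str:
    """Stage 4: toy rule on the share of red pixels inside the box.
    A filled red shape scores near 1.0; a red ring with a light centre scores lower."""
    cells = [img[r][c] for r in range(box.top, box.bottom + 1)
             for c in range(box.left, box.right + 1)]
    fill = sum(is_red(p) for p in cells) / len(cells)
    return "solid_red_sign" if fill > 0.7 else "red_ring_sign"


# Stage 5: what the system tells the driver for each (hypothetical) class.
MESSAGES = {
    "solid_red_sign": "Warning: stop or no-entry type sign ahead",
    "red_ring_sign": "Notice: prohibition or limit sign ahead",
}


def recognize(frame: Image) -> str:
    """Run stages 2-5 on a frame delivered by stage 1 (image acquisition)."""
    img = preprocess(frame)
    box = detect(img)
    if box is None:
        return "No sign detected"
    return MESSAGES[classify(img, box)]
```

Each stage is a separate function, so a real detector or classifier could be swapped in without changing the rest of the pipeline.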

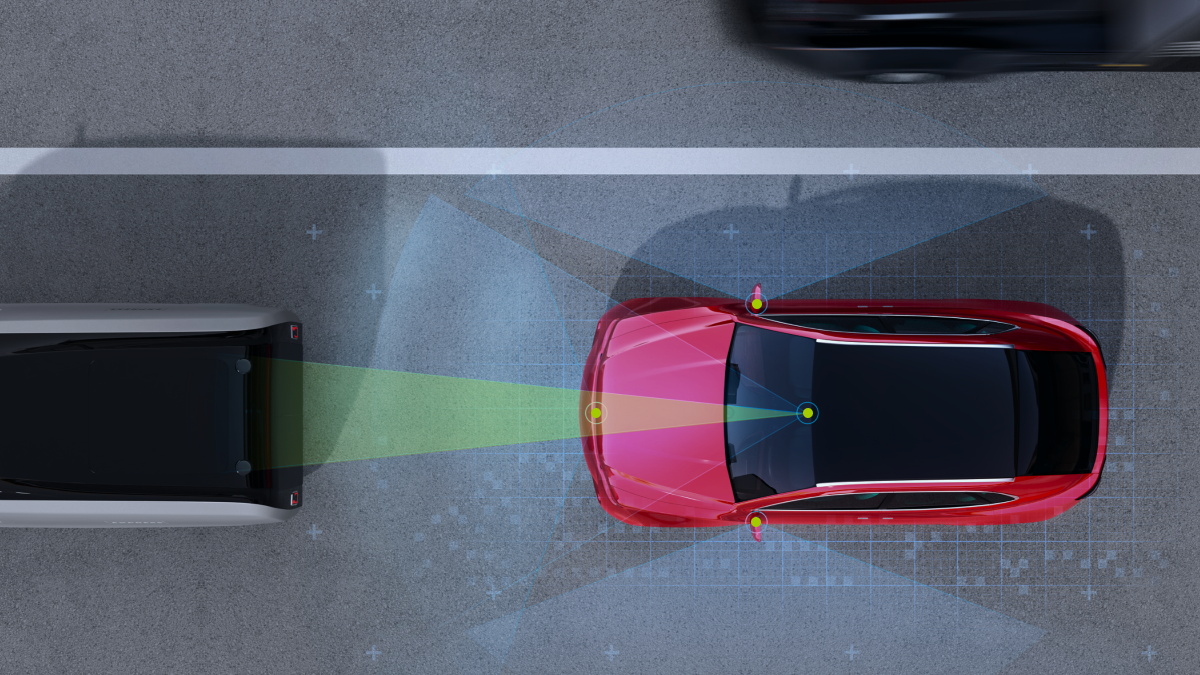

Collision Avoidance Systems

A collision avoidance system is a safety system that alerts, warns, or helps drivers avoid impending crashes and reduces the likelihood of accidents. Collision avoidance systems rely on a range of technologies and sensors, including radar, lasers, cameras, and artificial intelligence. Some only issue warnings, while others can override the driver to avoid a collision. Not all collision avoidance systems are built the same, but those that warn the driver may use visual, audible, or haptic (touch-based) alerts. Implementations also differ: some use AI-based computer vision, while others rely on dash cams that capture images and on GPS to pinpoint the vehicle's location.
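The difference between systems that only warn and systems that intervene can be sketched with time-to-collision (TTC), a widely used measure that divides the gap to the vehicle ahead by the closing speed. This is a minimal sketch assuming the gap and both speeds are already estimated from radar or camera data. The warning and braking thresholds are illustrative assumptions, not values from any standard or product.

```python
from enum import Enum


class Response(Enum):
    NONE = 0
    WARN = 1   # visual, audible, or haptic alert
    BRAKE = 2  # automatic braking intervention


def time_to_collision(gap_m: float, ego_speed_mps: float, lead_speed_mps: float) -> float:
    """Seconds until contact if both vehicles hold their current speeds."""
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0:
        return float("inf")  # the gap is constant or growing
    return gap_m / closing_speed


def collision_response(gap_m: float, ego_speed_mps: float, lead_speed_mps: float,
                       warn_ttc_s: float = 2.5, brake_ttc_s: float = 1.2) -> Response:
    """Escalate from a warning to braking as time-to-collision shrinks."""
    ttc = time_to_collision(gap_m, ego_speed_mps, lead_speed_mps)
    if ttc <= brake_ttc_s:
        return Response.BRAKE
    if ttc <= warn_ttc_s:
        return Response.WARN
    return Response.NONE
```

For example, a car travelling at 20 m/s that is 20 m behind a vehicle moving at 10 m/s has a TTC of 2 s, which this sketch classifies as `WARN`. A warning-only system would simply never return `BRAKE`.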

What Types of Data Annotation are Required to Create These Technologies?

LiDAR, which produces 3D point clouds, is one of the most widely used sensors in ADAS development. These point clouds need to be annotated with techniques such as polylines, which allow the AI system not only to identify the various road markings but also to distinguish between them. Semantic segmentation is also necessary. In this computer vision task, every pixel in an image is assigned to a class, producing a dense, pixel-wise segmentation map.
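For LiDAR data, dense labeling means giving every point in the cloud a class, and polyline annotations of lane markings are one possible source of those labels. The sketch below assigns each point the label of the nearest annotated polyline within a tolerance, measured on the ground plane. This is a simplified illustration under stated assumptions: polylines are 2D (x, y) in the same frame as the points, the 15 cm tolerance is arbitrary, and the label names are hypothetical. Annotation tools implement this in their own ways.

```python
import math
from dataclasses import dataclass

Point3D = tuple[float, float, float]  # (x, y, z) in metres, sensor frame


@dataclass
class Polyline:
    label: str                            # hypothetical class, e.g. "solid_lane_line"
    vertices: list[tuple[float, float]]   # (x, y) vertices on the ground plane


def distance_to_segment(p: tuple[float, float], a: tuple[float, float],
                        b: tuple[float, float]) -> float:
    """Shortest 2D distance from point p to segment ab."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        t = 0.0  # degenerate segment: a single point
    else:
        # Project p onto the segment and clamp to its endpoints.
        t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def label_points(points: list[Point3D], polylines: list[Polyline],
                 max_dist_m: float = 0.15) -> list[str]:
    """Give every point the label of the nearest polyline within max_dist_m."""
    labels = []
    for x, y, _z in points:  # height is ignored: matching is done in bird's-eye view
        best_label, best_dist = "background", max_dist_m
        for line in polylines:
            for a, b in zip(line.vertices, line.vertices[1:]):
                d = distance_to_segment((x, y), a, b)
                if d <= best_dist:
                    best_label, best_dist = line.label, d
        labels.append(best_label)
    return labels
```

Because solid and dashed markings carry different labels, the resulting per-point map keeps the distinction between marking types that the polyline annotation encodes.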

Data from computer vision cameras is also used to train AI systems, and it requires its own annotation methods. In image classification, each image is given a label describing what it shows, so that a model trained on the labeled examples can identify similar objects in new, unlabeled images. Object detection (also called object recognition) is also necessary: it aims to reliably determine the presence, location, and number of one or more objects in an image, and it can also be used to pinpoint a specific object. By repeating this annotation over many varied images, you can teach a machine learning model to recognize objects in unlabeled photographs on its own.
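The difference between the two annotation types shows up clearly in the data itself. Below is a minimal sketch of one annotated camera frame: a single image-level label for classification, plus one bounding box per object for detection. The boxes loosely follow the `[x, y, width, height]` convention of the COCO dataset format. The field names, labels, and file name are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BoundingBox:
    label: str
    x: float       # left edge in pixels
    y: float       # top edge in pixels
    width: float
    height: float

    @property
    def center(self) -> tuple[float, float]:
        return (self.x + self.width / 2, self.y + self.height / 2)


@dataclass
class AnnotatedImage:
    file_name: str
    image_label: str  # image classification: one label for the whole frame
    boxes: list[BoundingBox] = field(default_factory=list)  # detection: one box per object


def objects_by_class(image: AnnotatedImage) -> dict[str, list[tuple[float, float]]]:
    """Presence (key exists), number (list length) and location (box centres) per class."""
    found: dict[str, list[tuple[float, float]]] = {}
    for box in image.boxes:
        found.setdefault(box.label, []).append(box.center)
    return found
```

For a frame labeled `urban_street` with boxes for two cars and one pedestrian, `objects_by_class` returns two car centres and one pedestrian centre. These are exactly the presence, number, and location signals a detection model learns to predict.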

Trust Mindy Support With All of Your Data Annotation Needs

Mindy Support is a global provider of data annotation services trusted by Fortune 500 and GAFAM companies. With more than ten years of experience and offices and representatives in Cyprus, Poland, Romania, the Netherlands, India, the UAE, and Ukraine, Mindy Support's team of 2,000+ professionals helps companies with their most advanced data annotation challenges.