Help Your Employees Relieve Stress With These AI Pets

With the COVID pandemic lingering on, people are looking for new ways of coping with self-isolation and the loneliness it causes. Fortunately, many companies have leveraged AI to create pets that help us relieve a lot of pent-up stress. Let’s take a closer look at these AI animals to see why they are becoming more and more popular and what data annotation was required to create them.

What are Some of the AI-Powered Pets Out There?

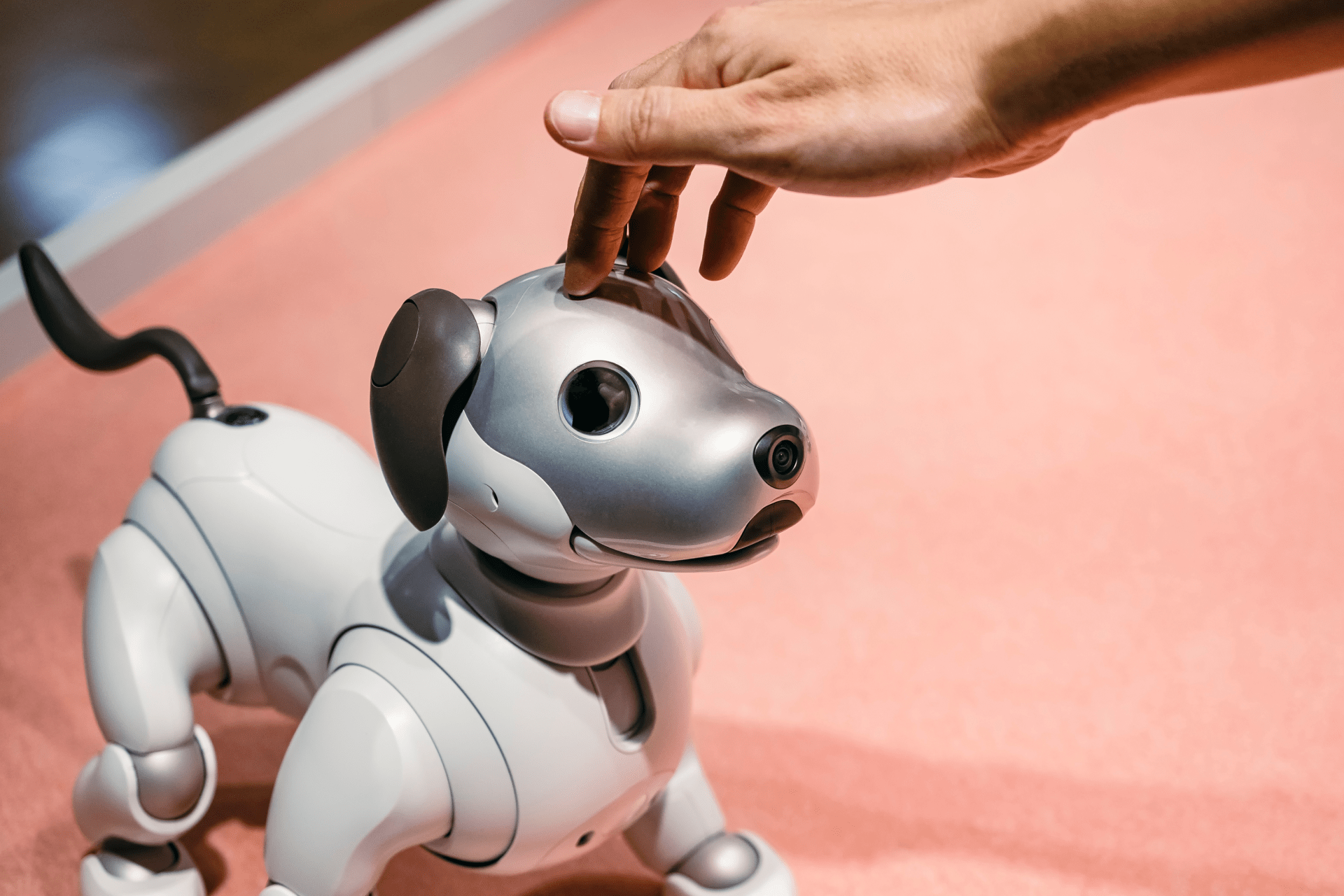

Let’s start with Aibo, an AI-powered robotic dog that can shape its personality to the whims and habits of its owner, building the kind of intense emotional attachment usually associated with kids or beloved pets. It has a camera-embedded snout and sensor-packed paws to navigate and interact with the physical world and “read” the emotions and habits of its owner.

Another interesting “pet” is Qoobo, a therapeutic robot in the form of a cushion with a tail. It leverages the most pleasing parts of a pet: a fluffy torso and a wagging tail. When caressed, it waves gently. When rubbed, it swings playfully. And it occasionally wags just to say hello. It’s comforting communication that warms your heart the way animals do. Qoobo had sold more than 30,000 units by September 2021, many of them to stressed-out users working from home under COVID restrictions.

Finally, there is the Lovot robot, which is an emotional robot programmed to autonomously navigate its surroundings, remember its owners, and respond to hugs and other affection, gazing out with its oversized, quivering, high-resolution eyes. It may be worthwhile for schools and parents to look into this robot to help take some of the stress off their children.

How are Such AI Pets Created?

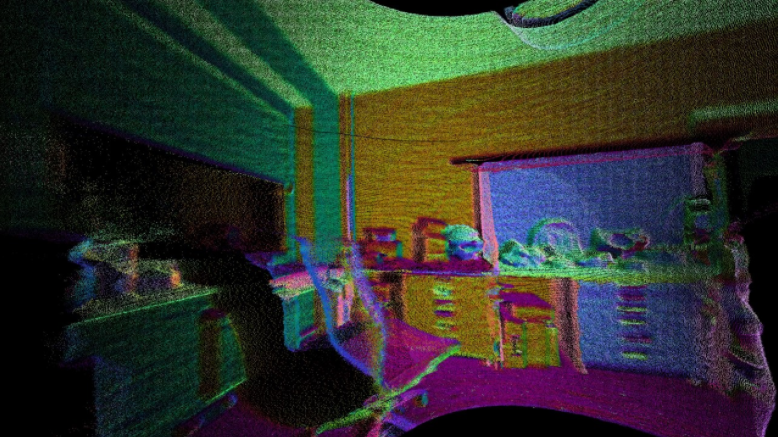

Many different types of data annotation are required to create the products we talked about in the previous section. For example, since the Lovot robot is capable of navigating its surroundings, 3D Point Cloud annotation was required to train the machine learning algorithms to detect the various items it could encounter. To better understand 3D Point Cloud annotation, let’s explore the example image in this section.

As we can see, the points in the image are color-coded by their distance from the LiDAR sensor. Closer objects are colored violet or blue, the short-wavelength end of the spectrum, while objects farther away are green, orange, and so on. All of the objects in the 3D point cloud need to be annotated with labels, semantic segmentation, and 2D/3D boxes so the system can recognize them.
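To make the color-coding idea concrete, here is a minimal Python sketch of how a point's distance from the sensor could be mapped to a color. The function name `distance_to_rgb`, the sensor position, and the 50-meter `max_range` are assumptions chosen for illustration. They are not taken from any particular LiDAR viewer or annotation tool.

```python
import colorsys
import math


def distance_to_rgb(point, sensor=(0.0, 0.0, 0.0), max_range=50.0):
    """Map a 3D point to an RGB color based on its distance from the sensor.

    Hypothetical convention for this sketch: points at the sensor are violet,
    points at or beyond max_range are red, with blue, green, and orange between.
    """
    distance = math.dist(point, sensor)    # Euclidean distance in meters
    t = min(distance / max_range, 1.0)     # normalize to 0..1, clamp far points
    hue = 0.75 * (1.0 - t)                 # 0.75 is violet, 0.0 is red
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)


# A tiny made-up point cloud: one near point, one mid-range, one far.
cloud = [(1.0, 0.5, 0.0), (20.0, 5.0, 1.0), (48.0, 10.0, 2.0)]
for p in cloud:
    print(p, distance_to_rgb(p))
```

Real tools often let the annotator switch between several color schemes (distance, height, intensity), so treat this mapping as one possible choice.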

Another “pet” we talked about, Aibo, also needs to recognize the habits and emotions of its owner in addition to navigating its surroundings. This means that it needs to detect facial expressions, hand gestures, and similar cues. Landmark annotation would therefore be required so the robot can detect eyebrows, nose, eyes, and other facial features. Video annotation, and event tracking in particular, would be necessary for it to understand hand gestures.
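As a rough illustration of what a landmark annotation might look like as data, the sketch below stores named facial points in pixel coordinates and converts them to resolution-independent values. The `Landmark` class, the `normalize` helper, and the landmark names are hypothetical. They do not reflect the format used by Aibo or by any specific annotation platform.

```python
from dataclasses import dataclass


@dataclass
class Landmark:
    name: str   # facial feature label, e.g. "left_eye" (hypothetical naming)
    x: float    # pixel column in the source image
    y: float    # pixel row in the source image


def normalize(landmarks, width, height):
    """Scale pixel coordinates into the 0..1 range and reject out-of-frame points."""
    normalized = {}
    for lm in landmarks:
        if not (0 <= lm.x < width and 0 <= lm.y < height):
            raise ValueError(f"{lm.name} lies outside the {width}x{height} image")
        normalized[lm.name] = (lm.x / width, lm.y / height)
    return normalized


# Made-up annotation for a single 640x480 frame.
face = [
    Landmark("left_eye", 250, 200),
    Landmark("right_eye", 390, 200),
    Landmark("nose", 320, 240),
]
print(normalize(face, width=640, height=480))
```

Checking that every point lies inside the frame is a simple quality gate an annotation pipeline could run before the labels are used for training.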

Trust Mindy Support With All of Your Data Annotation Needs

If you are creating an AI product that requires large volumes of data annotation, consider outsourcing such work to Mindy Support. We are one of the leading European vendors for data annotation and business process outsourcing, trusted by several Fortune 500 and GAFAM companies, as well as innovative startups. With 9 years of experience under our belt and 10 locations in Cyprus, Poland, Ukraine, and other geographies globally, Mindy Support’s team now stands strong with 2000+ professionals helping companies with their most advanced data annotation challenges. Contact us to learn more about what we can do for you.