Computer Vision vs LiDAR vs Radar: What’s the Difference?

Autonomous vehicles and AI-powered robots rely on such major technologies to navigate their surroundings: computer vision, LiDAR and radar. While computer vision, LiDAR and radar may be used to accomplish the same purpose, they are different technologies offering their own benefits. In this article, we will take a look at the difference between computer vision, LiDAR, radar and the data annotation that needs to be done to train AI systems.

What is Computer Vision?

Computer vision is a field of artificial intelligence (AI) that enables computers and systems to derive meaningful information from digital images, videos, and other visual inputs — and take actions or make recommendations based on that information. If AI enables computers to think, computer vision enables them to see, observe and understand. Basically, computer vision works the same way as human vision, except that our vision has been trained over the course of our lives on how to tell objects apart, how far away they are, whether they are moving, and whether there is something wrong in an image.

Computer vision does not have this advantage, which is why it requires a lot of annotated training datasets to understand the physical world. It runs analyses of data over and over until it discerns distinctions and ultimately recognizes images. For example, we recently helped one of our clients train a computer vision system to recognize car damage, and we needed to annotate more than 36,000 images to train their computer vision system. You can read more about our work in our case study.

Computer vision technology is a rapidly growing field and is actively being used in the automotive, agricultural, construction, security, and other industries.

What is LiDAR?

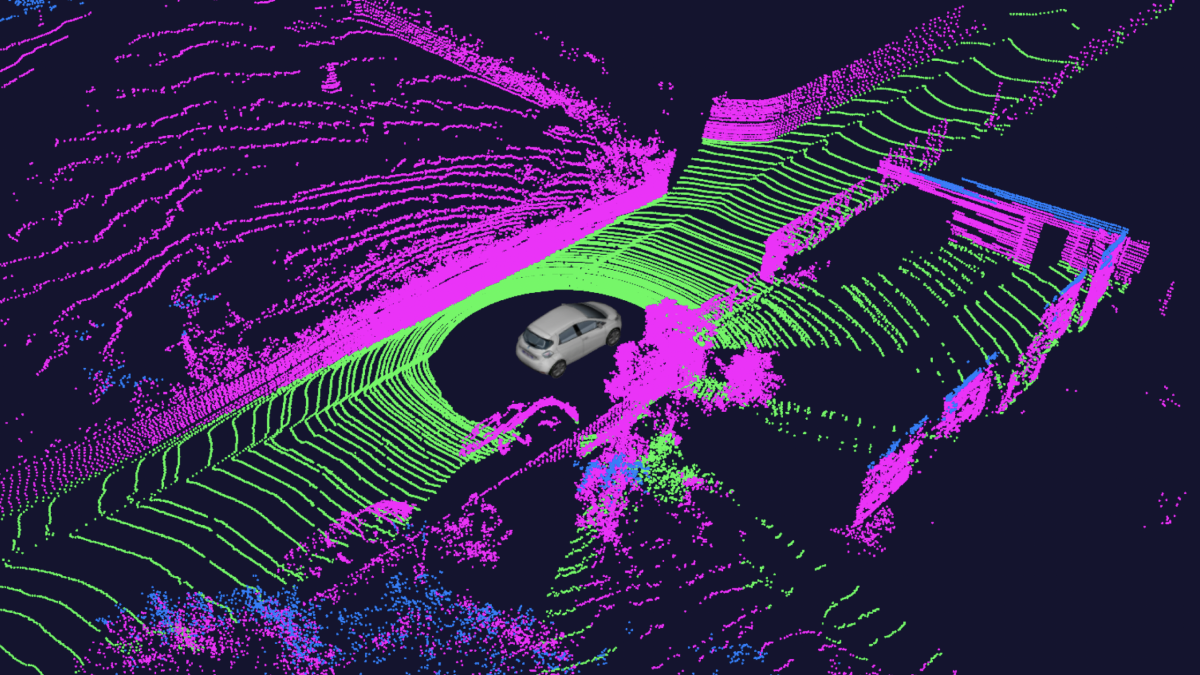

LiDAR, which stands for Light Detection and Ranging, is a remote sensing method that uses light in the form of a pulsed laser to measure ranges (variable distances) between it and other objects. These pulses bounce off surrounding objects and return to the sensor, which then uses the time it took for each pulse to return to the sensor to calculate the distance it traveled. Repeating this process millions of times per second creates a precise, real-time 3D map of the environment. This map is called a 3D point cloud. An onboard computer can utilize the lidar point cloud for safe navigation.

A Point Cloud is a 3D visualization made up of thousands or even millions of georeferenced points. Point clouds provide high-resolution data without the distortion sometimes present in 3D mesh models and are commonly used in industry-standard software. LiDARs and 3D Point Clouds are actively used in the automotive, agricultural, architecture, digital design, and many others.

What is Radar?

Radar is a system that uses radio waves to determine and map the location, direction, and/or speed of both moving and fixed objects such as cars, aircraft, ships and many other things. A transmitter emits radio waves, which are reflected by the target and detected by a receiver, typically in the same location as the transmitter. Although the radio signal returned is usually very weak, radio signals can easily be amplified, so radar can detect objects at ranges where other emissions, such as sound or visible light, would be too weak to detect.

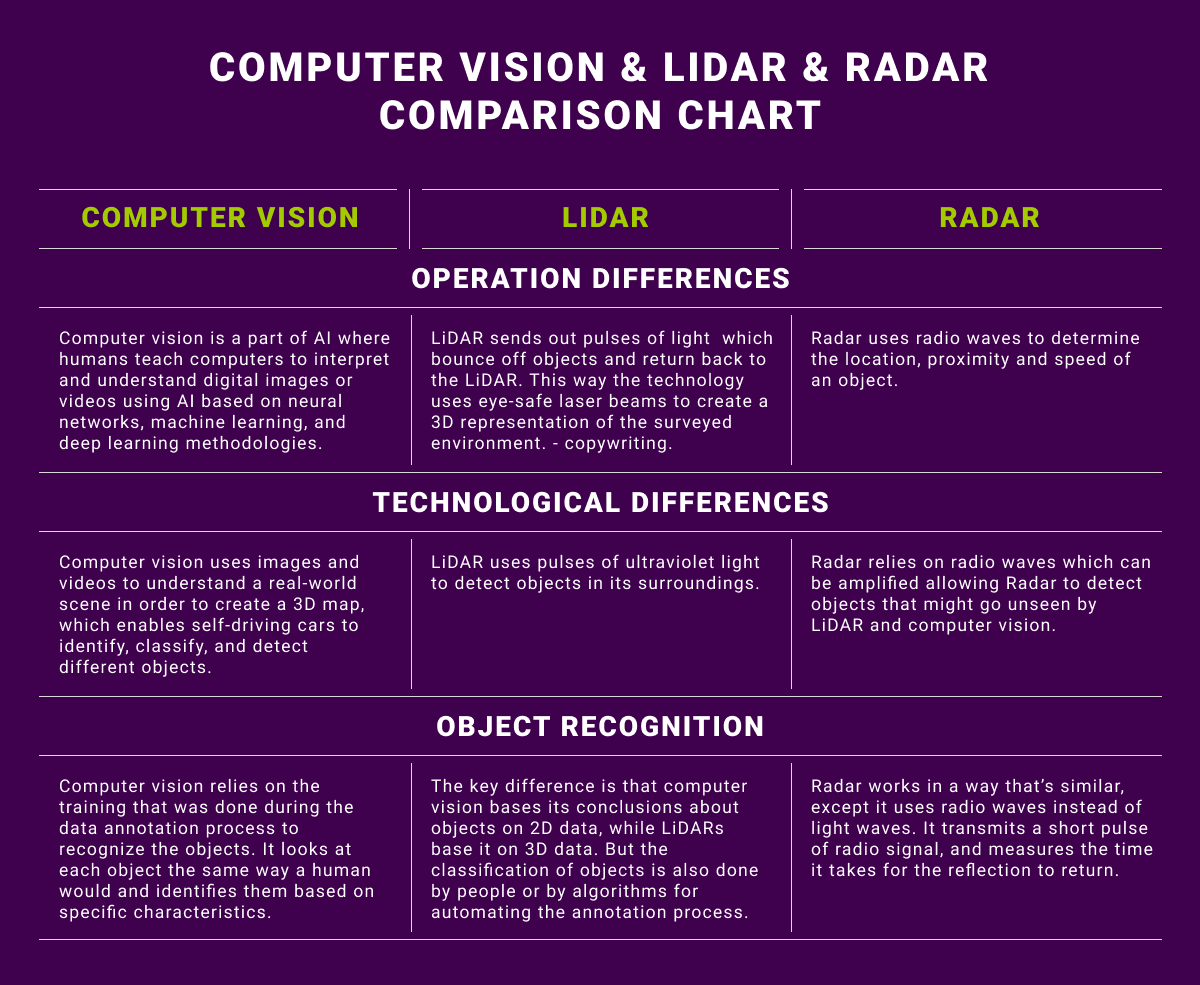

What’s the Difference Between Computer Vision, LiDAR and Radar?

The choice of using LiDAR computer vision or Radar will depend on the requirements and specifications of each project. The differences listed below should offer a high-level understanding of the differences:

Operation differences

Computer vision is a part of AI where humans teach computers to interpret and understand digital images or videos using AI based on neural networks, machine learning, and deep learning methodologies. LiDAR sends out pulses of light to sample the surface of the earth to produce highly accurate measurements. Radar uses radio waves to determine the location, proximity and speed of an object.

Technological differences

Computer vision uses images and videos to understand a real-world scene in order to create a 3D map, which enables self-driving cars to identify, classify, and detect different objects. LiDAR uses pulses of ultraviolet light to detect objects in its surroundings. Radar relies on radio waves which can be amplified allowing Radar to detect objects that might go unseen by LiDAR and computer vision.

Object recognition

LiDAR uses laser light that sends out millions of pulses per second, going and hitting objects and reflecting back, providing excellent range information. So, distance measurement and depth perception are better than what cameras can do. Radar works in a way that’s similar, except it uses radio waves instead of light waves. It transmits a short pulse of radio signal, and measures the time it takes for the reflection to return. Computer vision relies on the training that was done during the data annotation process to recognize the objects. It looks at each object the same way a human would and identifies them based on specific characteristics.

What Types of Data Annotation are Required for LiDAR and Computer Vision Powered AI?

The main technique used for LiDAR annotation is 3D bounding boxes (cuboids), which allows the system to better understand the location of any object in 3D space, its direction of movement (if they are dynamic objects), measuring dimensions, etc. In addition to this, as mentioned earlier, LiDAR produces a 3D Point Cloud that needs to be annotated with methods like polyline annotation, which allows autonomous vehicles to identify road markings and stay in their lanes. Semantic segmentation is also necessary, which involves classifying individual points of a 3D point cloud into pre-specified categories. This can be a challenging task because of high redundancy, uneven sampling density, and lack of explicit structure of point cloud data. The segmentation of point clouds into foreground and background is a fundamental step in processing 3D point clouds.

Data annotation for computer vision may range from simple techniques like 2D bounding boxes for object detection to more advanced methods like instance segmentation. This is where models learn to detect objects, identify each object’s location in the frame, and estimate the exact pixels of each object. These models can be useful if you need more precise pixel estimates for object interactions and higher accuracy.

It is also worth pointing out that modern data acquisition technologies combine 2D data collection (cameras) and LiDARS or Radars(sensors). These data sources are synchronized and during object detection, objects in 2D data are linked with 3D data. This means that the same object is marked on both 2D and 3D data, thus combining Computer vision technology with LiDAR/Radar.

Trust Mindy Support With All of Your Data Annotation Needs

Mindy Support is a global provider of data annotation services and is trusted by Fortune 500 and GAFAM companies. With more than 10 years of experience under our belt and offices and representatives in Cyprus, Poland, Romania, Bulgaria,, India, OAE, and Ukraine, Mindy Support’s team now stands strong with 2000+ professionals helping companies with their most advanced data annotation challenges.