Voices That Feel: Mindy Support’s Global Collection of 75,000 Emotion-Labeled Clips Across 13 Languages

Company Bio

-

Location: United States

-

Industry: Artificial Intelligence & Voice Technology (Enterprise, Consumer Electronics, Automotive)

-

Company Size: 500–1,000 employees

Client Profile

Our client is a U.S.-based deep tech company pioneering AI-driven voice technologies for enterprise, consumer electronics, and automotive industries. Their platform powers interactive voice agents, embedded systems, and multimodal interfaces used by millions globally.

Their vision: build machines that communicate not just intelligently, but intuitively – systems that can modulate responses based on the speaker’s emotional context.

Services Provided

Data Collection, Emotion Annotation & Sentiment Structuring, Quality Control

Project Overview

Our client is a U.S.-based deep tech company pioneering AI-driven voice technologies for enterprise, consumer electronics, and automotive industries. Their platform powers interactive voice agents, embedded systems, and multimodal interfaces used by millions globally.

Their vision: build machines that communicate not just intelligently, but intuitively – systems that can modulate responses based on the speaker’s emotional context.

Business Problem

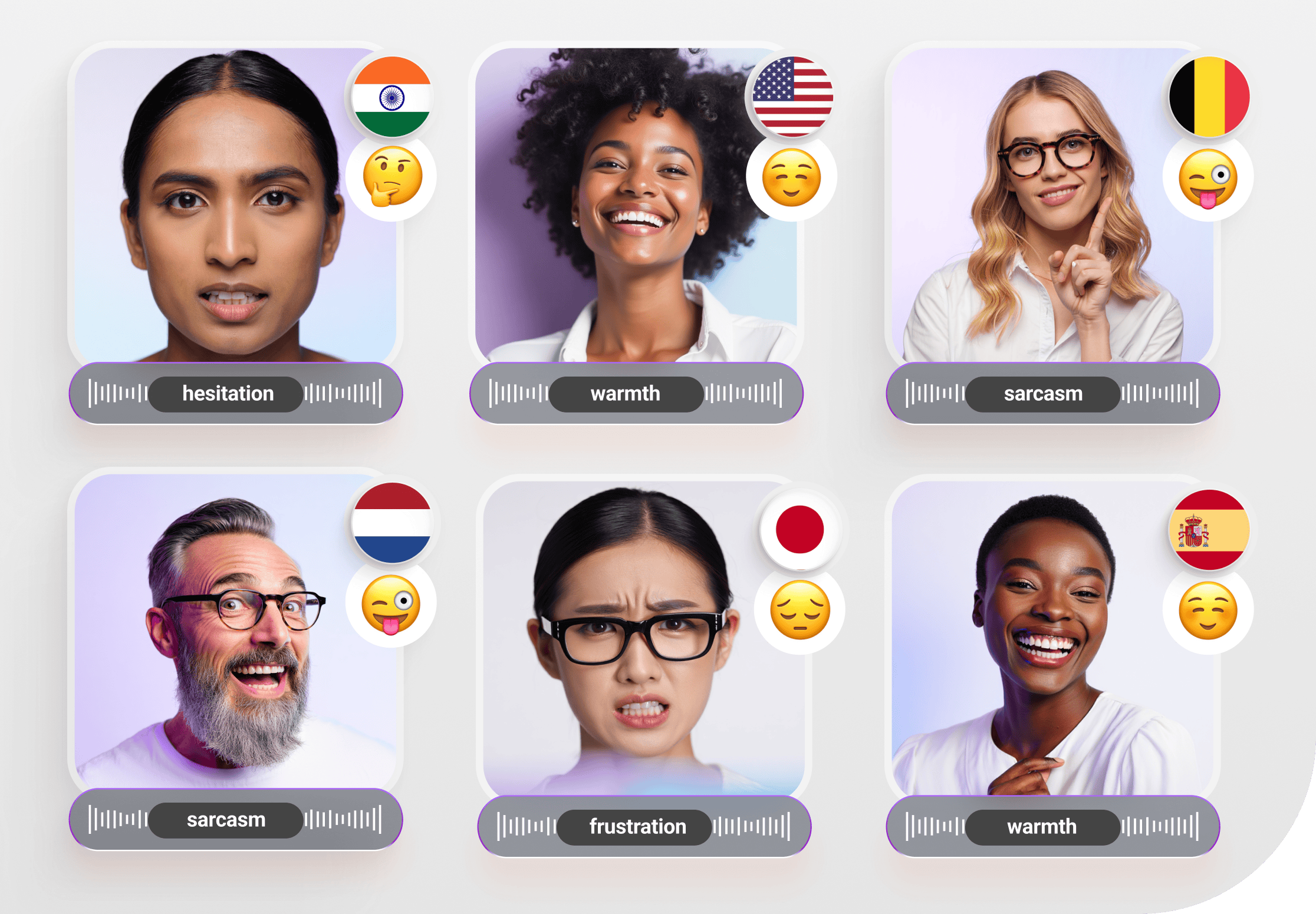

Off-the-shelf datasets didn’t cut it. Their in-house data was too clean, too flat, and emotionally narrow. For a model designed to understand subtle vocal dynamics – like hesitation, sarcasm, frustration, or warmth – they needed:

- Emotionally expressive, real-world voice samples

- Multilingual coverage (12+ languages)

- Demographically diverse speakers to model acoustic variance

- Labeling beyond basic emotion: intensity, tone shift, and context

- Full compliance with data ethics, privacy laws, and security protocols

And they needed it fast – without compromising data integrity or model performance.

Why Mindy Support

We were chosen because we specialize in building high-impact datasets for complex AI tasks. For this project, the client needed scale, specificity, and structure – and that’s exactly what we delivered.

They valued our:

- Global voice data infrastructure, with speaker sourcing and verification in over 30 countries

- Experience in acoustic emotion annotation using both subjective human judgment and objective acoustic markers

- Robust data governance framework (GDPR, CCPA, SOC 2 workflows)

- Agile delivery process with real-time feedback loops to evolve labeling schemas in sync with model needs

Services Delivered to the Client

We didn’t just build a dataset – we engineered an entire emotional intelligence pipeline from the ground up.

It began with Participant Sourcing & Prompt Engineering. Our team tapped into a global network, recruiting over 5,000 verified speakers from more than 12 countries. We crafted emotionally rich scenarios — moments of frustration, joy, interruptions, and requests – mirroring the unpredictability of real-world conversations. Every demographic detail was balanced: gender, age, accent, and even the type of device or environment.

Next came Audio Collection & Processing. Recordings flowed in from every context imaginable – mobile phones on noisy streets, desktop calls, in-car voice commands, and quiet smart home interactions. Using internal QA scripts, we controlled for signal-to-noise ratio, background interference, and microphone type. The prompts encouraged spontaneous, natural speech, making every clip feel authentic.

Then we moved to Emotion Annotation & Sentiment Structuring. Every audio snippet was meticulously tagged – from primary and secondary emotions to intensity levels (1–5) and sentiment polarity. Our trained linguists and affective computing specialists validated each annotation, all aligned to ISO/TR 13066 standards.

Finally, Quality Control & Dataset Packaging. We ran multi-pass QA checks for phonetic precision, acoustic clarity, and annotation consistency. The final delivery came in JSON, CSV, and FLAC formats – complete with emotion tags, anonymized speaker profiles, recording conditions, and timestamps – ready for direct integration into the client’s machine learning pipeline.

What we created wasn’t just data – it was a living, breathing reflection of human emotion, designed for machines to understand.

Key Results

1/ Dataset Volume:

75,000+ audio clips | 1.2 TB processed data

2/ Language Coverage:

13 languages/dialects — including English, Spanish, German, Japanese, Hindi, and Arabic

3/ Annotation Precision:

98.7% inter-rater agreement (Cohen’s κ = 0.84+)

4/ Integration Speed:

Automated data formatting and ingestion pipeline cut model training prep time by 60%

5/ Model Performance:

Client’s emotion recognition model improved precision by 32% in A/B tests

Also reduced false-positive response triggers by 27%

GET A QUOTE FOR YOUR PROJECT

We have a minimum threshold for starting any new project, which is 735 productive man-hours a month (equivalent to 5 graphic annotators working on the task monthly).