Annotating Keypoints on Human Hands in 2D Images and 3D Projections

Client Profile

Industry: IT

Location: USA

Size: 11-50

Company Bio

The client is defining a new era of personalized interaction with smart, virtual and augmented-reality interfaces. Their team brings research and innovation to market, partnering with some of the largest OEMs and industry leaders to commercialize new interaction technologies as one of the top software providers in the field.

Why is There a Need for AI Systems to Recognize Hand Gestures?

As AI technology develops, the way we interact with it will evolve too, because AI systems will be able to understand more forms of communication. Today, for example, voice assistants such as Siri and Alexa understand human speech, and commands can also be entered manually. But what if you could tell Alexa to stop playing music simply by raising your hand? And what if the smart devices of the future had only a virtual interface?

Our client was working on a solution that would let OEMs and industry leaders build products offering this kind of functionality.

Overview

The client offers platform-agnostic hand tracking and gesture recognition solutions built on computer vision, machine learning and advanced cognitive science. They needed data annotation services to produce the training data that enables hand interaction with virtual content, smart devices and digital interfaces.

Business Challenge

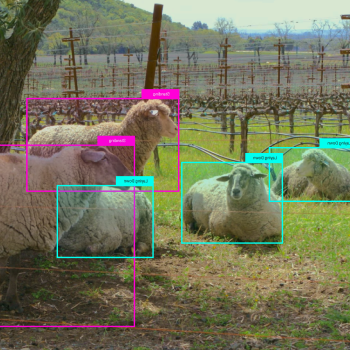

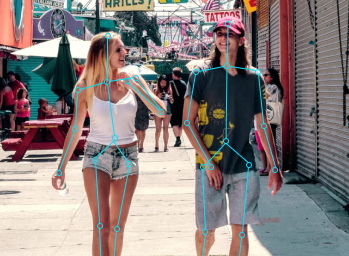

Because the client uses computer vision to let AI systems recognize human hand gestures, they needed keypoints annotated on a large number of hand poses in 2D images and 3D projections. These annotations would serve as training data for machine learning algorithms. The project was difficult because the hands appeared in many different poses and were sometimes partially covered by arms or sleeves. In some poses it was very hard to tell where the keypoints should go, and placing them correctly required an accurate three-dimensional understanding of the hand.
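Hands in varied poses that are partly hidden by arms or sleeves are commonly handled by storing a visibility flag alongside each keypoint's coordinates. The sketch below is a hypothetical annotation record, not the client's actual format: the 21-point hand layout (wrist plus four joints per finger), the COCO-style visibility values and the names `HandAnnotation`, `Keypoint2D` and `validate` are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from enum import IntEnum

# Hypothetical layout: 21 keypoints per hand (wrist + 4 joints per finger),
# a convention used by several public hand-pose datasets. The client's
# actual schema is not described in this case study.
FINGERS = ("thumb", "index", "middle", "ring", "pinky")
KEYPOINT_NAMES = ["wrist"] + [
    f"{finger}_{joint}" for finger in FINGERS for joint in (1, 2, 3, 4)
]


class Visibility(IntEnum):
    """COCO-style visibility flag (an assumption, not the client's spec)."""
    NOT_LABELED = 0  # point could not be placed at all
    OCCLUDED = 1     # position inferred, e.g. hidden by a sleeve
    VISIBLE = 2      # directly visible in the image


@dataclass
class Keypoint2D:
    name: str
    x: float
    y: float
    visibility: Visibility


@dataclass
class HandAnnotation:
    image_id: str
    handedness: str  # expected to be "left" or "right"
    keypoints: list[Keypoint2D] = field(default_factory=list)

    def validate(self, width: int, height: int) -> list[str]:
        """Return human-readable problems; an empty list means the record passes."""
        problems = []
        if self.handedness not in ("left", "right"):
            problems.append(f"bad handedness: {self.handedness!r}")
        if [kp.name for kp in self.keypoints] != KEYPOINT_NAMES:
            problems.append("keypoints missing, extra or out of order")
        for kp in self.keypoints:
            if kp.visibility == Visibility.NOT_LABELED:
                continue  # coordinates are ignored for unlabeled points
            if not (0 <= kp.x < width and 0 <= kp.y < height):
                problems.append(f"{kp.name} lies outside the image")
        return problems


# Usage: a right hand whose thumb tip is hidden by a sleeve.
hand = HandAnnotation("img_0001", "right", [
    Keypoint2D(name, 100.0 + i, 200.0, Visibility.VISIBLE)
    for i, name in enumerate(KEYPOINT_NAMES)
])
hand.keypoints[4].visibility = Visibility.OCCLUDED  # index 4 is "thumb_4"
print(hand.validate(width=640, height=480))  # []
```

Marking an occluded point as `OCCLUDED` rather than dropping it keeps its inferred position available for training, while letting a model treat it differently from a directly visible point.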

Solutions Provided by Mindy Support

Mindy Support assembled a team of annotators and gave them specialized training to make sure they understood the keypoints on the hand and how each one needed to be annotated.

The project consisted of two stages. First, we annotated hand keypoints in 2D images. Then we annotated hand keypoints on a 3D model, aligning every point in parallel across all images in the series and the 3D model. Once the work was completed, an additional stage checked the quality of the annotations.
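The second stage depends on every keypoint of the 3D model landing on the same keypoint in each 2D image of the series. The case study does not say how this alignment was verified; one standard approach, sketched here as an assumption, is to project each 3D keypoint into an image with a pinhole camera model and measure the pixel distance (the reprojection error) to the annotated 2D point. The camera parameters, the 5-pixel threshold and the function names `project_point` and `flag_misaligned` are hypothetical.

```python
import math

# Hypothetical QA check: project a hand's 3D keypoints into one image of the
# series with a pinhole camera model and compare them with the 2D annotations.
# Camera parameters and the pixel threshold are illustrative assumptions.

Vec3 = tuple[float, float, float]


def project_point(p_world: Vec3, R, t: Vec3, fx, fy, cx, cy):
    """Pinhole projection: world point -> camera frame -> pixel (u, v)."""
    # Camera-frame coordinates: X_c = R @ X_w + t
    xc, yc, zc = (
        sum(R[i][j] * p_world[j] for j in range(3)) + t[i] for i in range(3)
    )
    if zc <= 0:
        raise ValueError("point is behind the camera")
    return fx * xc / zc + cx, fy * yc / zc + cy


def flag_misaligned(points_3d, points_2d, camera, max_error_px=5.0):
    """Return (index, error) for keypoints whose reprojection error is too large."""
    flagged = []
    for i, (p3, (u_ann, v_ann)) in enumerate(zip(points_3d, points_2d)):
        u, v = project_point(p3, **camera)
        error = math.hypot(u - u_ann, v - v_ann)
        if error > max_error_px:
            flagged.append((i, error))
    return flagged


# Usage: identity rotation, camera at the origin, 500 px focal length.
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
camera = {"R": identity, "t": (0.0, 0.0, 0.0),
          "fx": 500.0, "fy": 500.0, "cx": 320.0, "cy": 240.0}
points_3d = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0)]
points_2d = [(320.0, 240.0), (380.0, 240.0)]  # second point is 10 px off
print(flag_misaligned(points_3d, points_2d, camera))  # [(1, 10.0)]
```

Points flagged this way would go back to an annotator for review; in a real pipeline the camera parameters would come from calibration rather than being hard-coded.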

In total, we annotated 20,000 images (18,000 2D images and 2,000 3D projections). All of the work was completed in seven months.
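For a sense of scale, these totals imply the following average throughput and mix, assuming the work was spread evenly across the seven months (the case study gives no monthly breakdown):

$$
\frac{20{,}000\ \text{images}}{7\ \text{months}} \approx 2{,}857\ \text{images per month}, \qquad
\frac{2{,}000\ \text{3D projections}}{20{,}000\ \text{images}} = 10\%
$$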

Results


We have a minimum threshold for starting any new project, which is 735 productive man-hours a month (equivalent to 5 graphic annotators working on the task monthly).