Why Data Annotation Software Still Needs a Human Touch

In an era when artificial intelligence and machine learning are transforming industries, AI data annotation software has become increasingly important. These tools automate much of the work of labeling data, enabling faster and more efficient algorithm training. Yet despite these advances, the nuanced understanding and contextual awareness that only humans can provide remain essential. This article explains why human involvement in data annotation is not just beneficial but necessary for achieving accuracy, addressing biases, and ensuring the quality of AI models. By examining the limitations of automated systems and the value of human insight, we outline the balance that successful data-driven initiatives need.

Introduction to AI Data Annotation

Data annotation is a foundational step in developing artificial intelligence and machine learning systems. It is the process of labeling or tagging data so that algorithms can learn from it. This involves categorizing various types of data, such as images, text, and audio, so that machines can learn to recognize patterns and make predictions. As AI technologies become more sophisticated, the need for high-quality annotated datasets has grown rapidly. Good annotation not only improves the performance of AI models but also plays a crucial role in minimizing biases and improving the accuracy of outcomes. Understanding its intricacies is essential for anyone navigating the rapidly evolving AI landscape, because it directly affects the effectiveness and reliability of machine learning applications. This is especially true for human annotators, whose judgment shapes the labels that models ultimately learn from.

Understanding Data Annotation: A Critical Component of AI

While data annotation may not be the most glamorous process, it is one of the most important, because it is how an AI system learns to interpret the world it operates in. A newborn baby gradually learns to understand its surroundings and later decides which actions to take based on what it has learned. An ML system needs the same thing: labeled information about real-world objects so that it can make the right decisions.

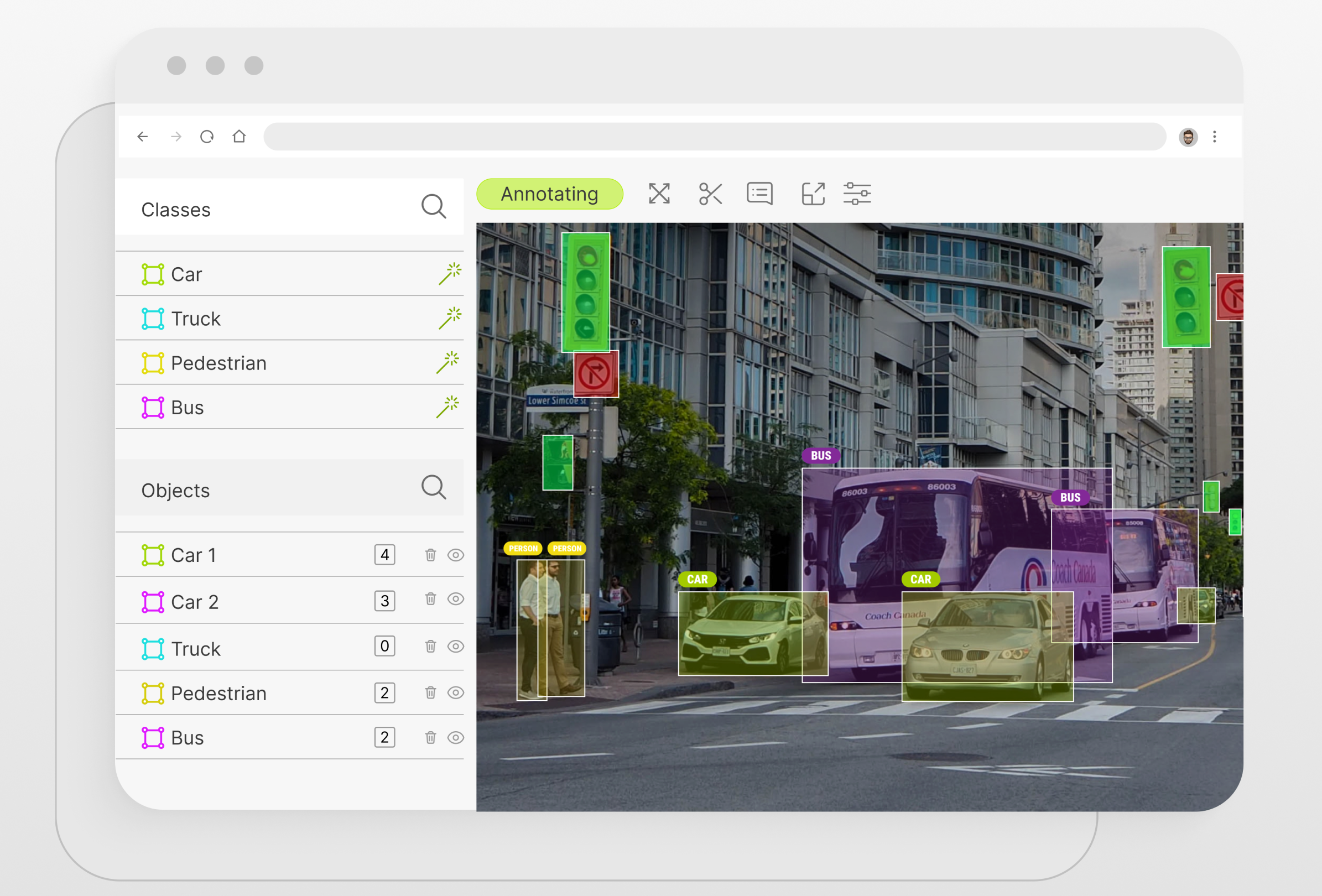

For example, imagine an autonomous vehicle that must make all kinds of driving decisions on the road. How will it know what road markings, street signs, traffic lights, and everything else it encounters mean? High-quality data annotation allows such systems to interpret the real world much as a human would, so that autonomous vehicles and other AI products can make correct decisions when it matters most.

The Rise of AI Data Annotation Tools

As you might imagine, there are many legitimate data annotation tools available, and the right one depends on your project requirements. Your choice will also depend on your industry, such as finance, medicine, or other specialized areas. As a general rule, if your team already has some experience with a particular product, it may be wise to stick with that tool rather than spend time learning a completely new one. Alternatively, you can work with an offshore team that already knows your preferred tool. Offshore providers draw on a wide talent pool, which makes it easier to assemble a team with the right experience.

Challenges of Relying Solely on AI for Data Annotation

While AI has made significant strides in automating data annotation, relying solely on automated systems poses several challenges that can compromise the quality and reliability of the results. Understanding these limitations is crucial, because they show why human expertise is needed to ensure accurate, unbiased, and contextually relevant annotations:

- Contextual Understanding – AI may struggle to grasp nuanced context or subtleties in data, leading to misinterpretations. For example, sarcasm in text or ambiguous images can be difficult for algorithms to accurately label.

- Bias and Fairness – AI systems can perpetuate or even amplify existing biases present in training data. Without human oversight, these biases can result in skewed annotations, adversely affecting model performance and fairness.

- Complexity of Data – Certain datasets, especially those with intricate or multifaceted characteristics (like medical images or legal documents), may require specialized knowledge that AI lacks. This complexity often necessitates human expertise for accurate annotation.

- Adaptability – AI models may struggle to adapt to new or evolving data types without retraining. Human annotators can apply judgment and adjust to changing contexts more fluidly.

- Quality Assurance – While AI can process data quickly, it may produce lower-quality annotations that require extensive review. Human oversight is crucial for ensuring accuracy and consistency.
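Teams often make consistency measurable by comparing two sets of labels for the same items with an agreement statistic such as Cohen's kappa. For example, they might compare a model's pre-labels with a human reviewer's labels. The Python sketch below is a minimal, assumption-based illustration. The function name `cohens_kappa` and the sample labels are hypothetical. In practice, you might use an existing implementation such as scikit-learn's `cohen_kappa_score` instead.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators (e.g. AI pre-labels vs. a human reviewer)
    who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, estimated from each annotator's own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical label for every item.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical sentiment labels for six text snippets.
ai_labels = ["pos", "neg", "pos", "pos", "neg", "neu"]
human_labels = ["pos", "neg", "neg", "pos", "neg", "neu"]
print(round(cohens_kappa(ai_labels, human_labels), 3))  # 0.739
```

A kappa of 1.0 means perfect agreement, and 0 means agreement no better than chance. Consistently low values on a batch are a signal that it needs closer human review.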

Why Human Annotators are Still Essential

Despite advancements in machine learning algorithms, there are still numerous instances where human judgment is irreplaceable. Complex tasks such as fine-grained object detection, understanding the context in natural language processing, or interpreting medical images often require a level of nuance and understanding that machines struggle to replicate. Humans can identify subtle patterns, anomalies, and inconsistencies that might escape automated systems, leading to more accurate and reliable annotations.

There are also project-specific considerations. For example, if you are building an AI speech recognition tool, be aware that fully automated speech recognition systems may struggle to transcribe spoken words reliably. This is especially likely when accents, dialects, or other speech variations are present. Such problems can introduce errors into the transcriptions and into any annotations built on them. In autonomous driving projects, automated systems can misread or misclassify road situations, which creates significant safety risks.

Financial Annotation: A Case for Human Expertise

Human data annotators are essential for financial data annotation due to the complexity and nuance inherent in financial documents and transactions. Unlike more straightforward data types, financial data often contains intricate details, contextual subtleties, and regulatory implications that require expert judgment. For instance, annotators must accurately interpret terms and conditions, identify fraud indicators, and differentiate between various financial instruments. Additionally, the rapidly changing landscape of financial regulations and market dynamics necessitates a level of adaptability and critical thinking that AI alone cannot provide. By leveraging human expertise, organizations can ensure more precise annotations, ultimately leading to more robust and reliable AI models capable of making informed financial decisions.

Assessing the Time and Quality of Data Annotation

Every project is unique and will therefore have different timeframe requirements. Many factors also determine how long it takes to assess whether a particular annotator is a good fit, or how long a pilot project will run. What you ultimately want to evaluate is the relationship between time and quality.

In a perfect world, a vendor would complete the entire data annotation project in a day or two with perfect accuracy. That is not realistic. Instead, consider your own internal requirements: how long it is reasonable for the annotation work to take, and what level of accuracy you need. Be sure to discuss both with the vendor before deciding how to implement the project.
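One way to put numbers on this discussion is to run a small pilot batch and score it against a gold-standard subset labeled by your own team. You can then check both accuracy and throughput against your requirements. The Python sketch below assumes exactly that setup. `PilotResult`, `meets_requirements`, and the threshold values are hypothetical names chosen for illustration, not part of any vendor's tooling.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    """Hypothetical summary of one pilot annotation batch."""
    predicted: list[str]  # labels delivered by the vendor
    gold: list[str]       # your reference labels for the same items
    hours_spent: float    # wall-clock hours the batch took

    def accuracy(self) -> float:
        # Share of delivered labels that match the gold reference.
        if len(self.predicted) != len(self.gold) or not self.gold:
            raise ValueError("predicted and gold must be non-empty and equal length")
        matches = sum(p == g for p, g in zip(self.predicted, self.gold))
        return matches / len(self.gold)

    def items_per_hour(self) -> float:
        return len(self.gold) / self.hours_spent


def meets_requirements(result: PilotResult, min_accuracy: float,
                       min_items_per_hour: float) -> bool:
    # Both thresholds come from your own internal requirements.
    return (result.accuracy() >= min_accuracy
            and result.items_per_hour() >= min_items_per_hour)


pilot = PilotResult(predicted=["car", "sign", "car", "light"],
                    gold=["car", "sign", "truck", "light"],
                    hours_spent=0.5)
print(pilot.accuracy())        # 0.75
print(pilot.items_per_hour())  # 8.0
print(meets_requirements(pilot, min_accuracy=0.9, min_items_per_hour=5))  # False
```

Keeping the two thresholds separate makes the time/quality trade-off explicit. A fast batch that misses the accuracy bar fails just as clearly as an accurate batch that is too slow.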

Blending AI Tools with Human Insights

Blending AI tools with human insights creates a powerful synergy that enhances the effectiveness of data annotation and overall decision-making processes. While AI excels at processing vast amounts of data quickly and identifying patterns, human annotators bring contextual understanding, critical thinking, and domain-specific knowledge to the table. This collaboration allows for the identification of nuanced details that AI might overlook, such as cultural references in text or subtle trends in financial data. Moreover, human oversight can help mitigate biases inherent in AI algorithms, ensuring a more equitable and accurate output. By combining the efficiency of AI with the depth of human insight, organizations can achieve higher-quality results, driving innovation and informed decision-making across various fields.
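One common way to put this division of labor into practice is confidence-based routing. A model pre-labels each item, high-confidence labels are accepted automatically, and the rest go to human annotators. The sketch below assumes your model outputs a confidence score between 0 and 1 for each label. `PreLabel`, `route`, and the 0.9 threshold are hypothetical.

```python
from typing import NamedTuple


class PreLabel(NamedTuple):
    item_id: str
    label: str
    confidence: float  # model's score in [0, 1] (assumed)


def route(prelabels, threshold=0.9):
    """Split AI pre-labels into auto-accepted items and a human review queue."""
    auto_accepted, needs_review = [], []
    for p in prelabels:
        if p.confidence >= threshold:
            auto_accepted.append(p)
        else:
            # Low-confidence labels go to a human annotator.
            needs_review.append(p)
    return auto_accepted, needs_review


batch = [
    PreLabel("img-001", "stop_sign", 0.97),
    PreLabel("img-002", "yield_sign", 0.62),
    PreLabel("img-003", "traffic_light", 0.91),
]
auto, review = route(batch)
print([p.item_id for p in review])  # ['img-002']
```

Model confidence scores are not always well calibrated. It is therefore worth spot-checking a sample of the auto-accepted items as well, and tuning the threshold against human-reviewed data.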

Future Trends in Data Annotation: The Human-AI Collaboration

The future of data annotation is increasingly shaped by the collaboration between humans and AI, leading to several emerging trends:

- Enhanced Hybrid Annotation Models: Combining the strengths of AI and human annotators will become more prevalent. AI will handle routine tasks, while humans will focus on complex annotations, allowing for increased efficiency and accuracy.

- Interactive Annotation Tools: Future annotation platforms will likely incorporate interactive features that enable annotators to provide real-time feedback to AI systems, helping to refine and improve model performance continuously.

- Crowdsourced Annotations: Crowdsourcing will play a larger role, utilizing diverse human insights to enrich datasets and reduce biases. This trend will harness the collective intelligence of a broader population, enhancing data diversity.

- AI-Assisted Decision Making: AI tools will assist human annotators by suggesting potential labels or highlighting areas of interest, enabling faster and more accurate decision-making while retaining human judgment.

- Domain-Specific Expertise: As industries evolve, there will be a growing demand for domain-specific annotators who can provide specialized knowledge in areas such as healthcare, finance, and legal fields, ensuring that annotations meet industry standards.
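For crowdsourced annotation, several people typically label each item and their votes are combined. The following is a minimal sketch that assumes simple majority voting; more sophisticated aggregation methods exist. Items with a tie or weak agreement come back without a label so that a domain expert can decide. The function name `aggregate_votes` and the 0.6 agreement threshold are hypothetical.

```python
from collections import Counter


def aggregate_votes(votes, min_agreement=0.6):
    """Majority-vote one item's crowd labels.

    Returns (label, agreement), or (None, agreement) when the item
    should be escalated to an expert reviewer.
    """
    if not votes:
        raise ValueError("need at least one vote")
    counts = Counter(votes)
    (top_label, top_count), *rest = counts.most_common()
    agreement = top_count / len(votes)
    # A tie for first place or weak agreement goes to an expert instead.
    tied = bool(rest) and rest[0][1] == top_count
    if tied or agreement < min_agreement:
        return None, agreement
    return top_label, agreement


label, agreement = aggregate_votes(["cat", "cat", "dog"])
print(label)                             # cat
print(aggregate_votes(["cat", "dog"]))   # (None, 0.5)
```

Returning the agreement score alongside the label lets you track which items or annotator groups disagree most. That information is useful when checking crowdsourced data for bias.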

Conclusion

While data annotation software has revolutionized the way we label and organize vast amounts of information, it is clear that the human touch remains indispensable in this process. The nuanced understanding, contextual awareness, and ethical considerations that human annotators bring are vital for producing high-quality, unbiased datasets that drive effective AI models. As we move forward in an increasingly data-driven world, fostering collaboration between AI tools and human expertise will be crucial to navigating complexities and ensuring that our technologies reflect the diverse and dynamic nature of real-world scenarios. Embracing this hybrid approach will not only enhance the accuracy of AI applications but also build a more responsible and equitable future in artificial intelligence.

Trust Mindy Support With All of Your Data Annotation Needs

Mindy Support is a global provider of data annotation services and is trusted by Fortune 500 and GAFAM companies. With more than ten years of experience under our belt and offices and representatives in Cyprus, Ukraine, Poland, Bulgaria, the Philippines, India, and Egypt, Mindy Support's team now stands strong with 2000+ professionals helping companies with their most advanced data annotation challenges.