What’s Better for Your Autonomous Vehicle Project: LiDAR or Radar?

As companies advance towards fully autonomous vehicles, the debate over which technology is most appropriate for guiding these self-driving cars has grown louder. LiDAR sensors and Radar systems are two of the top competitors in this field. Determining which technology is better for autonomous vehicle navigation is a difficult undertaking that calls for a detailed analysis of their capabilities and limitations. Both systems have unique strengths and shortcomings.

In this article, we will take a look at the capabilities of both LiDAR and Radar, as well as the data annotation needed to train AI systems.

LiDAR vs. Radar: How Do They Compare?

LiDAR sensors build precise, high-resolution maps of the area around a vehicle by measuring distances with laser light. In recent years, this technology has become increasingly popular, with many industry insiders praising its accuracy and its capacity to produce detailed three-dimensional representations of objects in real time. Because the technology can pick up even slight details, like the texture of a road or the shape of a pedestrian, LiDAR has become a popular choice among businesses creating autonomous vehicles.
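As a rough illustration of how a laser pulse turns into a distance, the sketch below applies the time-of-flight relation (distance = speed of light × round-trip time / 2). It assumes a simple pulsed sensor; the function name `tof_distance_m` is hypothetical, and real LiDAR units apply further calibration and filtering that is not shown here.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in a vacuum


def tof_distance_m(round_trip_time_s: float) -> float:
    """Estimate the distance to a target from a pulse's round-trip time.

    Hypothetical helper for illustration only; it ignores sensor
    calibration, atmospheric effects, and timing jitter.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A pulse that returns after 200 nanoseconds hit something about 30 m away.
print(f"{tof_distance_m(200e-9):.2f} m")  # prints "29.98 m"
```

Repeating this measurement across many laser directions per second is what produces the dense set of 3D points the sensor reports.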

Radar also plays a role in car safety systems. It uses radio waves to detect objects and measure their distance, velocity, and direction. Although Radar may not provide as much detail as LiDAR, it still has a number of advantages that make it a strong candidate for navigation in autonomous vehicles. One is that, in contrast to LiDAR, Radar is less affected by weather conditions like fog, rain, or snow. Additionally, Radar systems are typically cheaper and use less power than their LiDAR equivalents.
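One way Radar can measure velocity is through the Doppler shift of the reflected signal. The sketch below is a simplified illustration, not a description of any particular product: it assumes a 77 GHz carrier (a band commonly used by automotive Radar), and the function name `radial_velocity_mps` is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def radial_velocity_mps(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Estimate a target's radial speed from the measured Doppler shift.

    Hypothetical helper. Uses the standard approximation
    v = f_d * c / (2 * f_c), valid when the target is far slower than light.
    A positive shift means the target is approaching the sensor.
    """
    if carrier_hz <= 0:
        raise ValueError("carrier frequency must be positive")
    return doppler_shift_hz * SPEED_OF_LIGHT_M_PER_S / (2.0 * carrier_hz)


# A 10 kHz shift at 77 GHz corresponds to roughly 19.5 m/s (about 70 km/h).
print(f"{radial_velocity_mps(10_000):.1f} m/s")
```

Note that this yields only the component of velocity along the line of sight; sideways motion produces little or no Doppler shift.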

For more information about the differences between LiDAR and Radar, please review our article.

What are the Challenges of Implementing Both LiDAR and Radar?

Despite LiDAR’s outstanding capabilities, a number of problems could prevent it from being widely used in the autonomous car sector. Cost is one of the biggest obstacles. LiDAR sensors are still too expensive for many automakers, despite prices gradually falling over the past few years. This is especially true when compared with the comparatively inexpensive Radar systems. This has prompted some businesses to look into other options, like employing several less expensive LiDAR sensors to provide the same level of accuracy as a single high-end unit.

Another possible problem is LiDAR’s vulnerability to interference from other light sources. In a world where autonomous vehicles are the norm, it is easy to envision several LiDAR-equipped cars driving in close proximity, with their lasers interfering with one another. The resulting signal could become too “noisy” for the sensors to map their surroundings accurately.

Radar systems also have their fair share of difficulties. Although they are less affected by environmental conditions, they sometimes have trouble telling stationary objects apart from ones that are moving slowly. In some situations, such as navigating a packed parking lot or approaching a stopped car at an intersection, this can make it hard for a Radar-equipped vehicle to assess its surroundings reliably.

Do You Need to Choose Between One or the Other?

The debate over whether LiDAR or Radar is better for autonomous car navigation is likely to continue for a while. Each technology has its own set of advantages and disadvantages, so a combination of the two may well be the best way to ensure that self-driving cars operate safely and reliably. It will be interesting to see how these two technologies continue to shape autonomous car navigation as the market develops and new innovations emerge.

What Types of Data Annotation Are Necessary for LiDAR and Radar?

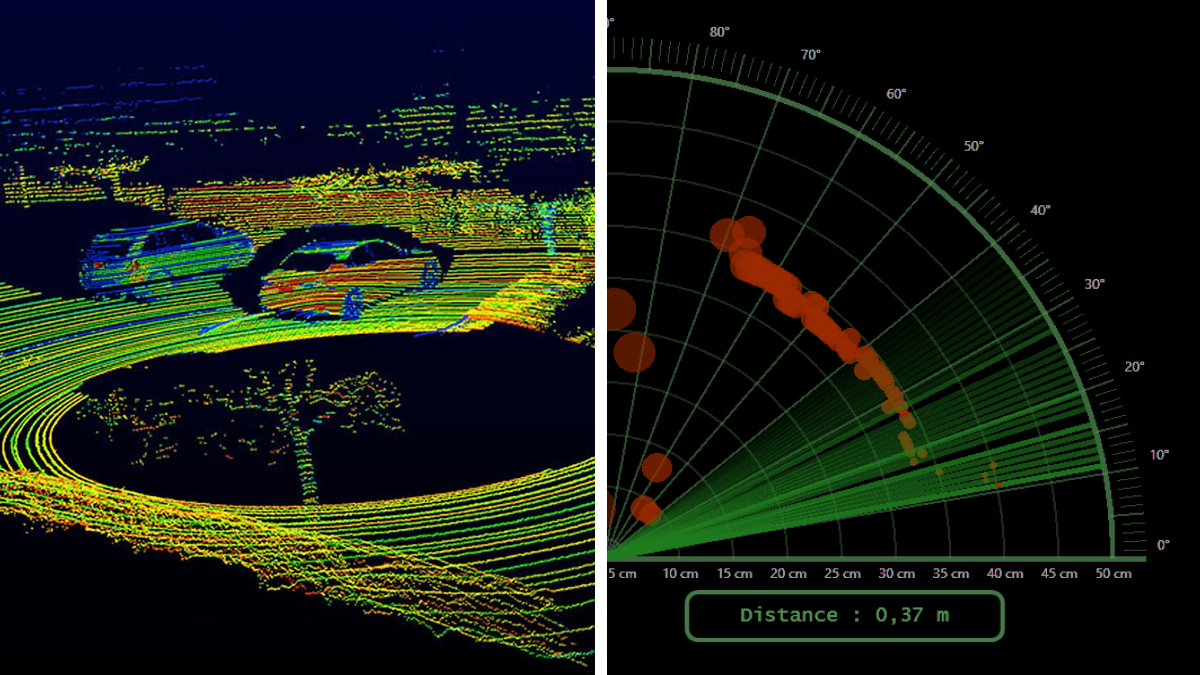

LiDAR sensors create a 3D Point Cloud, which is a digital representation of how the AI system sees the physical world. These 3D Point Clouds need to be annotated with methods ranging from cuboids and polygons to more advanced methods like polylines. The latter provides the system with ground truth annotation of curbs, road pavement, and lane markers. Semantic segmentation may also be necessary; its goal is to identify and label the different objects and parts within a 3D scene.
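To make the cuboid idea concrete, here is a minimal sketch of how a cuboid annotation could be turned into per-point labels. It assumes axis-aligned boxes for simplicity (production annotation tools typically also store a rotation angle), and the `Cuboid` class and `label_points` function are hypothetical names, not part of any specific annotation tool.

```python
from dataclasses import dataclass

Point = tuple[float, float, float]  # (x, y, z) in metres


@dataclass(frozen=True)
class Cuboid:
    """Hypothetical axis-aligned cuboid annotation (no rotation)."""
    label: str
    center: Point
    size: Point  # extent along x, y, and z

    def contains(self, p: Point) -> bool:
        # A point is inside if it lies within half the extent on every axis.
        return all(abs(p[i] - self.center[i]) <= self.size[i] / 2 for i in range(3))


def label_points(points: list[Point], cuboids: list[Cuboid],
                 background: str = "unlabeled") -> list[str]:
    """Assign each point the label of the first cuboid that contains it."""
    return [
        next((c.label for c in cuboids if c.contains(p)), background)
        for p in points
    ]


points = [(10.0, 0.5, 0.8), (10.4, -0.3, 1.1), (25.0, 3.0, 0.2)]
car = Cuboid("car", center=(10.0, 0.0, 0.8), size=(4.5, 1.8, 1.6))
print(label_points(points, [car]))  # ['car', 'car', 'unlabeled']
```

The per-point labels produced this way resemble the output of semantic segmentation, although real segmentation annotation usually follows object outlines more tightly than a box can.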

Radar data can be annotated with the same methods as LiDAR data. However, if you decide to use both LiDAR and Radar in your project, you can combine the two data sources and use multisensor annotation. This involves combining annotations from multiple sources, such as camera images, LiDAR, Radar, or other sensors. Fusing data from different sensors gives the system a more comprehensive and accurate understanding of the environment.
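As a simplified illustration of combining the two sources, the sketch below attaches a Radar velocity reading to each LiDAR-annotated object by nearest-neighbor matching. It assumes both sensors already report positions in the same vehicle-centered coordinate frame; the function `fuse`, the dictionary keys, and the 2 m matching threshold are all hypothetical choices made for this example.

```python
import math


def fuse(lidar_objects: list[dict], radar_detections: list[dict],
         max_dist_m: float = 2.0) -> list[dict]:
    """Attach the nearest Radar velocity to each LiDAR object.

    Hypothetical helper: objects with no Radar detection within
    max_dist_m get a velocity of None.
    """
    fused = []
    for obj in lidar_objects:
        best, best_dist = None, max_dist_m
        for det in radar_detections:
            dist = math.dist(obj["xy"], det["xy"])  # Euclidean distance
            if dist <= best_dist:
                best, best_dist = det, dist
        velocity = best["radial_velocity_mps"] if best else None
        fused.append({**obj, "radial_velocity_mps": velocity})
    return fused


lidar = [
    {"label": "car", "xy": (12.0, 1.0)},
    {"label": "pedestrian", "xy": (5.0, -3.0)},
]
radar = [{"xy": (12.4, 0.8), "radial_velocity_mps": -3.2}]

for item in fuse(lidar, radar):
    print(item["label"], item["radial_velocity_mps"])
# car -3.2
# pedestrian None
```

Here the LiDAR annotation supplies the object's class and shape while Radar contributes its speed, which is the kind of complementary information multisensor annotation aims to capture.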

Trust Mindy Support With All of Your Data Annotation Needs

Mindy Support is a global provider of data annotation services and is trusted by Fortune 500 and GAFAM companies. With more than ten years of experience under our belt and offices and representatives in Cyprus, Poland, Romania, the Netherlands, India, UAE, and Ukraine, Mindy Support’s team now stands strong with 2,000+ professionals helping companies with their most advanced data annotation challenges.