Using Imaging Radar to Increase the Accuracy of ADAS Systems and Autonomous Vehicles

The automotive sector has long used radar. Since the 1990s, vehicles have used radar for adaptive cruise control, and many current models carry up to five radar sensors. Radar is excellent at measuring the distance to another vehicle and how quickly it is approaching. However, current-generation automotive sensors have very poor resolution, especially in angle, so they struggle to separate closely spaced objects or tell what those objects are. Because of this, imaging radar is expected to grow significantly over the coming years.

In this article, we will take a look at imaging radar and its role in the development of AI, as well as the data annotation required to train AI systems that rely on imaging radar.

What is Imaging Radar?

Imaging radar, also known as high-definition or high-resolution radar, aims to close some of the technological gaps between LiDAR and current-generation radar while preserving the core benefits of radio-frequency sensors. Like LiDAR, radar is an active sensor: it sends out a signal and listens for reflections. Because the sensor is the source and the signal travels at the known speed of light, the round-trip time of a reflection gives the distance to the reflecting object with great accuracy. Fog, rain, snow, and poor lighting also have far less effect on radar than on cameras or LiDAR.
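To make the ranging principle concrete, here is a minimal Python sketch of the idealized time-of-flight relation: distance = (speed of light × round-trip delay) / 2. It is an illustration only. Most automotive radars are FMCW designs that infer the delay from a beat frequency rather than timing pulses directly, and the function names below are our own.

```python
# Speed of light in vacuum, m/s (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(delay_s: float) -> float:
    """Distance (m) to a reflector, given the echo's round-trip delay (s).

    The signal travels out and back, so the one-way distance is half of
    speed x time.
    """
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    return SPEED_OF_LIGHT_M_S * delay_s / 2.0


def round_trip_from_range(range_m: float) -> float:
    """Inverse relation: round-trip delay (s) for an object at range_m (m)."""
    return 2.0 * range_m / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    # An echo that returns 2 microseconds after transmission.
    print(f"range: {range_from_round_trip(2e-6):.1f} m")
    # How long an echo from an object 300 m away takes to come back.
    print(f"delay at 300 m: {round_trip_from_range(300.0) * 1e6:.2f} us")
```

An echo arriving 2 µs after transmission corresponds to roughly 300 m, which shows how fine the timing has to be: a single nanosecond of delay equals about 15 cm of range.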

Some of the most advanced imaging radars use sophisticated architectures to achieve accurate object recognition and greater dynamic range. Examples include Massive MIMO (multiple-input, multiple-output) antenna designs, high-end radio-frequency design developed in-house, and high-fidelity sampling. By combining an integrated system-on-chip design, which maximizes processing efficiency, with advanced algorithms for interpreting radar data, one such radar provides a detailed four-dimensional view of the environment (range, azimuth, elevation, and velocity) out to 300 meters and beyond. With a 140-degree field of view at medium range and a 170-degree field of view at close range, it can detect pedestrians, vehicles, and obstacles more precisely than other sensors, which might miss them entirely, even on busy urban streets.
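A rough way to see why MIMO matters: with N_tx transmit and N_rx receive antennas, a MIMO radar synthesizes N_tx × N_rx virtual channels, and a uniform linear array of N elements with half-wavelength spacing has an angular resolution of roughly 2/N radians straight ahead. The Python sketch below assumes hypothetical antenna counts (3×4 for a conventional radar, 12×16 for an imaging radar) and treats all virtual channels as one line, a simplification, since real designs split their channels between azimuth and elevation.

```python
import math


def virtual_channels(num_tx: int, num_rx: int) -> int:
    """A MIMO radar forms one virtual receive channel per TX/RX antenna pair."""
    return num_tx * num_rx


def angular_resolution_deg(num_elements: int,
                           spacing_wavelengths: float = 0.5,
                           angle_deg: float = 0.0) -> float:
    """Rough angular resolution of a uniform linear (virtual) array.

    Uses theta_res ~= lambda / (N * d * cos(theta)). Because the element
    spacing d is given in wavelengths, lambda cancels out. With d = 0.5
    and theta = 0 this reduces to 2 / N radians.
    """
    theta = math.radians(angle_deg)
    res_rad = 1.0 / (num_elements * spacing_wavelengths * math.cos(theta))
    return math.degrees(res_rad)


if __name__ == "__main__":
    # Hypothetical antenna counts, chosen only for illustration.
    for name, tx, rx in [("conventional", 3, 4), ("imaging", 12, 16)]:
        n = virtual_channels(tx, rx)
        print(f"{name}: {n} virtual channels, ~{angular_resolution_deg(n):.2f} deg")
```

With these assumed counts, the estimate improves from roughly 9.5 degrees to about 0.6 degrees, which illustrates why more virtual channels help a radar separate objects that sit close together.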

How Can Radar Imaging Benefit the Automotive Sector?

One advantage imaging radar has over traditional radar is its ability to measure elevation, so it can estimate an object's height and use it to classify the object, for example to distinguish an overhead bridge from a stopped vehicle in the lane. This could improve ADAS features such as blind-spot detection and passenger monitoring, among many other applications. In addition, radar is generally less expensive than LiDAR, which could help bring AI-driven driver assistance to a mass market.
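The height estimate comes down to simple trigonometry: for a detection at range r and elevation angle φ, the reflection sits about r·sin(φ) above or below the sensor. The Python sketch below assumes a flat road, a level sensor mounted 0.5 m above it, and a hypothetical 4-meter clearance threshold. The class names and thresholds are illustrative and not taken from any production system.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarDetection:
    """One imaging-radar detection in sensor-centred spherical coordinates."""
    range_m: float        # straight-line distance from the sensor
    azimuth_deg: float    # horizontal angle, 0 = straight ahead (unused for height)
    elevation_deg: float  # vertical angle, 0 = the sensor's horizontal plane


def height_above_road(det: RadarDetection, sensor_height_m: float) -> float:
    """Height of the reflection above the road.

    Assumes a flat road and a level sensor mounted sensor_height_m above it.
    """
    return sensor_height_m + det.range_m * math.sin(math.radians(det.elevation_deg))


def coarse_class(det: RadarDetection,
                 sensor_height_m: float = 0.5,
                 overhead_clearance_m: float = 4.0) -> str:
    """Hypothetical rule: reflections above the clearance height are overhead
    structures (bridges, gantries); anything lower may block the vehicle."""
    if height_above_road(det, sensor_height_m) >= overhead_clearance_m:
        return "overhead structure"
    return "potential obstacle"


if __name__ == "__main__":
    bridge = RadarDetection(range_m=100.0, azimuth_deg=0.0, elevation_deg=3.0)
    stopped_car = RadarDetection(range_m=100.0, azimuth_deg=0.0, elevation_deg=0.3)
    for name, det in [("bridge", bridge), ("stopped car", stopped_car)]:
        h = height_above_road(det, 0.5)
        print(f"{name}: {h:.1f} m -> {coarse_class(det)}")
```

Under these assumptions, a return 100 m away at a 3° elevation lands about 5.7 m above the road and is treated as an overhead structure, while one at 0.3° lands about 1 m up and remains a potential obstacle. A conventional radar without elevation measurement cannot make this distinction.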

We also have to consider the effect of imaging radar on the development of self-driving cars in general. For highway driving, some of the most sophisticated imaging radar systems have a 350-meter sensing range, which is longer than most LiDAR solutions. Because radio waves pass through fog, rain, and dust, radar works in most weather conditions. It is also worth noting that it can measure an object's instantaneous radial velocity with a precision of about 0.2 m/s, which is crucial for predicting a vehicle's trajectory.
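Radar measures speed through the Doppler effect: a reflector moving radially at velocity v shifts the echo frequency by f_d = 2v/λ, so v = f_d·λ/2. For FMCW radar, the velocity resolution of one frame is commonly approximated as λ/(2·T_frame). The Python sketch below assumes a 77 GHz carrier and reads the 0.2 m/s figure as a velocity resolution, which may not be exactly how any particular vendor defines it.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(carrier_hz: float) -> float:
    """Carrier wavelength in meters."""
    return SPEED_OF_LIGHT_M_S / carrier_hz


def radial_velocity_m_s(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Radial velocity from the two-way Doppler shift: v = f_d * lambda / 2.

    Sign convention used here: a positive shift means the target is closing.
    """
    return doppler_shift_hz * wavelength_m(carrier_hz) / 2.0


def velocity_resolution_m_s(frame_time_s: float, carrier_hz: float) -> float:
    """Approximate FMCW velocity resolution for one frame: lambda / (2 * T_frame)."""
    return wavelength_m(carrier_hz) / (2.0 * frame_time_s)


def frame_time_for_resolution_s(v_res_m_s: float, carrier_hz: float) -> float:
    """Frame duration needed to reach a target velocity resolution."""
    return wavelength_m(carrier_hz) / (2.0 * v_res_m_s)


if __name__ == "__main__":
    f_c = 77e9  # assumed carrier frequency in the 76-81 GHz automotive band
    print(f"wavelength: {wavelength_m(f_c) * 1e3:.2f} mm")
    print(f"10 kHz Doppler shift -> {radial_velocity_m_s(10e3, f_c):.2f} m/s")
    print(f"frame time for 0.2 m/s: {frame_time_for_resolution_s(0.2, f_c) * 1e3:.1f} ms")
```

At 77 GHz the wavelength is about 3.9 mm, so under these assumptions resolving 0.2 m/s needs a frame of roughly 9.7 ms. Longer frames buy finer velocity resolution at the cost of update rate.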

Imaging radar sensors are well positioned to fill this role. Better price-performance, greater scalability, and the shift from multiple chipsets and sensors to single-chip solutions that detect at short, medium, and long range simultaneously are expected to boost the adoption of imaging sensors. They could also reduce sensor suite costs for ADAS and the autonomous vehicle market as a whole.

What Type of Data Annotation is Needed for Radar Imaging?

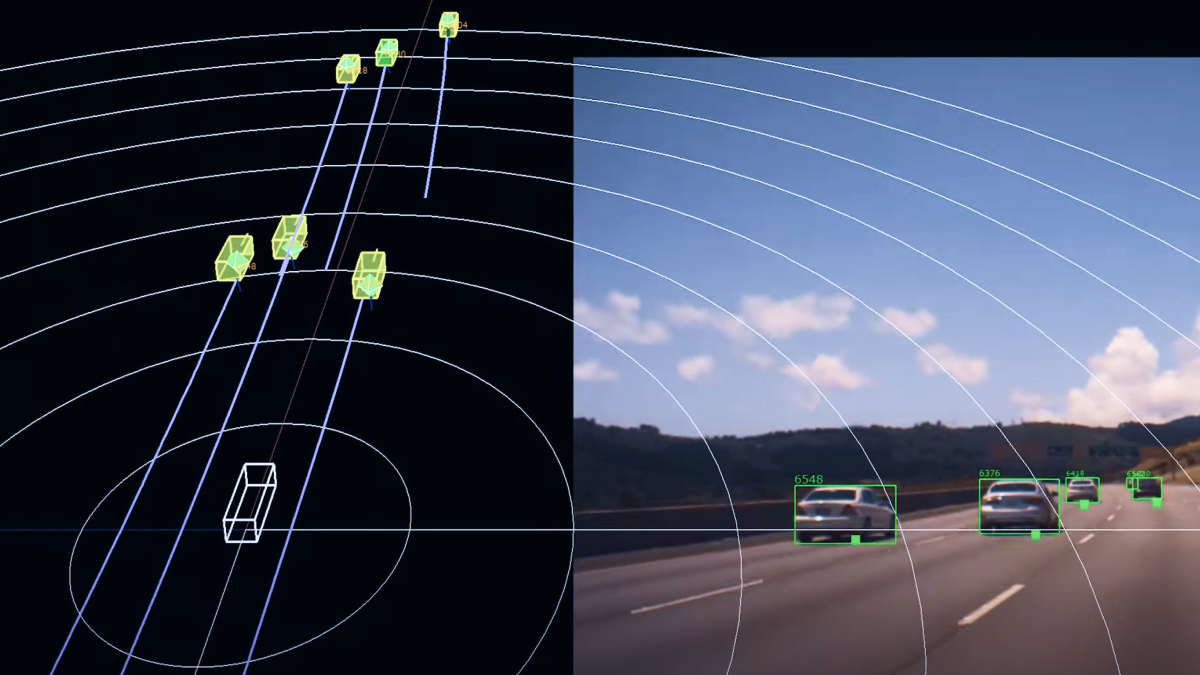

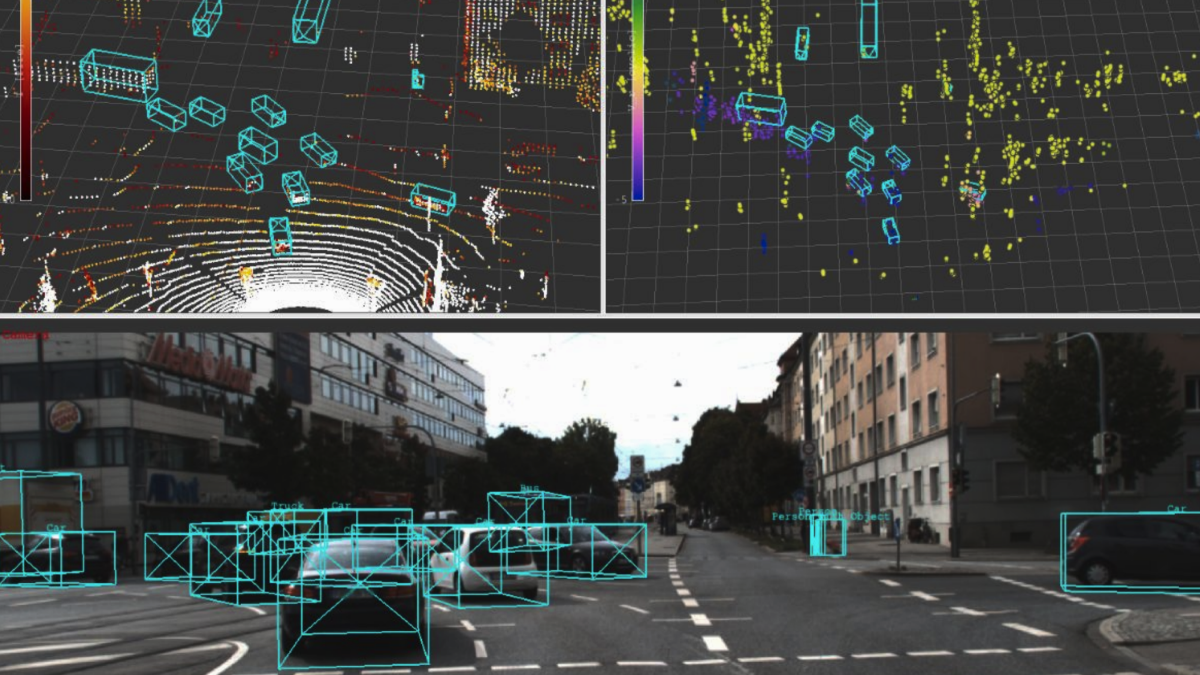

Imaging radars produce a 3D point cloud, which needs to be annotated with cuboids (3D bounding boxes) to identify objects such as vehicles, vulnerable road users, traffic signs, and traffic lights. Other annotation types are also needed, such as polylines to establish ground truth for curbs, road pavement, and lane markings. Finally, models that assign a class label to every pixel in an image (or every point in a point cloud) require semantic segmentation annotation, which identifies the groups of pixels that belong to each category. An autonomous vehicle, for instance, needs to recognize other cars, pedestrians, traffic signs, pavement, and other elements of the road.
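As an illustration of what a cuboid label carries, here is a minimal Python sketch of a 3D bounding box (center, extents, and yaw) with a test for which radar points fall inside it. The `Cuboid` schema and `points_in_cuboid` helper are hypothetical and do not reflect the format of any particular annotation tool. A check like this could, for example, flag labels that enclose no radar returns, which can happen because radar point clouds are much sparser than LiDAR point clouds.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in meters, vehicle frame


@dataclass
class Cuboid:
    """A 3D bounding-box label (hypothetical schema, not any tool's format)."""
    label: str
    cx: float        # box centre, meters
    cy: float
    cz: float
    length: float    # extent along the box's heading axis
    width: float     # extent across the heading axis
    height: float    # vertical extent
    yaw_rad: float   # heading: rotation about the vertical (z) axis

    def contains(self, point: Point) -> bool:
        """True if the point lies inside (or on) the box."""
        x, y, z = point
        dx, dy, dz = x - self.cx, y - self.cy, z - self.cz
        # Rotate the offset by -yaw so the box axes line up with x and y.
        c, s = math.cos(self.yaw_rad), math.sin(self.yaw_rad)
        local_x = c * dx + s * dy
        local_y = -s * dx + c * dy
        return (abs(local_x) <= self.length / 2
                and abs(local_y) <= self.width / 2
                and abs(dz) <= self.height / 2)


def points_in_cuboid(points: List[Point], box: Cuboid) -> List[Point]:
    """Radar points enclosed by a cuboid label."""
    return [p for p in points if box.contains(p)]


if __name__ == "__main__":
    car = Cuboid("vehicle", cx=10.0, cy=0.0, cz=0.75,
                 length=4.5, width=1.8, height=1.5, yaw_rad=0.0)
    cloud = [(9.0, 0.2, 0.5), (11.5, -0.6, 1.0), (15.0, 0.0, 0.5)]
    hits = points_in_cuboid(cloud, car)
    print(f"{len(hits)} of {len(cloud)} points inside '{car.label}'")
    if not hits:
        print("warning: empty cuboid, check the label")
```

Storing yaw rather than a full rotation reflects the common assumption that road objects rest on the ground plane, so only their heading varies.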

Trust Mindy Support With All of Your Data Annotation Needs

Mindy Support is a global provider of data annotation services and is trusted by Fortune 500 and GAFAM companies. With more than ten years of experience under our belt and offices and representatives in Cyprus, Poland, Romania, the Netherlands, India, the UAE, and Ukraine, Mindy Support's team now stands strong with more than 2,000 professionals helping companies with their most advanced data annotation challenges.