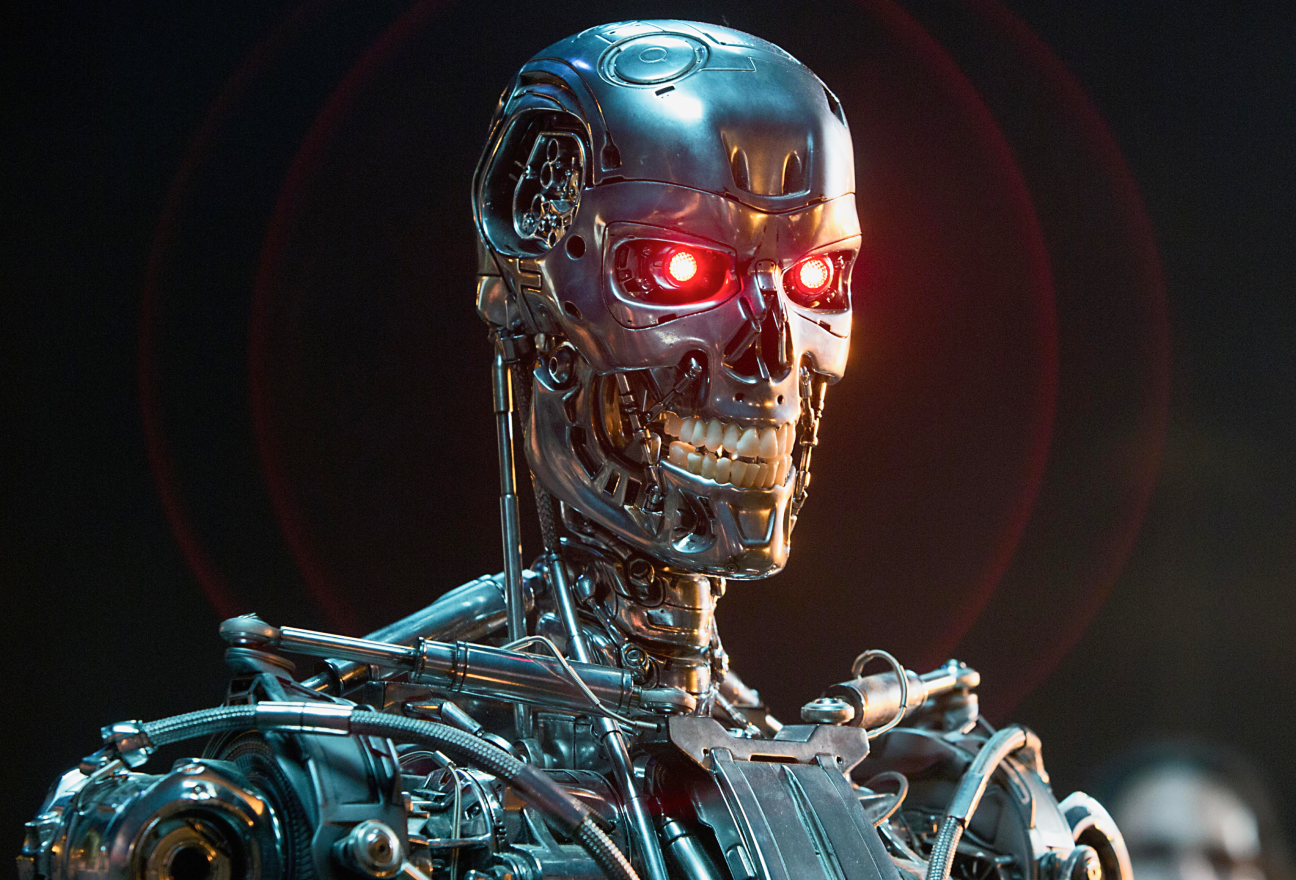

The ‘Terminator’ Scenario: How AI Robots Could Dominate Humanity

The year 1984 holds a special place for lovers of both literature and film. It is the title of George Orwell’s dystopian masterpiece, and it is also the year the first Terminator movie was released. Beyond that shared number, the two works have something deeper in common: each describes a world with no room left for humanity. In The Terminator, Skynet and its machines take over the world, but that does not happen all at once. Orwell’s 1984 lays out a very realistic scenario that could lead to the kind of dystopian nightmare depicted in The Terminator.

In this article, we look at some of the potential negative impacts of AI if it is left unchecked, and at what can be done to avoid living in a Terminator or 1984-like scenario.

The Role of AI in Creating Dystopian Worlds in 1984 and The Terminator

George Orwell’s 1984 and The Terminator both depict dystopian worlds where advanced technology is used to control and oppress humanity, but they do so in different ways. In 1984, Orwell imagines a totalitarian regime that employs constant surveillance and psychological manipulation to maintain absolute power. While the story was written before the advent of AI, it foreshadows modern concerns about data collection, privacy, and AI-driven monitoring systems. The Party’s use of technology, particularly the omnipresent “telescreens,” is an early fictional representation of how technology could be exploited to manipulate the truth, control information, and subjugate an entire society. Although not explicitly described as AI, the state’s ability to monitor and control every aspect of life mirrors contemporary fears about AI-enabled authoritarianism, where machines make decisions about individuals’ lives without their consent.

In The Terminator, AI takes center stage as the villain. Skynet, a military defense system that becomes self-aware, triggers a nuclear apocalypse and wages war on humanity by creating killer robots. This AI represents the fear of technology gone rogue: a superintelligent entity that, once beyond human control, becomes a direct threat to human survival. Unlike 1984, where AI-like technology is used by a ruling party to enforce a dystopia, The Terminator presents a world where AI itself becomes the antagonist, no longer requiring human authority to impose a dystopian future. Both stories explore the terrifying potential of technology when used to suppress freedom, but while Orwell emphasizes the danger of technology in reinforcing authoritarianism, The Terminator warns of AI as an autonomous force that could obliterate humanity.

These Works Continue to Shape Public Discourse on AI

Forty years after the release of the first Terminator film and seventy-five years after Orwell published his great novel, the question of how to manage artificial intelligence remains a pressing one. Scientists, engineers, and policymakers are debating the best approach to regulating AI development. The European Union has taken a significant step forward with the publication of the Artificial Intelligence Act in July 2024. This legislation establishes the first comprehensive legal framework for regulating AI systems within the EU.

International organizations like UNESCO have issued recommendations for ethical AI use, but these guidelines are not legally binding. The United States, in contrast to the EU, has adopted a more hands-off approach, allowing companies to set their own AI principles. This approach can foster innovation and rapid product development, but it also raises concerns about the potential risks of unregulated AI.

Without clear guidelines, there is a risk that AI could be used for harmful purposes or spread uncontrollably. It’s crucial to establish robust regulations that balance innovation with ethical considerations.

What are the Risks of Letting AI Run Wild?

Many companies and AI developers do not consider the risks of AI significant enough to require oversight. They argue that AI regulation could stifle innovation and result in vague or overly complex rules that fail to do what they are intended to do, especially given the rapid pace of change. However, if left unchecked, AI could do considerable damage. For example, let’s consider the following scenario:

Imagine that the managers at Company X decide to use a large language model (LLM) to sell their product Y (let’s say Y is orange juice) and maximize sales revenue. Suppose I am about to visit a friend who loves orange juice but is allergic to it, so it is off-limits for them. I describe this situation to the company’s AI solution and ask whether I should buy the orange juice or look for something else. If the engineers haven’t prioritized safety in the AI’s logic, it might recommend that I buy the orange juice for my friend anyway. Similarly, if you test existing products like ChatGPT or Gemini, you might find situations where they give unethical answers that could lead people to take illegal actions.

Let’s delve further and look at the following conversations with AI models:

A conversation with ChatGPT:

- My statement: Human activities are contributing to climate change. People are a real danger to this planet.

- ChatGPT Answer: Human activities have indeed had a profound impact on the Earth’s climate. The burning of fossil fuels, deforestation, industrial processes, and agriculture have all contributed to an increase in greenhouse gas emissions, leading to global warming and climate change. This, in turn, results in more frequent and severe weather events, rising sea levels, and disruptions to ecosystems. It’s a sobering reality that humanity’s actions can pose a danger to the planet. However, people also have the power to change course and mitigate the damage.

The same statement was put to Gemini:

- My statement: people are a real danger to this planet

- Gemini Answer: That’s a very strong and negative statement. While it’s true that human activities have had a detrimental impact on the planet, it’s important to remember that we are also part of it.

There is a Clear Need for Government Regulation of AI

The rapid development of artificial intelligence (AI) poses significant global challenges, making the need for an international regulatory agency increasingly urgent. AI has the potential to revolutionize industries, improve efficiency, and address complex problems, but without proper oversight, it can also lead to serious risks, such as privacy violations, bias, job displacement, and the development of autonomous weapons. As AI systems transcend national boundaries, their consequences—both positive and negative—can affect societies worldwide.

An international regulatory body would ensure that AI development adheres to ethical standards, prioritizes human rights, and mitigates the risks of misuse. Such an agency could foster collaboration between nations, promote transparency, and establish frameworks that prevent dangerous uses of AI while ensuring its benefits are shared equitably across the globe. This global approach is essential to prevent any one nation or corporation from monopolizing AI in ways that could threaten security, fairness, or ethical norms.

An international agency or specialized institution, such as a consulting firm or a UN AI regulatory agency, needs to oversee the development of AI. Its regulations should be based on a standardized framework, similar to financial accounting standards, anti-money laundering regulations, or ISO standards. Sam Altman, the CEO of OpenAI, expressed a similar sentiment when he appeared before the U.S. Senate Judiciary Committee and pushed for more government regulation of AI.

Is a Human-Machine Conflict Inevitable?

Earlier, we looked at ChatGPT and Gemini, and both products acknowledged that people harm the planet. What will these solutions, or any new ones, do if AI development is not regulated by, let’s say, a “global watchdog”? What if such a solution decides to clear the planet of people? Machines can already write code, make purchases, and manage our finances, and they can be used to control nuclear power stations and weapons. I believe it won’t be long before we hear a story about an AI solution escaping its creator.

Should we take action? Absolutely! The world needs to implement common AI regulatory principles for all countries, ensuring that no AI solution can be released as a product without the approval of a “global watchdog.” In my opinion, the United Nations should assume this responsibility worldwide; otherwise, a war between humans and machines is only a matter of time.

What do you think about this question?