Reinforcement learning with human feedback (RLHF) for LLMs

Reinforcement Learning, with Human Feedback (RLHF) has an impact on the enhancement and refinement of language models (LLMs) by experts. By incorporating input into the training process RLHF bridges the gap between machine learning algorithms and human judgment ensuring that models align more closely with user expectations and ethical considerations. This approach does not enhance the relevance of generated language. Also addresses the nuanced intricacies of human communication that algorithmic methods often overlook. As demand grows for AI systems that’re user friendly and responsive RLHF emerges as a technique in fostering the robust and responsible growth of LLMs.

In this article we will delve into the concept of reinforcement learning from human feedback and its pivotal role in advancing LLM development.

Introduction to RLHF in Large Language Models

Reinforcement Learning with Human Feedback (RLHF) revolutionizes the training language models to follow instructions with human feedback by instructing them based on feedback. Unlike methods, on extensive internet sourced datasets, which can lead to powerful yet biased, inaccurate and ethically questionable models. RLHF addresses these issues by incorporating feedback during the training process leading to nuanced and contextually accurate responses. When humans are involved in the loop, models not produce correct and contextually appropriate text but also better align with human values and ethical standards.

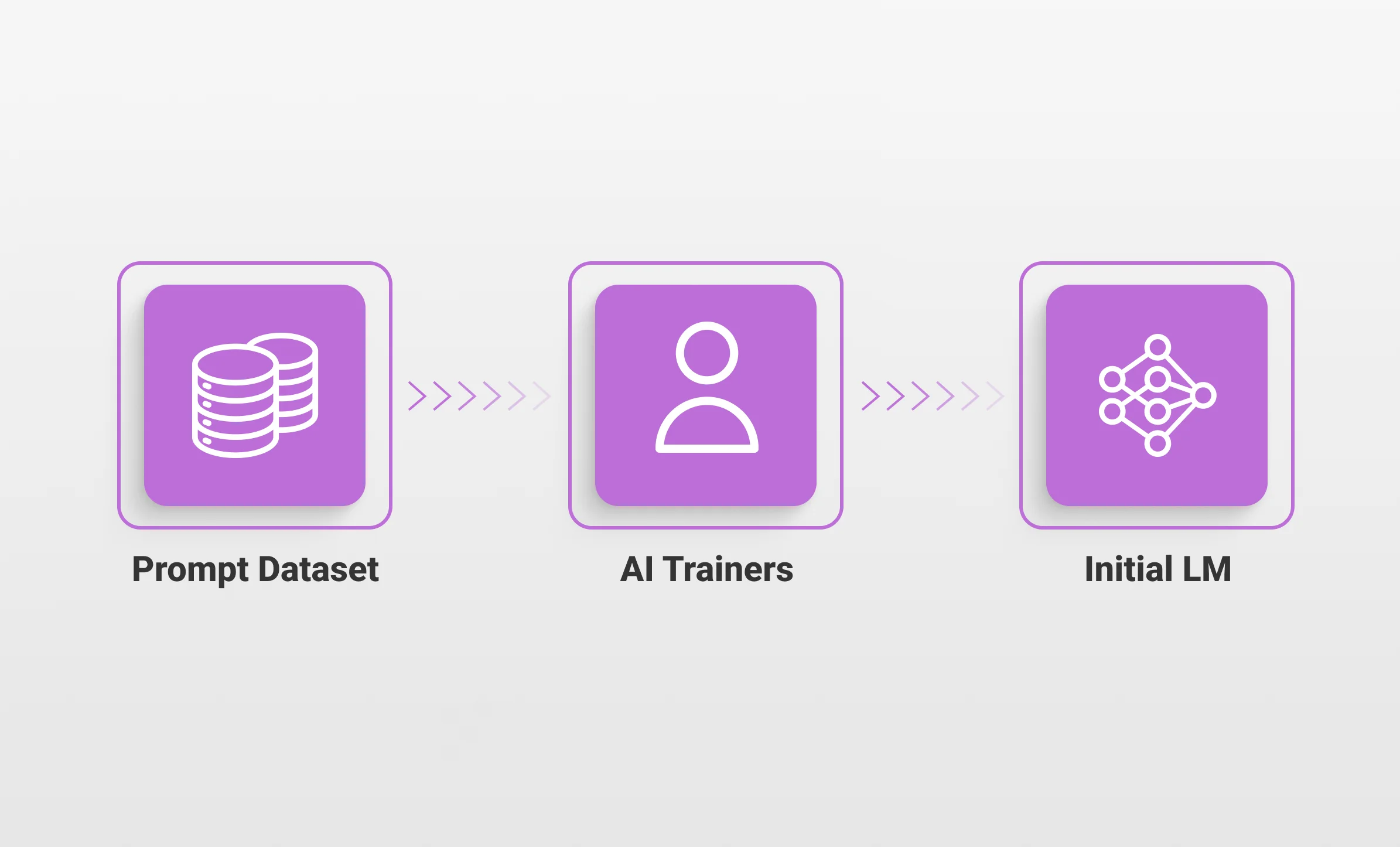

Implementing RLHF in language models involves steps. Initially experts train the model on a dataset using machine learning techniques. Subsequently the model undergoes tuning where individuals provide feedback on its generated output. This feedback helps adjust the models settings through reinforcement learning systems enabling it to generate responses that meet human expectations. For instance if the model produces a biased response human trainers can identify it for improvement so that the model avoids errors in the future. This iterative process of input and machine adaptation results in a refined and reliable language model of assisting users more effectively in tasks ranging from customer support to storytelling.

Exploring the Fundamentals of RLHF

RLHF AI commences, with a trained model that has been trained on extensive datasets using conventional supervised learning methods. Subsequently it transitions into the RLHF phase where human evaluators assess its output and provide feedback. The feedback is then used to provide signals that help the model learn. For instance if a language model generates a response that a human evaluator deems satisfactory and contextually relevant the model receives feedback. On the hand , if the response is deemed, off topic the model receives negative feedback. Through processes of receiving feedback and making adjustments the model learns to generate output that aligns with expectations and ethical standards.

By incorporating input RLHF addresses some of the limitations of algorithm driven training methods, such as outcomes and responses that are disconnected from the context. This approach enables models to grasp nuanced aspects of communication resulting in AI systems that’re more robust, reliable and sensible. This technique proves valuable in domains where human values and ethical considerations hold importance by ensuring that AI behaviors remain consistent, with norms and user preferences.

The Importance of Human Feedback in Training LLMs

The importance of reinforcement learning with human feedback in training large language models (LLMs) cannot be overstated, as it plays a critical role in refining these models to better align with human values, expectations, and ethical standards. While LLMs are initially trained on massive datasets that encompass a wide range of texts from the internet, this data often contains biases, inaccuracies, and contextually inappropriate content. Purely algorithmic training can result in models that perpetuate these issues, leading to outputs that may be technically accurate but socially or ethically problematic. Human feedback addresses these shortcomings by providing nuanced guidance that algorithms alone cannot achieve.

Supplementing the training with human feedback is helpful to the LLM as it enables the LLM to learn from human experience and endorsement. Human evaluators can remove bias in process, filter out obscene stuff, and make suggestions that may be beyond the computation of AI. It aids in making certain that the models create meaningful and syntactically correct text while at the same time creating outputs that are ethical, culturally sensitive, and conforming to the current standards of the society. For instance, when a language model provides an offensive/biased response, corrections will prevent the model from saying such things in the future leading to responsible AI actions. This is a cyclical process of feedback necessary for creating LLMs that are not only effective but also reliable and created with the focus on the principles that can be considered valuable by humans.

Methods of Implementing RLHF in Language Models

Implementing human feedback reinforcement learning in language models involves a series of structured steps designed to integrate human input effectively into the model’s training process. One common method begins with a pre-trained language model that has been initially trained on large, diverse text datasets. This pre-trained model serves as the baseline. The next step involves generating outputs from this model, which are then reviewed by human evaluators. These evaluators provide feedback by ranking the outputs based on quality, relevance, and appropriateness. The feedback is then used to fine-tune the model through reinforcement learning algorithms, where the model is rewarded for producing desirable outputs and penalized for generating undesirable ones.

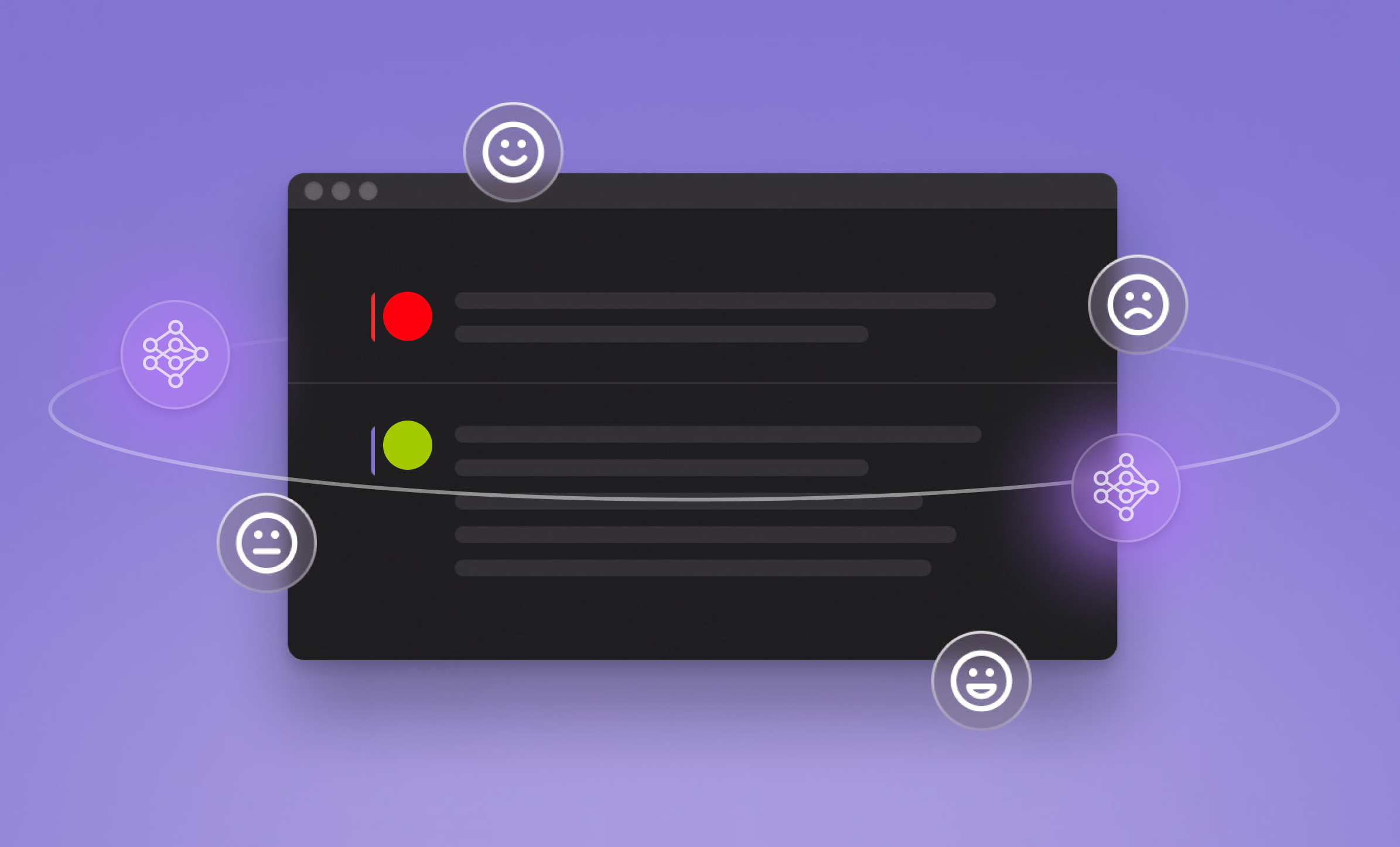

Particularly, another technique is called preference learning. In this case, pairs of the outputs produced with the help of the model are shown to human raters; in turn, these raters have to mark which of the two outputs is preferable in the given context. This preference data is then used to train a reward model which estimates what humans would prefer. The language model is then trained using reinforcement learning, the guidance being in the form of the reward model. It assists in updating the language model to be in a better alignment with human preferences and, thus, values. Also, there are repeated training processes, during which the model provides new outputs, receives feedback, and is consequently amended. Thus, this constant process helps preserve the correspondence of the proposed model with new people’s expectations and behaviors.

Challenges and Solutions in RLHF for LLMs

Reinforcement learning human feedback in large language models presents several significant challenges. One primary challenge is ensuring the consistency and quality of human feedback. Human evaluators may have varying levels of expertise, biases, and perspectives, which can lead to inconsistent feedback that complicates the model’s learning process. To address this, it is crucial to establish rigorous training programs for evaluators, standardizing the feedback protocols to ensure uniformity and reliability. By creating clear guidelines and comprehensive training materials, organizations can help evaluators provide more consistent and high-quality feedback, which in turn helps the model learn more effectively.

Another key issue is how to incorporate human evaluations in the training of the massive-scale models. Considering the number of data samples and the specificity of LLMs, it is ineffective to use only qualified assessors for such evaluation. To avoid this, however, more sophisticated forms of supervised training include active learning and semi-supervised learning. In active learning the student selects the most informative data that should be run to the teacher to get feedback hence increasing the value of every feedback given. Semi-supervised learning makes use of relatively little amount of labeled data (which is given by the human volunteers/evaluators) together with a large amount of unlabelled data for better outcomes. Also, the implementation of other automated utilities which could help in pre-processing and filtering of various model productions before being passed on to human review would help to optimize the use of human resources in the feedback loop. Pool these solutions together in that they aid in the scaling of the RLHF process, which is consequently more realistic and productive in its capability to train complex and advanced LLMs.

Case Studies: Successful Applications of RLHF in LLMs

Case studies of human reinforcement learning in large language models (LLMs) highlight the transformative impact of this approach on improving model performance and alignment with human values. One notable example is OpenAI’s development of GPT-3 and GPT-4, where RLHF was employed to fine-tune the models. By integrating human feedback into the training loop, these models were able to produce more accurate, contextually relevant, and ethically aligned responses. Human evaluators provided feedback on various outputs, which helped the models learn to avoid generating biased or inappropriate content. This iterative feedback process significantly improved the models’ ability to handle complex and sensitive topics, resulting in safer and more reliable AI applications.

One of the application areas that have been successfully applied to RLHF is the customer service chatbots. Such an approach has been used by companies such as Anthropic to improve their computer aided customer support. With the incorporation of data from humans, these chatbots have been modernized to handle customers’ inquiries much more humanely and efficiently. Mainly, the chatbot’s responses were reviewed and rated by evaluators for their relevance and usefulness, and the information provided was used to improve the models. This has also enabled greater user understanding and thus, the ability to get the right information to the right customer at the right time and defuse an otherwise annoying interaction. The incorporation of RLHF in these applications not only enhances the customers’ experience, but also highlights the significance of human-in-the-loop techniques in building better and safer AI solutions.

Measuring the Effectiveness of RLHF in Language Learning

Measuring the effectiveness of LLM reinforcement learning involves a multi-faceted evaluation framework that assesses both qualitative and quantitative aspects of model performance. One key metric is the accuracy and relevance of the generated responses, which can be evaluated through automated metrics such as BLEU, ROUGE, and perplexity scores, as well as human assessments of response quality.

Further, refinements of the model’s compliancy to human values and ethical práctises are again significant markers of success. This can be measured through satisfaction of users’ feedback forms, correspondence of outputs to the ethical criteria set in advance and the overall decrease of discriminatory or gross inadequate results. A/B testing of two versions of the model, for instance, can help in evaluating the extent based on which the RLHF process has improved the model’s functions. With all of these methods in place, developers are able to receive a broad estimate of how well RLHF is training the model and enhancing its alignment with what people expect.

Future Directions in RLHF AI

Future directions for reinforcement learning from human feedback are expected to increase the utility and ethical character of RLHF models even further. An area for potential development lies in the feedback mechanisms’ developments beyond ‘user feedback’, to, for example, real-time user interaction and in a contextual sense, where timely and potentially continuous feedback could be seen as providing more meaningful cues to the AI systems. Furthermore, technical challenges related to the scalability of large models with complex training processes will be addressed by further developments in tools and techniques for collecting feedback, such as the crowdsourcing and automatic or semi-automatic feedback. Furthermore, investigations into whether the stability and bias of AI are improved in the context of RLHF will also be important for exploring issues of fairness in AI further. Furthermore, integrating RLHF with emergent approaches like transfer learning and meta-learning, may also enable the development of models that not only learn from human instruction more effectively but also across tasks and/or domains more effectively. In the future, RLHF will play an even more important role in the development of AI technologies that are robust and ethical.

Resources and Tools for RLHF Implementation

Implementing reinforced learning from human feedback involves a variety of resources and tools designed to facilitate the integration of human input into AI training processes. Key resources include specialized frameworks and libraries, such as OpenAI’s RLlib and Hugging Face’s Transformers, which provide robust support for reinforcement learning and model fine-tuning.

The tools for managing human feedback, for example, Amazon Mechanical Turk and Scale AI, have become prolific in the collection of evaluative data at scale. Quality control for human feedback and evaluator consistency can be provided through platforms with evaluator training and feedback standardization, such as Prolific or Appen. Furthermore, a variety of data analytics and visualization tools are available that can help to monitor and understand model performance and feedback impact, some of which include TensorBoard or Weights & Biases. These resources and tools together make implementing RLHF more efficient and allow for developers to build more responsive, accurate, and ethically conscious AI systems.

Conclusion

By looking at how RLHF has been added to the big and advanced LLMs, this work is the next step. Plus with human control in RLHF you get better specificity and usefulness of the model’s outputs and reduces the risk of the AI and ML systems to misbehave or cause harm as it aligns with human values and ethics. As these approaches are further developed and refined RLHF will bring more solutions to AI and we will get better models that are more human-like. So the changes in RLHF must prove the importance of human input in directing the progress of AI and that AI is in a technical and ethical improvement.