How Co-Training Can Help Make Progress in Autonomous Driving

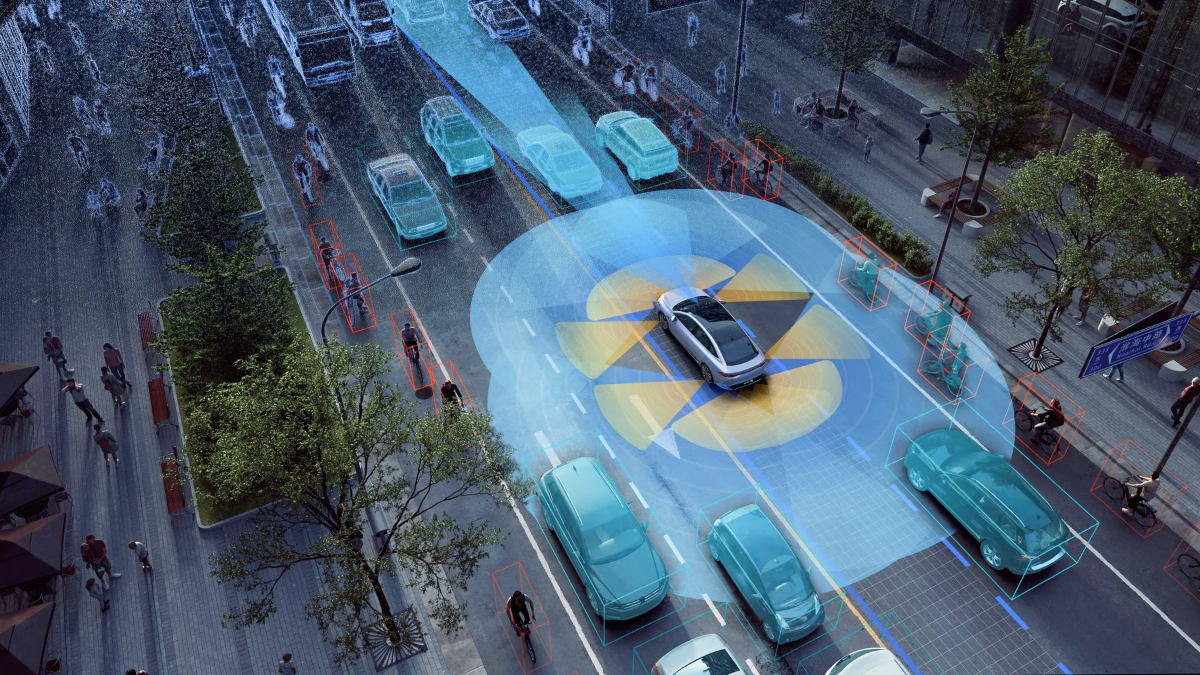

Autonomous vehicles are exciting and terrifying at the same time because they have to accurately sense and navigate a constantly changing environment. An important component of autonomous driving is computer vision, which uses computation to extract information from imagery. It can be used for low-level tasks like figuring out how far away a location is from the car, or for higher-level tasks like figuring out whether a pedestrian is on the road.

In this article, we will talk about how self-driving cars can be trained to understand the data annotation needed to create them.

What is Co-Training?

Co-training is, essentially, using two techniques simultaneously to train a machine learning algorithm. For example, one research team at the Washington University in St. Louis used the following two techniques: stereo matching and optical flow. Stereo matching is an essential step in depth estimation for obstacle avoidance, producing maps of disparities between two images. In order to determine how objects and the camera are moving, optical flow, which estimates per-pixel motion between video frames, is helpful.

This is a new training approach using image-to-image translation between artificial and natural image domains for optical flow estimation and stereo matching. Our method uses only ground-truth information from synthetic images to train models that perform well in real-world image scenarios. In order to support both task-specific component training and task-agnostic domain adaptation, we present a bidirectional feature warping module that can handle left-right and forward-backward directions. Test results demonstrate competitive performance compared to prior domain translation-based techniques, supporting the usefulness of our suggested framework that successfully combines the advantages of optical flow estimation, stereo matching, and unsupervised domain adaptation.

What is the Benefit of Using Co-Training for Machine Learning?

When an autonomous vehicle is on the road, it needs to be able to perform a wide range of things, such as locating pedestrians and cars, deciding road affordability for driving, detecting the lanes to precipitate a driving action, and many other things. In addition to cutting down on training and inference times, concurrent learning of several tasks can serve as a regularizer to ensure that generalizable representations are learned. It is suggested that an all-in-one architecture, in addition to being simple and efficient, can improve the robustness of the driving system by inadvertently discovering the relationships between disparate tasks.

Having said that, it is important to note that a wide range of non-trivial factors, such as model architectures, data augmentations, convergence properties, hyperparameters, and many others, affect how well multitask learning performs. Over time, domain shifts that degenerate the performance of visual models have made it more difficult to provide ground truth supervision for training visual models. This was the case when visual tasks relied on hand-crafted features and shallow machine learning. Because deep learning is data-hungry, the issue still exists within the deep learning paradigm despite its unprecedented performance gains. The co-training method could help overcome these limitations and make autonomous vehicles a reality.

What Types of Data Annotations are Required for Autonomous Vehicles?

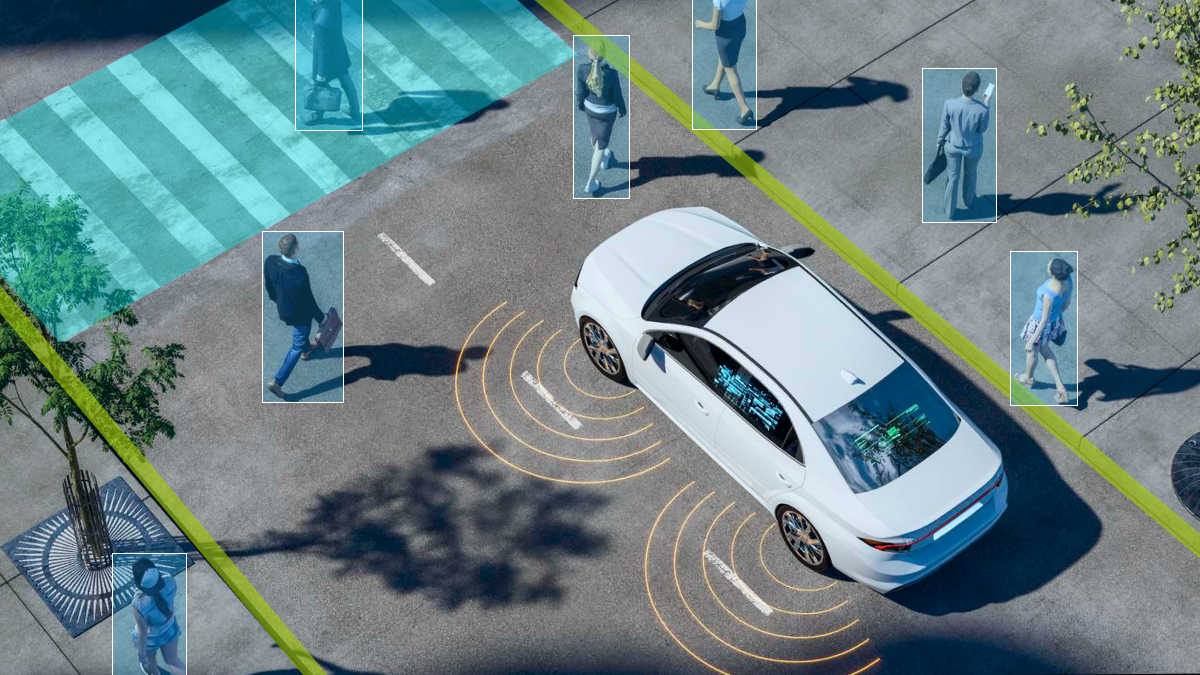

One of the main types of data annotation used in the automotive industry is polyline annotation. This method is applied to video and images to define linear structures. It traces the shape of structures like pipelines, railroad tracks, and roads using tiny lines joined at vertices. Using labeling tools and annotation platforms, annotators apply these lines to images. It is necessary to identify these lines in every frame of the video training data.

Semantic segmentation will also be necessary, which is a deep learning algorithm that gives each pixel in an image a label or category. It is employed to identify a group of pixels that make up different categories. It is used to identify cars, pedestrians, traffic signs, pavement, and other road features in the automotive vertical.

Trust Mindy Support With All of Your Data Annotation Needs

Mindy Support is a global provider of data annotation services and is trusted by Fortune 500 and GAFAM companies. With more than ten years of experience under our belt and offices and representatives in Cyprus, Poland, Romania, The Netherlands, India, OAE, and Ukraine, Mindy Support’s team now stands strong with 2000+ professionals helping companies with their most advanced data annotation challenges.