Can Autonomous Vehicles Make Common Sense Inferences?

When we are behind the wheel, we constantly anticipate what other drivers may or may not do. Today’s autonomous vehicles largely lack this kind of reasoning. However, researchers in Germany and India have combined reasoning with machine learning to overcome some of the obstacles that keep autonomous vehicles from becoming mainstream. Let’s take a closer look at their methodology to understand how it brings fully autonomous vehicles a step closer to the road.

Implementing Visual Sensemaking in Autonomous Vehicles

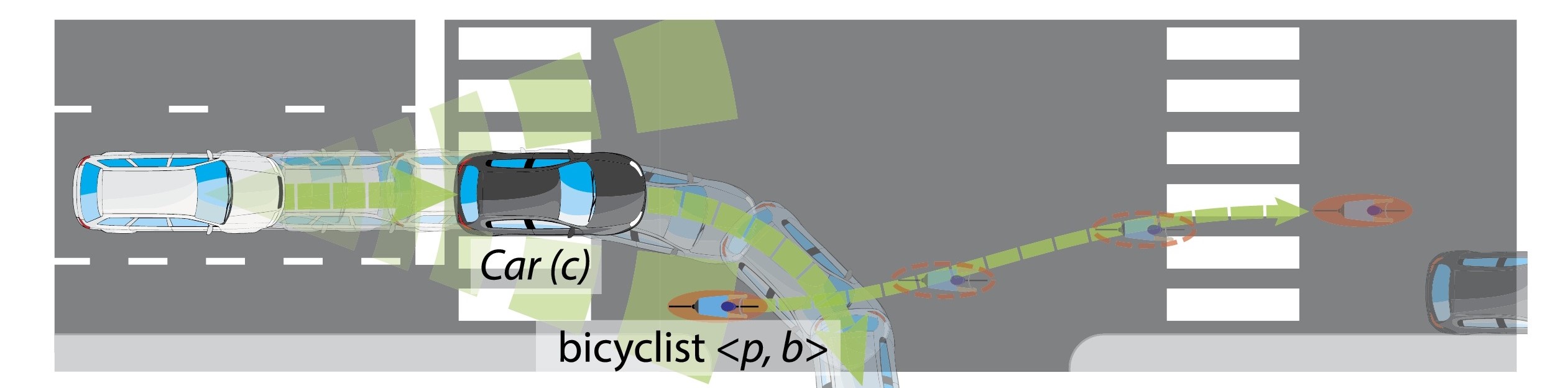

To better understand the kinds of decisions autonomous vehicles will have to make on the road, let’s explore the image below:

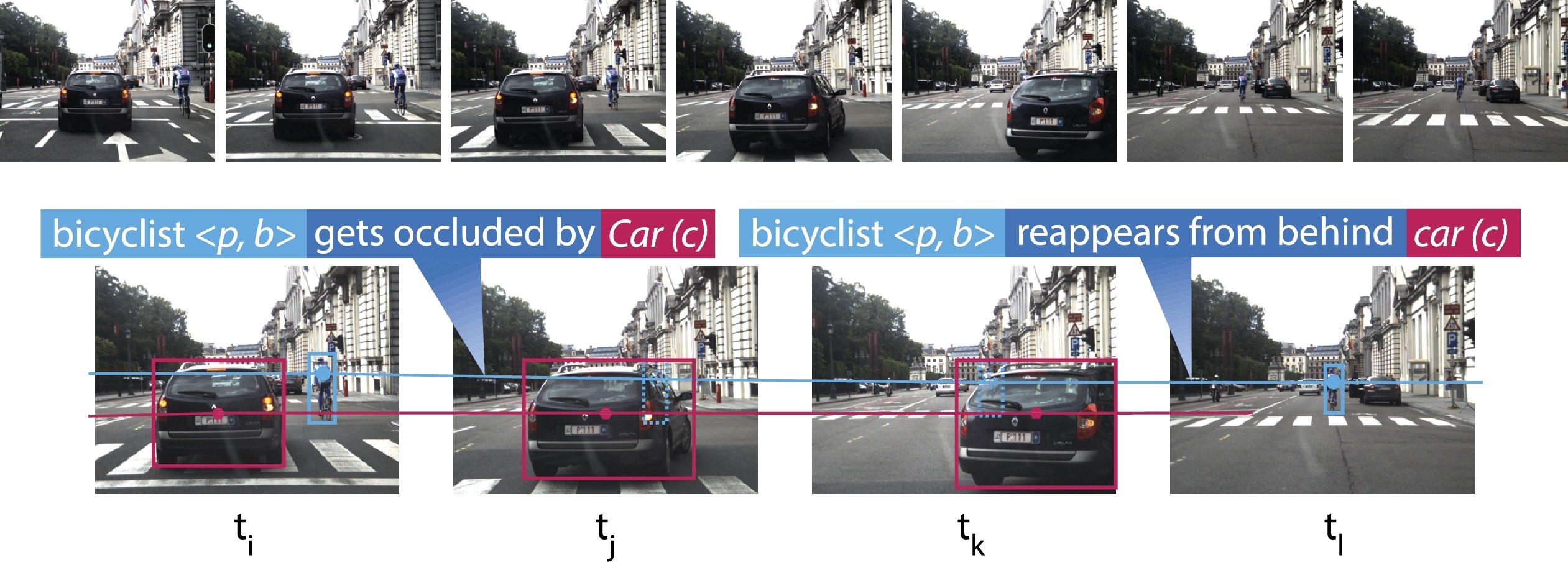

In this image, a car (c) is about to turn right while a cyclist to its right continues straight ahead. As car (c) turns, it will temporarily occlude the cyclist, making it difficult for the white car behind it to decide what to do: a human driver would assume the cyclist is still there even while it is out of view, but a vehicle that reasons only about what it currently sees might not. This scenario is illustrated in the image below:

Researchers approach this problem by combining sensors such as LiDAR, radar, and cameras with object detection, lane detection, and semantic segmentation to track the motion of each object and determine the right decision for each scenario.
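The article does not describe the researchers’ method in code. As a much simpler illustration of the occlusion problem (not their approach), here is a minimal Python sketch of a tracker that keeps an occluded object alive by assuming it keeps moving at its last observed velocity. The `Track` class, the 0.1 s frame interval, and the `max_missed` limit are hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Track:
    """Hypothetical 2D track using a constant-velocity motion model."""
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    missed: int = 0  # consecutive frames with no matching detection

    def step(self, detection: Optional[Tuple[float, float]], dt: float = 0.1) -> None:
        if detection is None:
            # Occluded: predict the position instead of forgetting the object.
            self.x += self.vx * dt
            self.y += self.vy * dt
            self.missed += 1
        else:
            # Observed: estimate velocity from the displacement, then snap to the detection.
            new_x, new_y = detection
            self.vx = (new_x - self.x) / dt
            self.vy = (new_y - self.y) / dt
            self.x, self.y = new_x, new_y
            self.missed = 0

    def alive(self, max_missed: int = 10) -> bool:
        # Keep the track until it has been unseen for too many frames.
        return self.missed <= max_missed


# A cyclist seen twice, then hidden behind the turning car for three frames.
cyclist = Track(x=3.0, y=0.0)
cyclist.step((3.0, 0.5))
for _ in range(3):
    cyclist.step(None)
print(cyclist.x, cyclist.y, cyclist.alive())
```

Dropping a track only after several missed frames is the key design choice: a tracker that deleted the cyclist the moment it disappeared behind car (c) would effectively tell the white car that the lane is clear.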

How Do LiDAR, Radar, and Cameras Facilitate Autonomous Driving?

LiDAR technology is central to the development of autonomous vehicles and is used by leading self-driving car developers such as Waymo, Hyundai, Kia, and many others. LiDAR (light detection and ranging) works much like sonar, except that it emits pulsed laser light instead of sound and measures how long each pulse takes to return, which gives the distance to surrounding objects. This is what allows a car to determine how far it is from other objects on the road and to navigate its environment in real time. LiDAR is also well suited to 3D mapping, so vehicles returning to an area they have already mapped can navigate it predictably, a significant benefit for most self-driving technologies.
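The distance measurement follows from time of flight: each pulse travels to the object and back, so the range is d = c × t / 2, where c is the speed of light and t is the round-trip time. The sketch below computes that range and converts it, together with the beam’s azimuth and elevation angles, into one x, y, z point of a point cloud. The function names and the axis convention (x forward, y left, z up, azimuth measured counter-clockwise from straight ahead) are assumptions for illustration, not any particular sensor’s API.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the target; the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_cartesian(distance_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one spherical LiDAR return into an (x, y, z) point (assumed axis convention)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (
        distance_m * math.cos(el) * math.cos(az),
        distance_m * math.cos(el) * math.sin(az),
        distance_m * math.sin(el),
    )


# A pulse that returns after 200 nanoseconds hit something about 30 m away.
d = range_from_time_of_flight(200e-9)
print(round(d, 3), to_cartesian(d, azimuth_deg=0.0, elevation_deg=0.0))
```

Repeating this for every pulse across a full sweep of azimuth and elevation angles is what produces the 3D point cloud discussed later in this article.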

Cameras are also used in autonomous vehicles, most notably by Tesla. Autonomous vehicles rely on cameras placed on every side (front, rear, left, and right) to stitch together a 360-degree view of their environment. Some cameras have a wide field of view, as much as 120 degrees, and a shorter range; others have a narrower view that provides long-range visuals. Some cars also integrate fish-eye cameras, whose super-wide lenses provide a panoramic view, so the vehicle can see everything behind it when it parks itself.
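Whether a set of cameras really adds up to a 360-degree view depends on each camera’s heading and field of view. This sketch checks coverage one whole degree at a time; the four-camera layout in the example is hypothetical, not any manufacturer’s actual configuration, and headings are measured clockwise from straight ahead.

```python
def uncovered_degrees(cameras):
    """Return whole-degree bearings (0-359) that no camera sees.

    Each camera is a (heading_deg, fov_deg) pair. 0 is straight ahead and
    angles increase clockwise (an assumed convention for this sketch).
    """
    gaps = []
    for bearing in range(360):
        # Signed angular difference in [-180, 180) handles wrap-around at 0/360.
        seen = any(
            abs((bearing - heading + 180) % 360 - 180) <= fov / 2
            for heading, fov in cameras
        )
        if not seen:
            gaps.append(bearing)
    return gaps


# Hypothetical layout: wide front and rear cameras, narrower side cameras.
layout = [(0, 120), (90, 90), (180, 120), (270, 90)]
print(uncovered_degrees(layout))  # an empty list means full 360-degree coverage

front_and_rear_only = [(0, 120), (180, 120)]
print(len(uncovered_degrees(front_and_rear_only)))
```

Dropping the side cameras from this layout leaves blind spots on both sides of the car (bearings 61 to 119 and 241 to 299), which is exactly the kind of gap the additional cameras are there to close.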

Radar is also used in autonomous vehicles, but it has limitations. Most notably, when radio waves are transmitted and bounce off objects, only a small fraction of the signal is reflected back to the sensor. As a result, vehicles, pedestrians, and other objects appear as a sparse set of points. Radar data is also noisy: random points that do not belong to any object commonly appear in radar images. The sensor can also pick up so-called echo signals, which are reflections of radio waves that arrive indirectly (for example, after bouncing off another surface) rather than directly from the objects being detected.
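One common way to suppress isolated noise points is a neighbor-count filter: a return is kept only if enough other returns lie nearby. The sketch below is a simple, quadratic-time version of that idea in 2D, with hypothetical `radius` and `min_neighbors` parameters; it is an illustration of the concept, not the filtering any production radar pipeline uses.

```python
import math


def remove_isolated_points(points, radius=1.0, min_neighbors=1):
    """Keep (x, y) points that have at least `min_neighbors` other points within `radius` metres."""
    kept = []
    for i, (xi, yi) in enumerate(points):
        neighbors = sum(
            1
            for j, (xj, yj) in enumerate(points)
            if j != i and math.hypot(xi - xj, yi - yj) <= radius
        )
        if neighbors >= min_neighbors:
            kept.append((xi, yi))
    return kept


# Three returns from a car plus one stray noise point far away.
returns = [(10.0, 2.0), (10.5, 2.2), (10.3, 1.8), (25.0, -7.0)]
print(remove_isolated_points(returns))
```

Because radar returns are already sparse, the thresholds are a trade-off: set them too strictly and genuine returns from a small or distant object are discarded as well. A filter like this also does nothing about echo signals, which can form consistent clusters of their own.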

LiDAR and Camera Data Require Data Annotation

LiDAR creates a 3D point cloud, a digital representation of how the car sees the physical world. However, this point cloud has to be annotated with methods such as labeling, 3D segmentation, and semantic segmentation, among others, to teach machine learning algorithms to identify the objects on the road. Video data also needs to be annotated with 2D/3D bounding boxes, tags, lines and splines, and other methods. Video annotation can be very time-consuming because each frame often needs to be annotated, and at a frame rate of 30 frames per second (fps), or even 60 fps, you can imagine how long it would take to annotate all of this data.
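The arithmetic behind that estimate is simple: frames = duration × frame rate. The sketch below uses a hypothetical figure of 60 seconds of annotation work per frame purely to show the scale; real per-frame effort varies widely with scene complexity and annotation type.

```python
def frames_to_annotate(duration_s: float, fps: int) -> int:
    """Number of frames in a clip when every frame must be labeled."""
    return round(duration_s * fps)


def annotation_hours(duration_s: float, fps: int, seconds_per_frame: float) -> float:
    """Total labeling time in hours; seconds_per_frame is an assumed input, not a measured value."""
    return frames_to_annotate(duration_s, fps) * seconds_per_frame / 3600


one_hour = 3600  # seconds of driving footage
for fps in (30, 60):
    print(fps, frames_to_annotate(one_hour, fps), annotation_hours(one_hour, fps, seconds_per_frame=60))
```

One hour of footage at 30 fps is 108,000 frames; at 60 fps it is 216,000, which at the assumed 60 seconds per frame works out to 1,800 and 3,600 hours of annotation, respectively.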

Trust Mindy Support With All of Your Data Annotation Needs

As mentioned earlier, in-house data annotation can be very time-consuming, which is why so many companies outsource their data annotation projects to Mindy Support. We are the largest data annotation company in Eastern Europe, with more than 2,000 employees in eight locations across Ukraine and in other regions around the world. Contact us today to learn more about how we can help you.