Can a Machine Learn New Skills Like a Human? Google Says Yes!

Google has recently unveiled a new AI architecture, called Pathways AI, that is designed to handle a much wider array of tasks than the narrowly focused, single-purpose models in wide use today. With Pathways, Google envisions stitching today’s specialized systems together into one multimodal generalist. That flexibility might enable the AI to work more like a human brain, with all the benefits and drawbacks that such a neural network implies. Let’s take a closer look at Pathways AI to understand what makes it such a breakthrough and what its capabilities may include.

What’s So Great About This New Architecture?

Today, ML models are generally trained to perform one specific task. Even a multi-functional AI system, such as an autonomous vehicle, is made up of many ML algorithms, each of which performs a particular task. For example, some pattern recognition algorithms are designed to rule out unusual data points. Recognizing patterns in a data set is an important step before classifying objects, and these algorithms can also be described as data reduction algorithms.
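To make the “rule out unusual data points” step concrete, here is a minimal Python sketch of one simple way to do it: a z-score filter that drops readings far from the mean. The function name `drop_outliers`, the threshold of 2.0, and the sample distance readings are illustrative assumptions, not how any particular autonomous vehicle works; production perception systems use far more robust methods.

```python
from statistics import mean, stdev


def drop_outliers(values, z_threshold=2.0):
    """Keep only values whose z-score is at most z_threshold.

    Illustrative stand-in for an "unusual data point" filter; the
    threshold is an assumption, not a recommended setting.
    """
    if len(values) < 2:
        return list(values)  # stdev needs at least two points
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return list(values)  # all values identical, nothing to drop
    return [v for v in values if abs(v - mu) / sigma <= z_threshold]


# Hypothetical distance readings (metres) containing one spurious spike.
readings = [10.1, 10.3, 9.9, 10.0, 10.2, 55.0, 10.1]
cleaned = drop_outliers(readings)
print(cleaned)
```

With only seven readings, the spike scores a z of roughly 2.27, so it is removed while the other six pass. Note that the outlier itself inflates the standard deviation, which is one reason more robust statistics are often used in practice.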

There are also clustering algorithms that can take low-resolution images, sparse data points, or discontinuous data and still detect and locate an object. This matters because the images the system captures are sometimes unclear, which makes objects hard to detect and locate, and the classification algorithms may miss an object entirely and fail to report it to the system. Whatever the cause, a clustering algorithm helps the system piece together disparate data points into understandable information.
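The idea of piecing scattered points together into objects can be sketched with a very small clustering routine. The Python below assumes 2D points and a hypothetical distance threshold `eps`: two points belong to the same cluster if a chain of neighbours, each within `eps` of the next, connects them (single-linkage grouping, similar to DBSCAN with a minimum cluster size of one). Each cluster’s centroid then serves as a rough object location. The point coordinates are invented for illustration; real systems work on far richer sensor data.

```python
import math


def cluster_points(points, eps):
    """Label 2D points so that points linked by a chain of neighbours
    closer than or equal to eps share a cluster id (single linkage)."""
    labels = [-1] * len(points)  # -1 means "not yet assigned"
    cluster_id = 0
    for start in range(len(points)):
        if labels[start] != -1:
            continue
        # Depth-first expansion from an unassigned point.
        labels[start] = cluster_id
        stack = [start]
        while stack:
            i = stack.pop()
            for j in range(len(points)):
                if labels[j] == -1 and math.dist(points[i], points[j]) <= eps:
                    labels[j] = cluster_id
                    stack.append(j)
        cluster_id += 1
    return labels


def cluster_centroids(points, labels):
    """Average position of each cluster, a rough 'object location'."""
    sums = {}
    for (x, y), label in zip(points, labels):
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}


# Hypothetical sparse detections: two objects plus one isolated point.
points = [(0.0, 0.0), (0.5, 0.2), (1.0, 0.1), (10.0, 10.0), (10.4, 9.8), (5.0, 5.0)]
labels = cluster_points(points, eps=0.8)
centroids = cluster_centroids(points, labels)
print(labels)
print(centroids)
```

The first and third points are more than `eps` apart, yet they land in the same cluster because the second point links them; that chaining is what lets scattered detections add up to one object.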

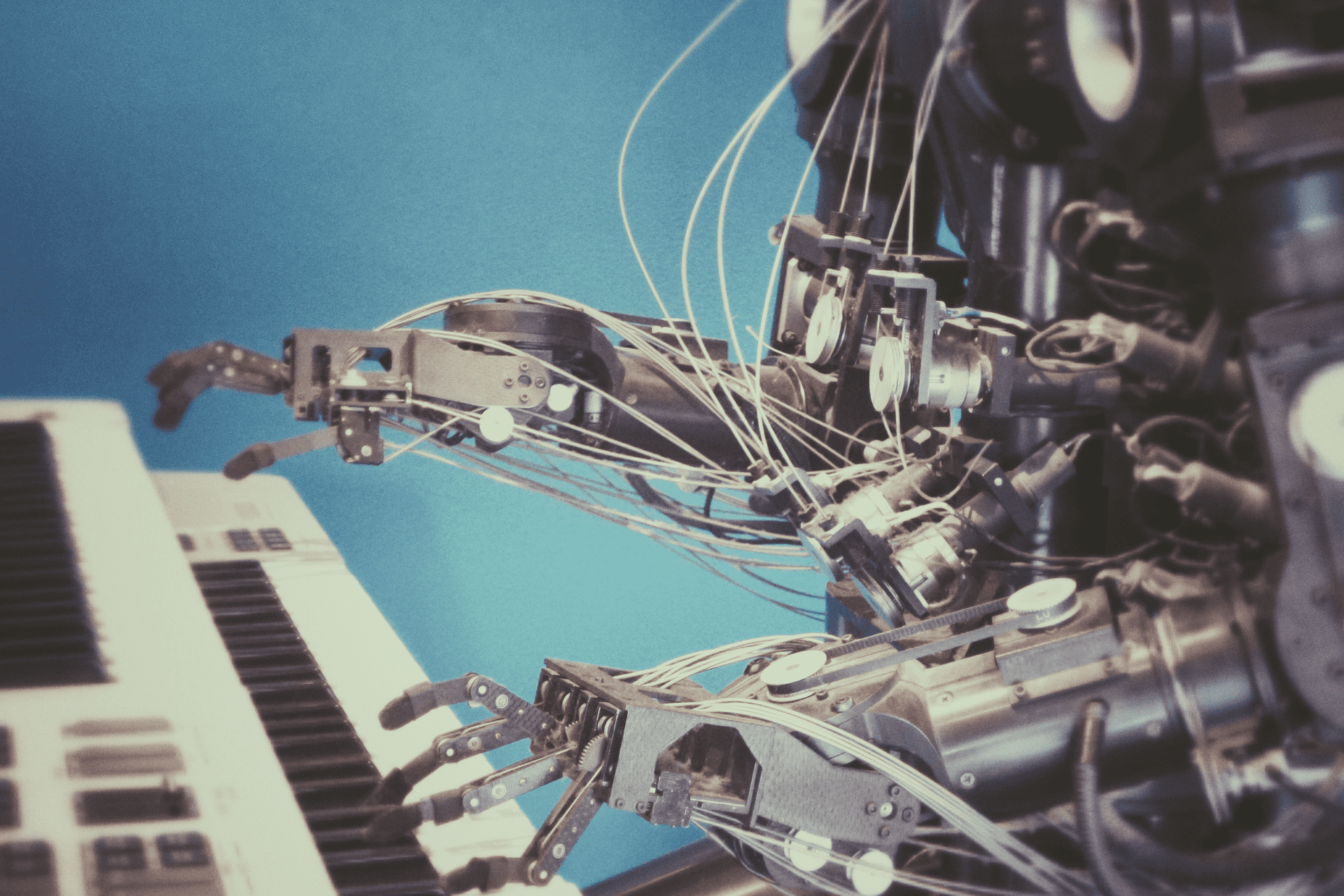

Now imagine a system that does not need to rely on one set of algorithms for classification, another set to organize the data into groups with the most in common, a third to make decisions, and so on. What if a single model could do thousands or even millions of things, and draw on and combine its existing skills to learn new tasks faster and more effectively? This is essentially what the human brain can do. With the release of Pathways AI, Google engineers have taken a step closer to giving machines such human-like capabilities.

What New Capabilities Can We Expect From Pathways AI?

According to Google, Pathways AI can condense all of those single-purpose algorithms into one versatile neural network that can multitask, both in the duties it performs and in how it learns. There would no longer be a need to train a new model from scratch to do one thing only, and then start over every time you want to add a new feature. Imagine an AI system that can take the skills it has already learned and use that knowledge to learn new ones.
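One common way to let a network reuse what it has already learned is a shared encoder feeding several small task-specific heads. The sketch below illustrates only that structure; it is not Google’s Pathways implementation. The names `shared_encoder` and `MultiTaskModel` are hypothetical, the encoder is a fixed toy function standing in for learned weights, and each head is a hand-written function rather than a trained layer.

```python
from typing import Callable, Dict, List

Features = List[float]


def shared_encoder(raw: List[float]) -> Features:
    """Hypothetical shared 'trunk' that turns raw input into reusable
    features. A toy fixed transform here; in a real network it is learned."""
    return [sum(raw), max(raw), min(raw)]


class MultiTaskModel:
    """One shared encoder, many small task-specific heads."""

    def __init__(self) -> None:
        self.heads: Dict[str, Callable[[Features], float]] = {}

    def add_task(self, name: str, head: Callable[[Features], float]) -> None:
        # Adding a skill only adds a head; the encoder is reused unchanged.
        self.heads[name] = head

    def run(self, task: str, raw: List[float]) -> float:
        return self.heads[task](shared_encoder(raw))


model = MultiTaskModel()
model.add_task("sum", lambda f: f[0])
model.add_task("range", lambda f: f[1] - f[2])
# A later task builds on the same shared features without retraining the trunk.
model.add_task("mean_of_extremes", lambda f: (f[1] + f[2]) / 2)

print(model.run("sum", [1.0, 2.0, 3.0]))
print(model.run("range", [1.0, 5.0, 3.0]))
print(model.run("mean_of_extremes", [2.0, 8.0, 4.0]))
```

Adding the third task touched only the heads, which is the property that lets a multitask model build on existing skills instead of starting from nothing.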

Today, a typical neural network can process text, audio, or video, but not all three. Google sees Pathways as advanced enough to combine all three kinds of data and understand how they relate to one another, with all three feeding the decision-making within the Pathways neural network. Because it can translate information from one format to another, Pathways could be used on its own or to augment existing systems that cannot collect enough data through a single mode of input. This opens the door to new business possibilities for AI. In fact, research from Accenture suggests that AI could help people use their time more efficiently, increasing labor productivity by up to 40%. Pathways is a big step toward achieving such a goal.
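Combining text, audio, and video into one decision is often done with some form of fusion. The Python sketch below shows weighted late fusion, where each modality first produces its own confidence score and the scores are then blended. The modality weights and scores are made-up values, and Google has not said that Pathways uses this particular scheme. Skipping a missing modality and renormalizing the weights mirrors the idea of a system that still works when one kind of input is unavailable.

```python
from typing import Dict, Optional


def fuse_scores(scores: Dict[str, Optional[float]], weights: Dict[str, float]) -> float:
    """Weighted late fusion of per-modality confidence scores in [0, 1].

    Modalities with no data (None) are skipped and the remaining weights
    are renormalized, so a decision is still possible with partial input.
    """
    used = {m: s for m, s in scores.items() if s is not None}
    if not used:
        raise ValueError("no modality provided a score")
    total_weight = sum(weights[m] for m in used)
    return sum(weights[m] * s for m, s in used.items()) / total_weight


# Hypothetical weights: video is trusted twice as much as text or audio.
weights = {"text": 1.0, "audio": 1.0, "video": 2.0}

full = fuse_scores({"text": 0.9, "audio": 0.6, "video": 0.8}, weights)
partial = fuse_scores({"text": None, "audio": 0.6, "video": 0.9}, weights)
print(full, partial)
```

In the second call the text score is missing, so the decision rests on audio and video alone: (1.0 × 0.6 + 2.0 × 0.9) / 3.0 = 0.8.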

Which Types of Data Annotation Does Pathways AI Require?

Since the purpose of this architecture is to enable multimodal models that encompass vision, auditory, and language understanding simultaneously, it will require text, image, video, and audio annotation. For example, imagine the system is processing the word “automobile”. It would need to respond to someone saying “automobile”, which requires sound labeling. It might also see an automobile in a video and need to identify it, which requires 2D/3D bounding box annotation. Videos, images, text, and audio are the senses it uses to process information and perform the needed tasks.
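A multimodal label for a single concept such as “automobile” could be stored as one record that links every modality together. The Python dataclasses below are a hypothetical schema for illustration only: the class and field names are assumptions, not a Mindy Support or Google format, the timestamps and pixel coordinates are invented, and only 2D boxes are shown (a 3D cuboid would need additional coordinates).

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class AudioSpan:
    """Where a spoken word occurs in an audio clip (seconds)."""
    start_s: float
    end_s: float
    transcript: str


@dataclass
class BoundingBox2D:
    """Axis-aligned box around an object in one video frame (pixels)."""
    frame: int
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


@dataclass
class ConceptAnnotation:
    """Hypothetical record tying one concept to its labels in each modality."""
    label: str
    text_mentions: List[Tuple[int, int]] = field(default_factory=list)  # character offsets
    audio_spans: List[AudioSpan] = field(default_factory=list)
    video_boxes: List[BoundingBox2D] = field(default_factory=list)

    def modalities(self) -> List[str]:
        """Which modalities this concept has been labelled in."""
        present = []
        if self.text_mentions:
            present.append("text")
        if self.audio_spans:
            present.append("audio")
        if self.video_boxes:
            present.append("video")
        return present


sentence = "An automobile stopped at the light."
ann = ConceptAnnotation(
    label="automobile",
    text_mentions=[(3, 13)],
    audio_spans=[AudioSpan(start_s=1.2, end_s=1.9, transcript="automobile")],
    video_boxes=[BoundingBox2D(frame=42, x_min=120.0, y_min=80.0, x_max=360.0, y_max=240.0)],
)
start, end = ann.text_mentions[0]
print(sentence[start:end])
print(ann.modalities())
print(ann.video_boxes[0].area())
```

Keeping all modalities in one record makes it easy to check that a concept has actually been labelled everywhere a multimodal model expects to find it.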

Mindy Support Provides Comprehensive Data Annotation Services

Regardless of the volume or type of data you need annotated, or the complexity of your project, Mindy Support will be able to assemble a team to deliver your project and meet your deadlines. We are the largest data annotation company in Eastern Europe, with more than 2,000 employees in eight locations across Ukraine and in other regions around the world. Contact us today to learn more about how we can help you.