A Driverless Future for Public Transportation

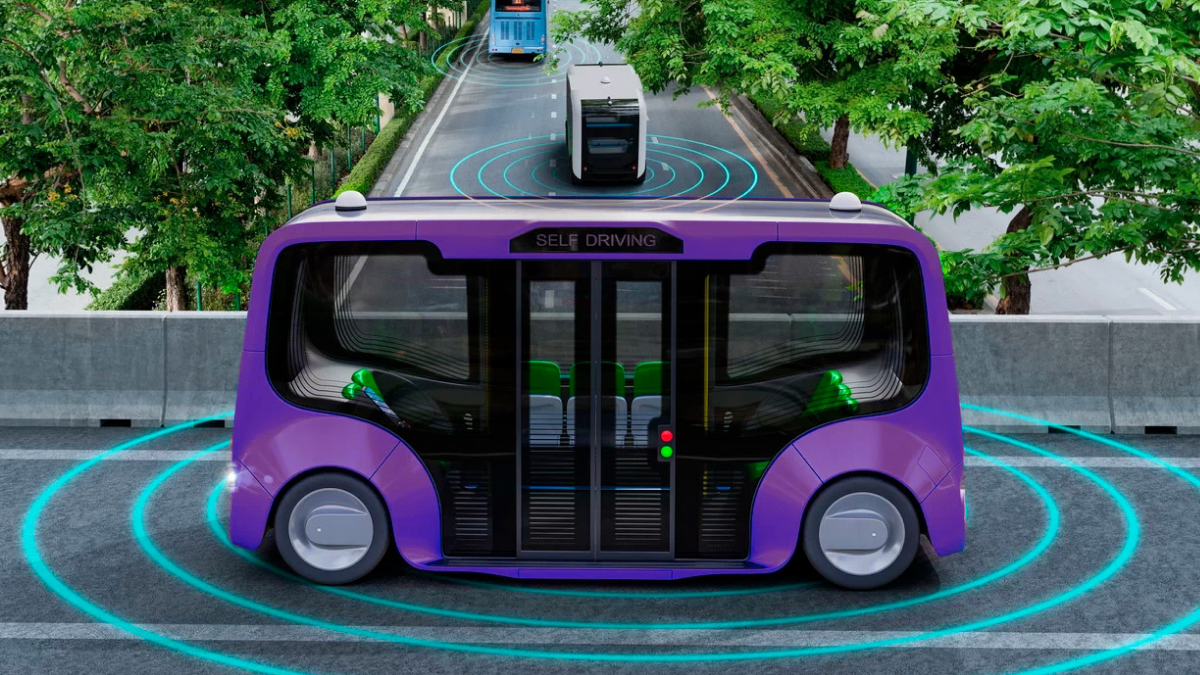

While autonomous cars get most of the attention, there is a good chance we will see self-driving buses on the road before fully autonomous cars, because buses face fewer barriers. Since a bus always operates on a fixed route, the ML algorithms need to understand fewer road scenarios. In addition, a bus does not need to go very fast, and since buses are bigger and heavier, they could feel like a safer setting for passengers to get used to the lack of a human driver. In this article, we will take a closer look at the implementation of autonomous buses and the data annotation required to create them.

Autonomous Buses Will Soon Be on the Streets of Seoul

The Seoul city government launched its first commercial self-driving bus service last month with some electric shuttles operated by a startup backed by Hyundai Motors. This is the latest step in South Korea’s efforts to make autonomous vehicles mainstream. The buses can carry as many as seven passengers and will initially circulate on a 2.1-mile loop around Cheonggye Stream, which is a busy tourist hotspot in downtown Seoul. During the initial launch period, rides will be free, and passengers can hop on the bus at two stops along the route after booking a seat on a mobile app.

These buses rely on 12 cameras and six radar sensors to view the road and build real-time images of cars and pedestrians. What’s also interesting is that the Cheonggye Stream buses are capable of Level 4 automation, which means that they can drive themselves without human intervention within a defined operating area and set of conditions.

Edinburgh is Also Aiming to Implement Autonomous Buses

Another interesting implementation of autonomous buses is in Edinburgh, where Enviro200 buses will soon hit the road. These buses will run on a 14-mile route, longer than the one in Seoul, as part of Stagecoach East Scotland’s scheduled bus network. The five buses will still have safety drivers on board, along with a bus “captain” who will move around the interior, talking to passengers about the autonomous tech on board and answering questions.

The vehicles themselves use an automated drive system by Fusion Processing dubbed CAVstar, which combines LiDAR, radar, and optical cameras.

What Are the Benefits of Autonomous Buses?

One of the biggest advantages of autonomous buses is sustainability. On-demand autonomous shuttles can be booked in advance or when needed, which reduces unnecessary trips carried out by fixed-route buses or buses that serve low-demand areas. Demand and supply of public transport services are better matched, making the most of limited transport resources. In addition, autonomous buses could improve road safety by employing many cameras, radar, mapping software, and LiDAR in and around the vehicle, while smoother, fully autonomous driving may improve fuel efficiency, emissions, and rider comfort.

Improving the safety record of transit buses would lower operating costs through reduced insurance and crash expenses, in addition to the qualitative effects that improved safety can provide. It is estimated that fully autonomous technology can lower overall crash expenses for private vehicles by 40%.

What Types of Data Annotation are Necessary to Create Autonomous Buses?

In the first example, the autonomous buses in Seoul rely on computer vision cameras to see the road and everything around them. Training computer vision algorithms requires techniques ranging from simple tagging to more complex types of annotation, like lines and splines, which allow the AI system to understand road markings. Semantic segmentation may also be necessary to annotate every pixel of an image with an object class. These classes could include other cars on the road, pedestrians, cyclists, and many other things.
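To make the idea of per-pixel labeling more concrete, here is a minimal sketch of what a semantic segmentation mask might look like in Python. The class IDs, the `build_mask` and `class_pixel_counts` helpers, and the use of rectangles in place of hand-drawn regions are all assumptions made for illustration; they do not describe the tooling used for the Seoul buses.

```python
from collections import Counter

# Hypothetical label map; a real annotation project defines its own classes.
CLASSES = {"background": 0, "car": 1, "pedestrian": 2, "cyclist": 3}


def build_mask(height, width, boxes):
    """Return a height x width grid holding one class ID per pixel.

    `boxes` is a list of (class_name, top, left, bottom, right) tuples.
    Rectangles stand in for the free-form regions an annotator would draw.
    Later entries overwrite earlier ones where they overlap.
    """
    mask = [[CLASSES["background"]] * width for _ in range(height)]
    for name, top, left, bottom, right in boxes:
        for row in range(top, bottom):
            for col in range(left, right):
                mask[row][col] = CLASSES[name]
    return mask


def class_pixel_counts(mask):
    """Count how many pixels carry each class label."""
    counts = Counter(label for row in mask for label in row)
    return {name: counts[idx] for name, idx in CLASSES.items()}


# A tiny 6 x 8 "image" with a car and a pedestrian partly in front of it.
mask = build_mask(6, 8, [("car", 1, 1, 4, 5), ("pedestrian", 2, 4, 5, 6)])
counts = class_pixel_counts(mask)
```

Every pixel ends up with exactly one class, which is what distinguishes semantic segmentation from simple tagging or bounding boxes. Counting pixels per class, as `class_pixel_counts` does, is one simple way to check how balanced an annotated dataset is.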

In the second example, the autonomous buses in Edinburgh use LiDAR technology, which creates a 3D Point Cloud. Data annotators would need to annotate these 3D Point Clouds with 2D boxes, cuboids, and semantic segmentation. It may also be necessary to color code the 3D Point Cloud, which allows the AI system to understand the proximity of each object to the LiDAR. For example, objects that are close may be colored blue since this color has a short wavelength. Objects farther away may be red or orange since these colors have long wavelengths.
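The color coding described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `colorize` and `distance_to_rgb` helpers, the 50-meter `max_range`, and the linear blue-to-red blend are hypothetical choices, not the scheme used by CAVstar or any particular annotation tool.

```python
import math


def distance_to_rgb(distance, max_range):
    """Blend from blue (near) to red (far), clamping to [0, max_range]."""
    t = min(max(distance / max_range, 0.0), 1.0)
    return (round(255 * t), 0, round(255 * (1 - t)))


def colorize(points, max_range=50.0):
    """Pair each (x, y, z) point with an RGB color based on its distance.

    The LiDAR sensor is assumed to sit at the origin (0, 0, 0), and
    max_range is an assumed cutoff in meters.
    """
    colored = []
    for x, y, z in points:
        distance = math.sqrt(x * x + y * y + z * z)
        colored.append(((x, y, z), distance_to_rgb(distance, max_range)))
    return colored


# Three sample points: at the sensor, at the range limit, and close by.
points = [(0.0, 0.0, 0.0), (30.0, 40.0, 0.0), (3.0, 4.0, 0.0)]
colored = colorize(points)
```

With this mapping, a point at the sensor comes out pure blue and a point at or beyond `max_range` comes out pure red, with everything in between shading from one to the other. A real pipeline would likely use a richer color map, but the principle of encoding distance as color is the same.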

Trust Mindy Support With All of Your Data Annotation Needs

Mindy Support is a global company for data annotation and business process outsourcing, trusted by Fortune 500 and GAFAM companies, as well as innovative startups. With nine years of experience under our belt and offices and representatives in Cyprus, Poland, Romania, the Netherlands, India, UAE, and Ukraine, Mindy Support’s team now stands strong with 2000+ professionals helping companies with their most advanced data annotation challenges.