Level 4 Autonomous Vehicles: Where Are We Now and What Can We Expect in 2022?

According to the IDTechEx report Autonomous Cars, Robotaxis & Sensors 2022-2042, Level 3 automation capabilities were ready as far back as 2017. This means that even four years ago, we had the technical capability to equip cars with environmental detection and enable them to make informed decisions for themselves, such as accelerating past a slow-moving vehicle. This raises the question: why are autonomous vehicles not yet mainstream? In this article, we will try to answer that question and take a look at the state of Level 4 automation and what the future holds.

What is Level 4 Automation?

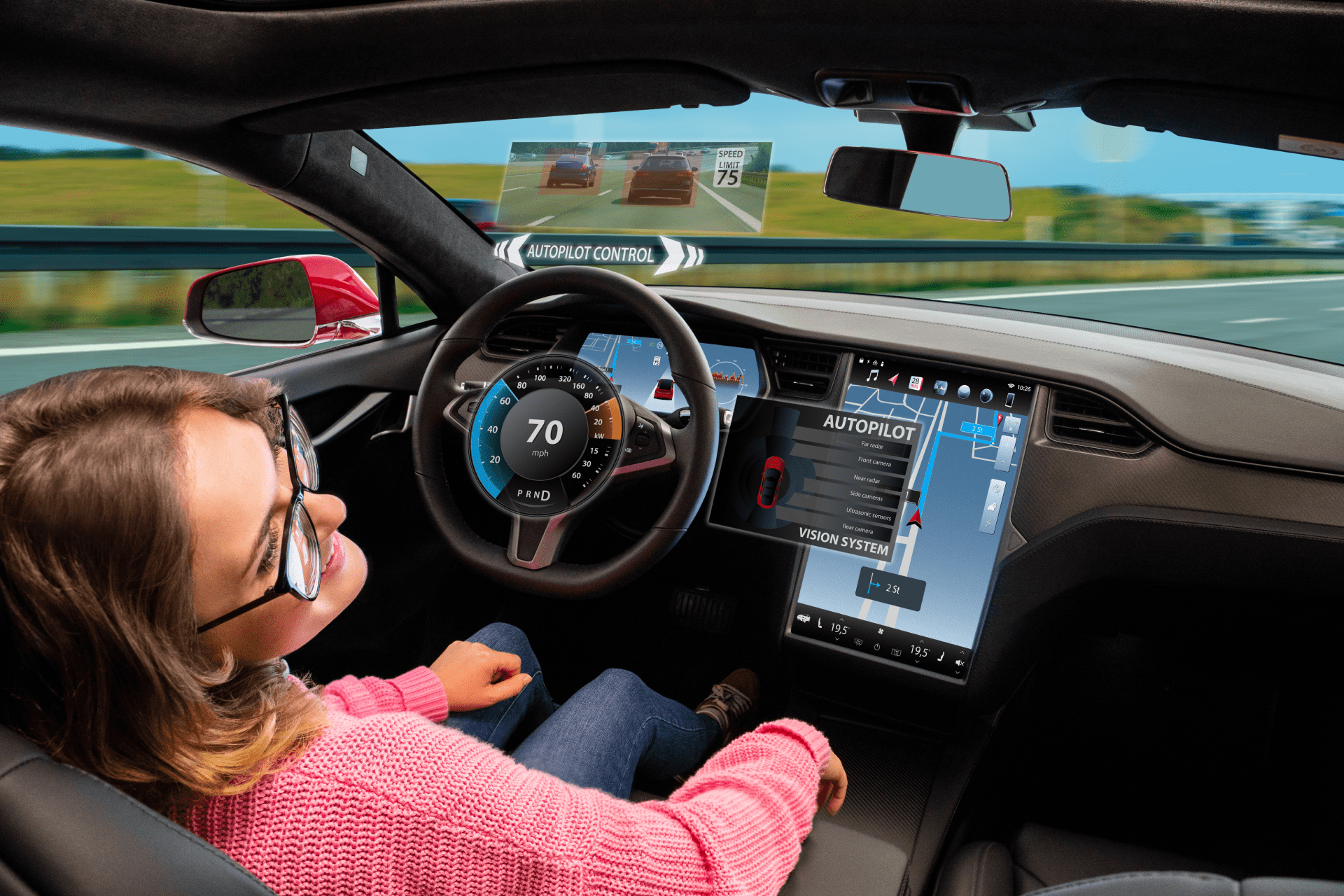

The biggest difference between Level 3, mentioned earlier, and Level 4 is that Level 4 vehicles can intervene themselves if things go wrong or there is a system failure. In this sense, these cars do not require human interaction in most circumstances, although a human still has the option to manually override. In addition to the technical challenges, car manufacturers also have to deal with current laws and regulations, which do not account for possible accidents caused by autonomous vehicles.

To get around this problem, autonomous vehicle developers restrict driverless operation to a bounded area, an approach called geofencing, inside which the car is free to drive. A great example of this is what Waymo is doing in Arizona, where it has been testing driverless cars, without a safety driver in the seat, for more than a year and over 10 million miles.
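To make the idea of a geofence concrete, here is a minimal sketch of how software might check whether a vehicle is inside its permitted area. It uses the standard ray-casting point-in-polygon test. The function name, the flat (x, y) coordinates, and the rectangular service area are all assumptions made for illustration, not a description of how Waymo or any other developer implements geofencing.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in a flat, planar coordinate system


def inside_geofence(point: Point, fence: List[Point]) -> bool:
    """Ray-casting test: cast a horizontal ray from the point and
    flip the inside/outside flag at each polygon edge it crosses."""
    x, y = point
    inside = False
    n = len(fence)
    for i in range(n):
        x1, y1 = fence[i]
        x2, y2 = fence[(i + 1) % n]
        # Does this edge straddle the horizontal line through the point?
        if (y1 > y) != (y2 > y):
            # x-coordinate where the edge crosses that horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# Hypothetical rectangular service area, coordinates in kilometres
SERVICE_AREA = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0), (0.0, 5.0)]

in_area = inside_geofence((3.0, 2.0), SERVICE_AREA)       # point within the rectangle
out_of_area = inside_geofence((12.0, 2.0), SERVICE_AREA)  # point east of the rectangle
```

A real system would work with geographic coordinates and far more detailed maps, but the core question is the same: is the car's current position inside the approved boundary?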

Are There Any Level 4 Vehicles Today?

As of today, Oxbotica is the only company testing Level 4 vehicles in real-world conditions. The company is running a government-backed project in the UK, where the autonomous vehicles will actually be on the road alongside regular cars. Six Ford Mondeos outfitted by Oxbotica and its partners will operate on a nine-mile circuit from Oxford Parkway station to the city’s main train station. The Level 4 prototypes will drive in day and night conditions. Let’s see what these cars look like:

On the roof of the car, we see the LiDAR unit. LiDAR is a remote sensing method that uses light in the form of a pulsed laser to measure ranges (variable distances) between the car and an object. These LiDARs create a 3D point cloud that needs to be annotated by human data annotators. Annotation techniques range from simple labeling to more advanced methods such as segmentation and object classification.
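The range measurement described above can be sketched in a few lines. A pulsed LiDAR times how long a laser pulse takes to travel to an object and back, so the range is the speed of light multiplied by half the round-trip time. The function names and the axis convention (x forward, y left, z up) below are assumptions for illustration and do not describe any particular sensor.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_to_range(round_trip_s: float) -> float:
    """Range in metres from the pulse round-trip time.
    The pulse travels out and back, so the distance is halved."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float) -> Tuple[float, float, float]:
    """Convert one return (range plus beam angles) into an (x, y, z) point.
    Assumed convention: x forward, y left, z up, angles in degrees."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


# A pulse that returns after 200 nanoseconds hit something about 30 m away
range_m = tof_to_range(200e-9)
point = to_point(range_m, azimuth_deg=0.0, elevation_deg=0.0)
```

Repeating this conversion for every return in a sweep produces the 3D point cloud that annotators then label.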

Having said this, it’s worth pointing out that, according to J.D. Power, as of May 2021, no vehicles sold in the U.S. market have a Level 3, Level 4, or Level 5 automated driving system. All of them require an alert driver sitting in the driver’s seat, ready to take control at any time. If you believe otherwise, you are mistaken, and it could cost you your life, the life of someone you love, or the life of an innocent bystander.

What Can We Expect From Autonomous Vehicles in 2022?

It looks like we are still a long way from seeing Level 4 automation on the road, but let’s look at some of the most promising AI-enabled vehicles we can expect to see in 2022. First of all, the cars created by General Motors will be equipped with Super Cruise, which includes adaptive cruise control that speeds up and slows down the vehicle to maintain a driver-selected distance from the vehicle ahead. It also has a lane-keeping system that tries to center the car in its lane, even through curves in the road, and automatic emergency braking that brakes the car in an attempt to avert a collision.
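To illustrate the idea behind adaptive cruise control, here is a simplified sketch of a controller that keeps a chosen following gap. It is a basic proportional rule with assumed gains and limits, written purely for illustration. It is not how Super Cruise or any production system is implemented.

```python
def acc_acceleration(
    own_speed: float,        # m/s, speed of our car
    lead_speed: float,       # m/s, speed of the vehicle ahead
    gap: float,              # m, measured distance to the vehicle ahead
    time_gap: float = 2.0,   # s, driver-selected following gap (assumed value)
    k_gap: float = 0.2,      # gain on the gap error (assumed value)
    k_speed: float = 0.5,    # gain on the speed difference (assumed value)
    max_accel: float = 1.5,  # m/s^2, comfort limit on acceleration (assumed)
    max_brake: float = -3.0, # m/s^2, comfort limit on braking (assumed)
) -> float:
    """Return an acceleration command in m/s^2.
    Positive values speed the car up, negative values slow it down."""
    desired_gap = own_speed * time_gap
    command = k_gap * (gap - desired_gap) + k_speed * (lead_speed - own_speed)
    # Clamp the command to the comfort limits
    return max(max_brake, min(max_accel, command))


# Following at exactly the desired gap and the same speed: no change needed
steady = acc_acceleration(own_speed=30.0, lead_speed=30.0, gap=60.0)

# Too close to a slower car: the command saturates at the braking limit
closing_in = acc_acceleration(own_speed=30.0, lead_speed=25.0, gap=40.0)
```

The design choice here is a time-based gap: the desired distance grows with speed, which matches how drivers usually pick a following distance in seconds and not in metres.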

Still, this is only Level 2 automation since it requires the driver to stay alert and keep their hands near the wheel. It includes a driver monitoring system that watches the driver’s eyes and warns them if their attention seems to be drifting from the road.
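As a rough sketch of how such a warning could be triggered, the example below keeps a sliding window of per-frame gaze results and raises a warning when the driver's eyes have been off the road for too large a share of recent frames. The class name, window size, and threshold are hypothetical, and the per-frame "eyes on road" decision is assumed to come from a separate vision model that is not shown.

```python
from collections import deque


class AttentionMonitor:
    """Tracks recent gaze results and flags drifting attention."""

    def __init__(self, window_frames: int = 60, max_off_road_fraction: float = 0.5):
        # Only the most recent `window_frames` results are kept
        self.frames = deque(maxlen=window_frames)
        self.max_off_road_fraction = max_off_road_fraction

    def update(self, eyes_on_road: bool) -> bool:
        """Record one camera frame; return True if a warning should be raised."""
        self.frames.append(eyes_on_road)
        if len(self.frames) < self.frames.maxlen:
            return False  # not enough history yet
        off_road = self.frames.count(False)
        return off_road / len(self.frames) > self.max_off_road_fraction


# Tiny window so the behaviour is easy to follow
monitor = AttentionMonitor(window_frames=4, max_off_road_fraction=0.5)
warnings = [monitor.update(on_road) for on_road in [True, True, False, False, False]]
```

In this run the warning fires only on the last frame, once three of the four most recent frames show the driver looking away.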

We actually provided some data collection and annotation for a company working on such a product. For this project, our client needed data about people’s eye reactions to external factors at varying degrees of fatigue and under the influence of various other factors. To assemble the needed data, our team members stayed awake for an extended period of time, and we then recorded and annotated all of the signs of fatigue and drowsiness. You can read more about this in our previous blog article.

Perhaps the most we can expect in the near future is to see more advanced models in geofenced conditions, since there are fewer parameters that need to be accounted for in such situations.

Mindy Support Provides Comprehensive Data Annotation Services

Whether you need LiDAR, videos, images, or any other type of data annotation, Mindy Support’s got you covered. We are the largest data annotation company in Eastern Europe with more than 2,000 employees in eight locations all over Ukraine and in other geographies globally. Contact us today to learn more about how we can help you.