Video Content Moderation in 20+ Languages for Large Video-Sharing Platform

Company Profile

Industry: Video Sharing / Digital Media

Location: Global (HQ in North America, operations worldwide)

Size: Millions of videos uploaded daily; active in 100+ countries

Company Bio

This client operates one of the world’s most recognized video platforms, offering creators and audiences a space to share ideas, stories, and real-time content. But with the platform’s scale came complexity – hundreds of hours of video were uploaded every minute, in dozens of languages and formats. Their internal moderation systems were powerful, but not perfect. They needed human judgement, linguistic precision, and operational scalability – all without compromising on speed or accuracy.

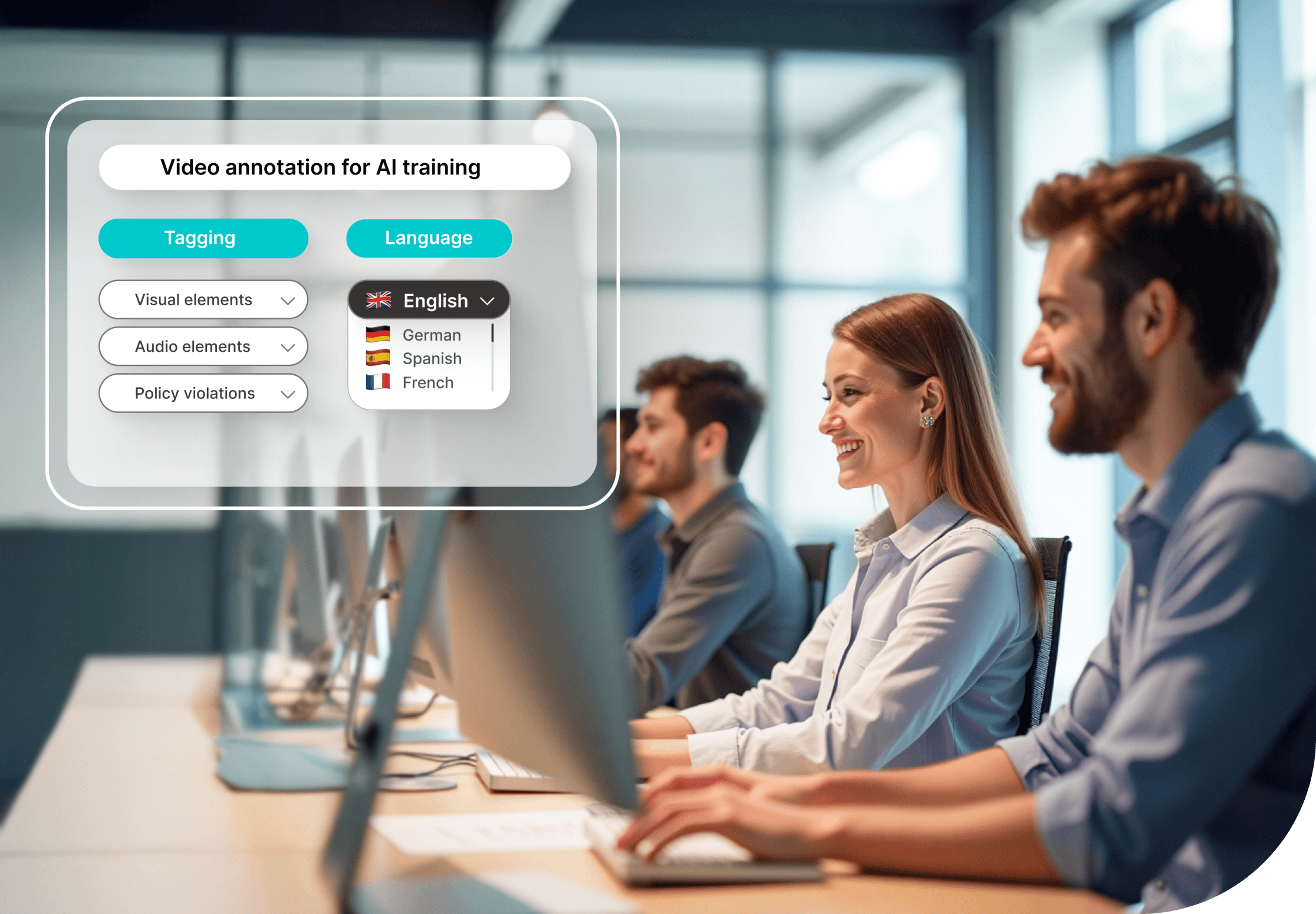

Services Provided

- Human-led video content moderation in 20+ languages

- High-volume data annotation for AI/ML systems

- Content flagging, categorization & triage workflows

- Real-time livestream and escalation review

- Custom localization of moderation guidelines

- Automation-augmented quality review and LLM feedback loops

Project Overview

When we first spoke with the client, they were honest: “We’ve built incredible automation – but our edge cases are growing, and they’re hard to catch.” What they needed wasn’t just a vendor – they needed a thought partner with the ability to blend linguistic intuition with technical fluency. That’s where Mindy Support came in.

We brought more than just moderators – we brought linguists, cultural experts, and policy interpreters trained to make sensitive calls in real time. From political misinformation in Spanish to borderline satire in Japanese, we helped the client navigate the global complexity of content moderation – at speed and scale.

Business Problem

- Contextual blind spots in automation: The platform’s AI missed nuance – irony, sarcasm, cultural cues – especially across languages.

- Livestream risks rising: Real-time events needed real-time judgement – bots weren’t fast or flexible enough.

- Inconsistent policy enforcement: As guidelines changed, retraining internal teams across time zones proved challenging.

- Global expansion demanded linguistic accuracy: Misinformation, hate speech, and policy violations often appeared in localized forms that automation couldn’t interpret.

Why Mindy Support

We approached the challenge from both sides – human and machine.

- We built multilingual moderation teams from our global talent pool – not just fluent speakers, but linguists trained in media context, regional slang, and cultural norms.

- We integrated with the client’s automation stack, using AI to pre-screen content and direct human review where it mattered most.

- We refined policy guides with real-world feedback, helping the client evolve their moderation playbook as new types of content emerged.

- We ensured security, compliance, and transparency at every step, aligning with GDPR, COPPA, and local content laws.
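The pre-screening step described above can be pictured as a simple routing rule. This is a minimal sketch only, not the client's actual integration: the `PreScreenResult` type, the `route` function, the queue names, and both thresholds are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical thresholds; the case study does not state real values.
AUTO_ALLOW_BELOW = 0.10
AUTO_BLOCK_ABOVE = 0.95


@dataclass
class PreScreenResult:
    video_id: str
    language: str           # e.g. "es", "ja"
    violation_score: float  # model's estimated probability of a violation


def route(result: PreScreenResult) -> str:
    """Decide where a pre-screened video goes next."""
    if result.violation_score >= AUTO_BLOCK_ABOVE:
        return "auto_block"
    if result.violation_score < AUTO_ALLOW_BELOW:
        return "auto_allow"
    # Ambiguous scores are sent to a language-specific human review queue.
    return f"human_review:{result.language}"
```

Under these assumptions, only the ambiguous middle band reaches a moderator, which is where irony, sarcasm, and cultural cues are most likely to matter.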

Solutions Delivered to the Client

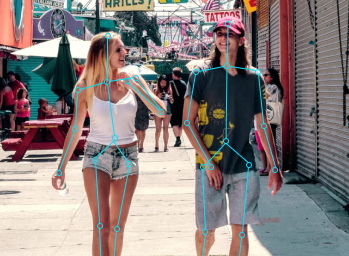

1/ Human-Led Moderation with Real Linguistic Expertise

We handpicked native-speaking moderators for each target region – trained not just on platform policy, but on local nuance. A video flagged in Arabic might require cultural context; a satirical upload in French might need tone recognition. Our linguists made the difference.

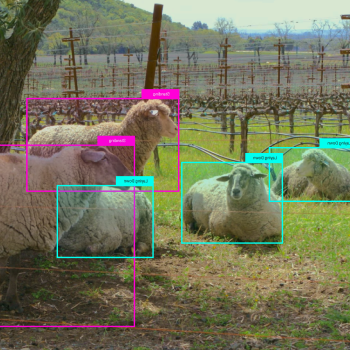

2/ Data Annotation to Power the Client’s AI

Every piece of reviewed content was also an opportunity to train the client’s models. We annotated thousands of clips for visual elements, audio cues, intent, and compliance – creating clean, usable training data for their moderation AI.

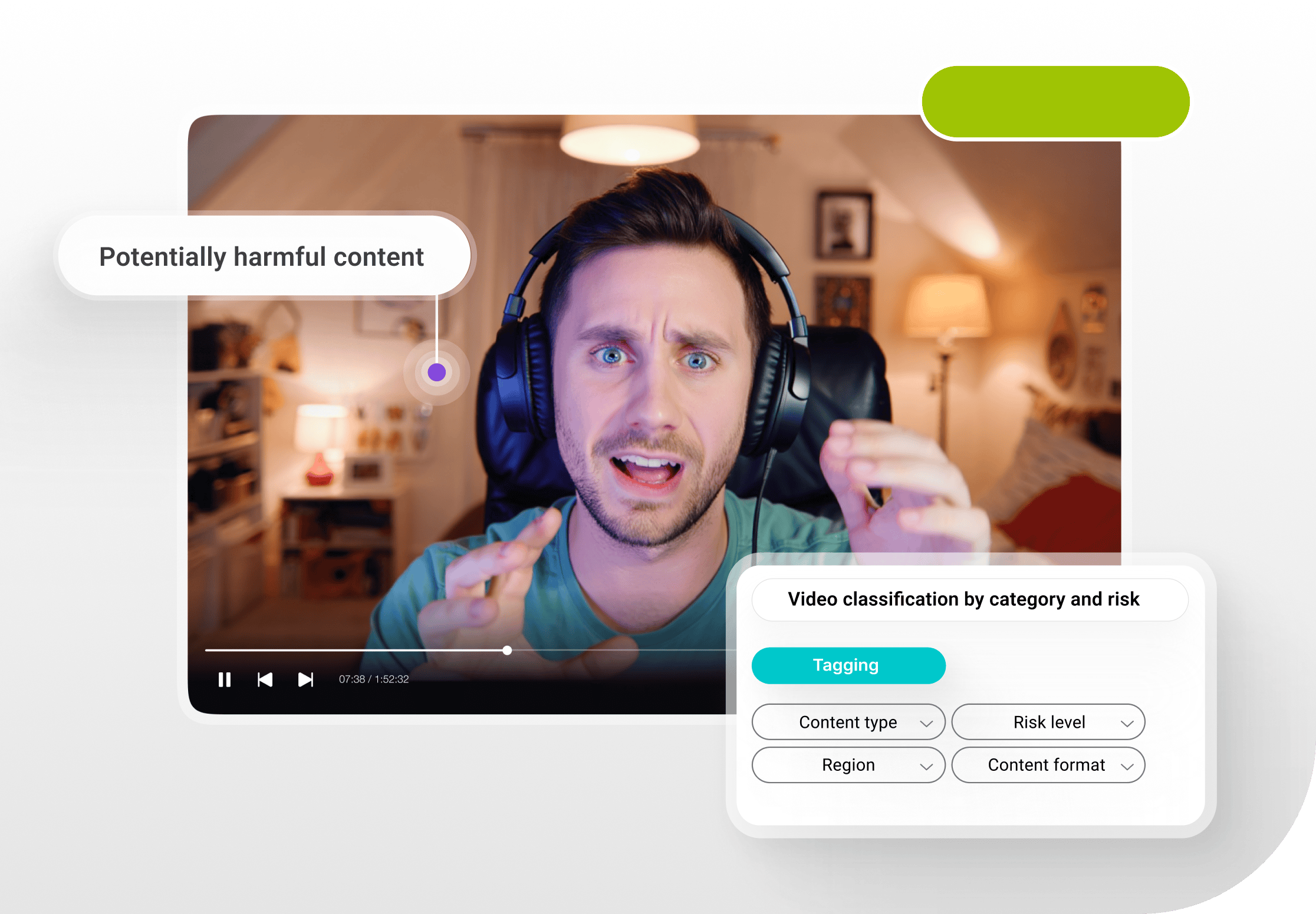

3/ Structured Categorization and Risk Triage

We didn’t just say “violates policy” – we explained why. Content was tagged by category, severity, region, and risk type. This allowed internal teams to prioritize escalations and better manage sensitive content pipelines.
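A tag of this kind can be sketched as a small record plus a sort. The field names follow the four dimensions named above; the `ModerationTag` and `Severity` types, the severity levels, and the `triage` helper are hypothetical and are not the client's schema.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # Illustrative levels; the real scale is not described in this case study.
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class ModerationTag:
    video_id: str
    category: str    # e.g. "hate_speech", "misinformation"
    severity: Severity
    region: str      # e.g. "BR", "KR"
    risk_type: str   # e.g. "legal", "safety"
    rationale: str   # the "why" behind the decision


def triage(tags: list[ModerationTag]) -> list[ModerationTag]:
    """Order tags most severe first; equal severities keep their input order."""
    return sorted(tags, key=lambda tag: -tag.severity)
```

Because Python's `sorted` is stable, items with the same severity stay in arrival order, so an escalation queue built this way is predictable for the teams working through it.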

4/ Livestream & Escalation Review in Real Time

With live events, there’s no room for delay. Our specialists were positioned to review flagged livestreams in under 60 seconds, ensuring dangerous content never reached wide circulation.

5/ Customized Guideline Localization

The client’s policy team created global standards – we helped translate them into region-specific workflows. This kept enforcement consistent but sensitive to cultural and legal variations.

6/ LLM-Aware Feedback Loops

We tracked where the client’s automated systems got things wrong – and used that data to improve them. By feeding edge-case corrections and linguistic context back into the AI pipeline, we helped the client’s LLMs get smarter, faster, and more reliable.

Key Results

- 99% Accuracy Rate on Human Review Tasks

Our moderators consistently met or exceeded client QA targets, with near-zero critical errors.

- 70% Reduction in False Positives from AI

Thanks to our data feedback and training sets, the client’s automation became more accurate over time.

- Sub-60 Second Latency on Livestream Moderation

Our team’s responsiveness helped the client prevent harmful or sensitive content from going viral.

- 20+ Languages Covered with Region-Specific Moderation

From Korean to Portuguese, we supported nuanced decisions in native context.

- Continuous Improvement via LLM Feedback Loops

Our annotation data didn’t just stop at review – it powered the next wave of smarter AI moderation.

- Trusted Long-Term Partner

We remain the client’s go-to vendor for multilingual video moderation, and continue to expand our scope.
