Annotating Personally Identifiable Information in 13 Languages

Client Profile

Industry: Technology innovation

Location: USA

Size: 50,000+ employees

Company Bio

The client is a Fortune 500 company with delivery centers across North America, Asia, and Europe. They deliver strategic technology and business transformation solutions to their customers, enabling them to operate as leaders within their fields and empowering them to transition to new technologies, embrace new service delivery models, and enhance the business value provided by IT.

Services Provided

Project Overview

Mindy Support’s data annotation team meticulously labeled personally identifiable information (PII) in 13 languages to train the client’s AI tool. With an accuracy rate surpassing 99% and no need for rework, our experienced team ensured all necessary information was correctly annotated. Thanks to their diligent efforts, the client saw a notable enhancement in the quality of their training data, leading to overall improvements in their AI product’s quality.

Business Problem

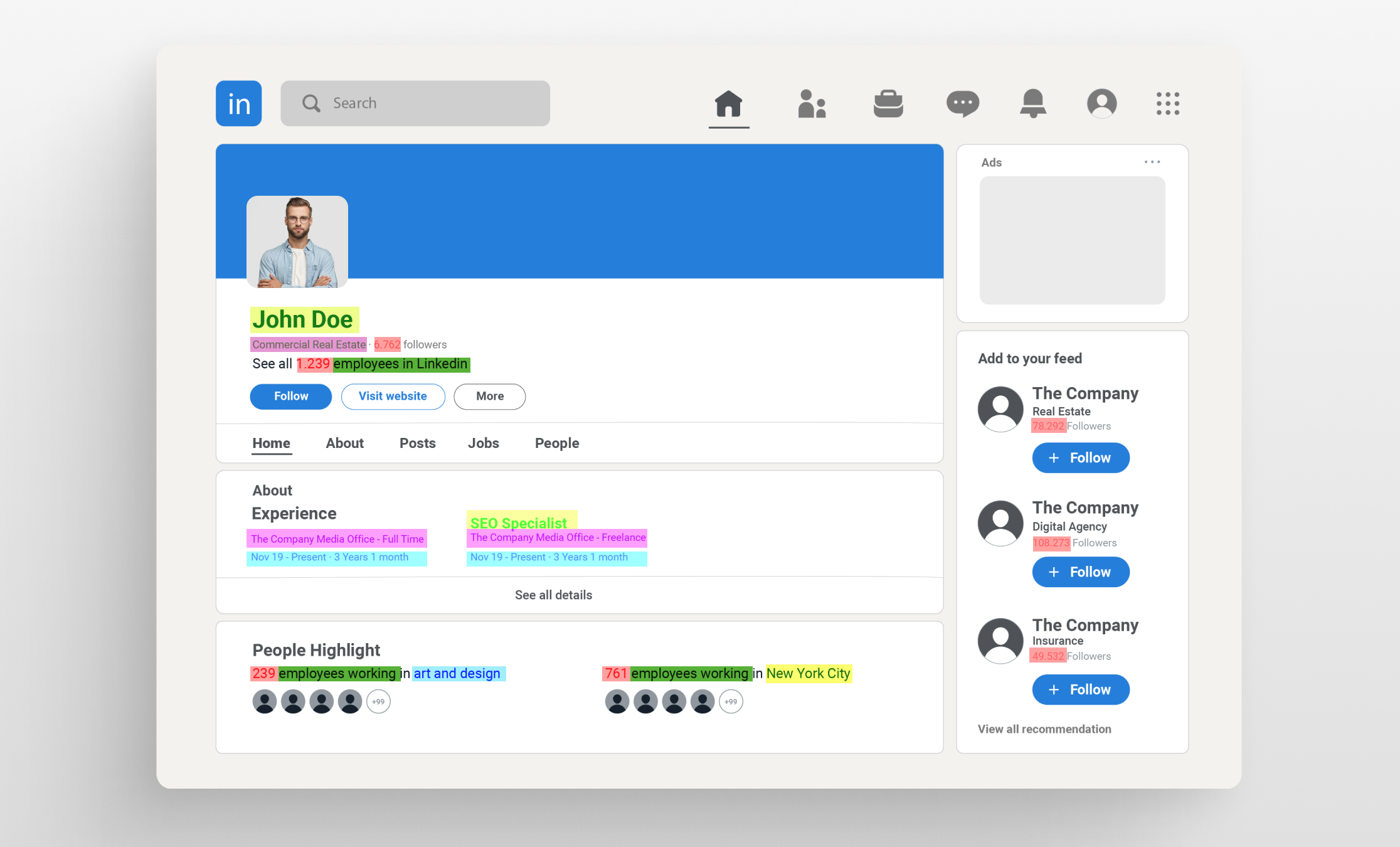

The client was developing an AI solution that could extract PII from all kinds of forms, images, SMS messages, and other means of communication in order to help protect customer identities. To train this new solution, the client had a large dataset of 10,000 images containing PII in 13 languages. They were looking for a reliable data annotation provider to detect all PII in different text datasets, which usually consisted of data from social media, messages, shared images, and other means of communication. The PII needed to be categorized by type, such as addresses, names, and ID numbers. One important condition from the client was not to mark well-known data, such as the names of famous people in the target language region.
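
To make the labeling rule concrete, here is a minimal Python sketch of how categorized PII spans and the "do not mark well-known names" condition could be represented. It is an illustration only, not the client's platform or our production tooling: the category names, the `PIISpan` structure, the `WELL_KNOWN_NAMES` list, and the `filter_spans` helper are all hypothetical, and a real project would rely on locale-specific guidance from language experts rather than a hard-coded list.

```python
from dataclasses import dataclass
from enum import Enum


class PIICategory(Enum):
    # Hypothetical label set based on the types named in the brief.
    NAME = "name"
    ADDRESS = "address"
    ID_NUMBER = "id_number"


@dataclass(frozen=True)
class PIISpan:
    start: int            # index of the first character of the span
    end: int              # index one past the last character
    category: PIICategory


# Hypothetical per-locale exclusion list of famous names (assumption:
# names are matched case-insensitively on their exact surface form).
WELL_KNOWN_NAMES = {
    "en-US": {"abraham lincoln"},
}


def keep_span(text: str, span: PIISpan, locale: str) -> bool:
    """Return False only for NAME spans that match a well-known name."""
    if span.category is not PIICategory.NAME:
        return True
    surface = text[span.start:span.end].casefold()
    return surface not in WELL_KNOWN_NAMES.get(locale, set())


def filter_spans(text: str, spans: list[PIISpan], locale: str) -> list[PIISpan]:
    """Drop spans that the 'well-known data' rule says must not be marked."""
    return [s for s in spans if keep_span(text, s, locale)]
```

In this sketch a famous name is simply dropped from the list of spans, while private names, addresses, and ID numbers keep their category labels.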

The entire dataset needed to be annotated within a strict time frame of 7 months, with an accuracy rate of at least 98%.

Why Mindy Support

Mindy Support’s collaboration with this client extends beyond the current project. We had previously executed a successful OCR project for them, encompassing 13 languages. Leveraging our extensive experience in OCR technology, we delivered accurate results and met stringent deadlines. This longstanding partnership has fostered trust in our ability to consistently deliver exceptional results within specified timelines. With this proven track record, the client confidently entrusted us with the task of annotating personally identifiable information (PII) for their AI tool.

Solutions Delivered to the Client

Mindy Support assembled a team of 7 data annotators with extensive experience in named entity recognition to get the work done. We started by training our team on the client’s data annotation platform, which required macOS devices for all steps of the project (annotation and QA). Even though this was the first project from this client with an OS requirement, our internal IT team easily solved this problem by expanding the number of macOS devices in the company. In addition to the training on the client tool, we brought in language experts for each required locale, who helped the team identify all PII with a greater level of accuracy.

Thanks to our collective efforts and professionalism, we exceeded the client’s requirements and passed all of the client’s QA reports with a 99%+ quality score after the first round of annotation, without having to redo any of the work. The client was so satisfied with the results of the first batch that they decided to expand the project for an additional six months and awarded us additional projects.

Key Results

- 99%+ quality score without any rework

- 10,000 items of data annotated

- Completed annotation in 13 languages

- 7 full-time team members

We have a minimum threshold for starting any new project, which is 735 productive man-hours a month (equivalent to 5 graphic annotators working on the task monthly).